This is the full developer documentation for DOCS

# Get started with Aembit API

> Overview of how to get started learning about and using the Aembit API

The Aembit API v1 is a lightweight RESTful API. Clients call its endpoints using standard HTTP methods and URLs to perform Create, Read, Update, and Delete (CRUD) operations and to access different types of resources and information. Request bodies use JSON, and responses are returned as JSON.

Create, Read, Update, and Delete operations map to the following HTTP methods (REST verbs):

* **Create** - `POST`

* **Read** - `GET`

* **Update** - `PUT` or `PATCH`

* **Delete** - `DELETE`

The Aembit API follows REST standards and best practices and is designed to be easy to use, with minimal complexity.

Note

The Aembit API documentation assumes you already have a basic understanding of REST API concepts, terminology, and syntax. If you are not familiar with REST, or need a refresher, see the [RESTful API Tutorial](https://restfulapi.net/) for comprehensive information about REST APIs and how to use them.

## Authentication


To ensure that only authorized users can access the Aembit REST API, Aembit requires every request to be authenticated. Several authentication methods are supported, depending on the type of API client you want to implement.

The sections below describe the available authentication methods.

### Using the Session Access Token


One way to authenticate to the Aembit API is to include a session-specific access token in your API requests. When you sign in to your tenant, Aembit assigns you an access token for that session.

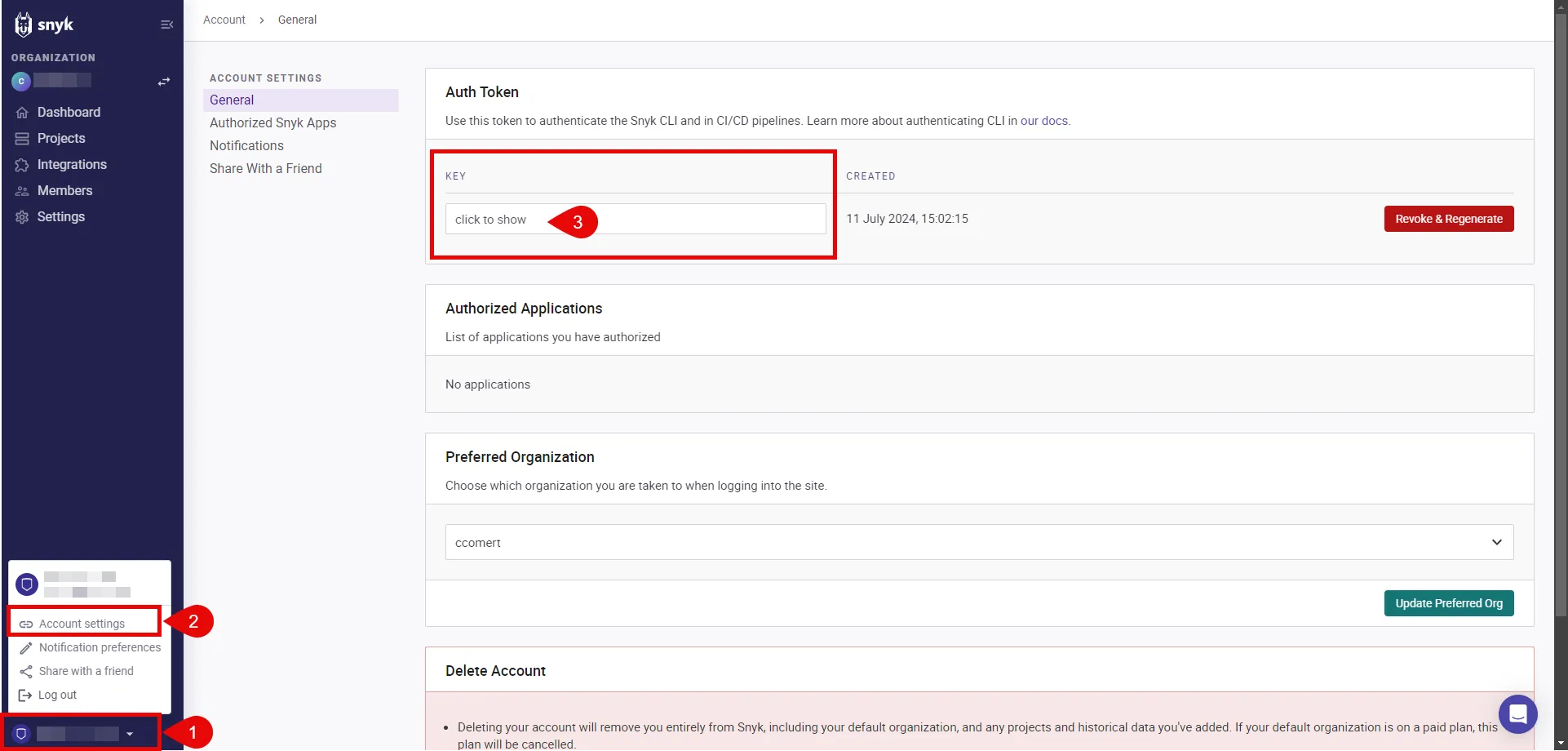

To locate the access token, follow the steps below.

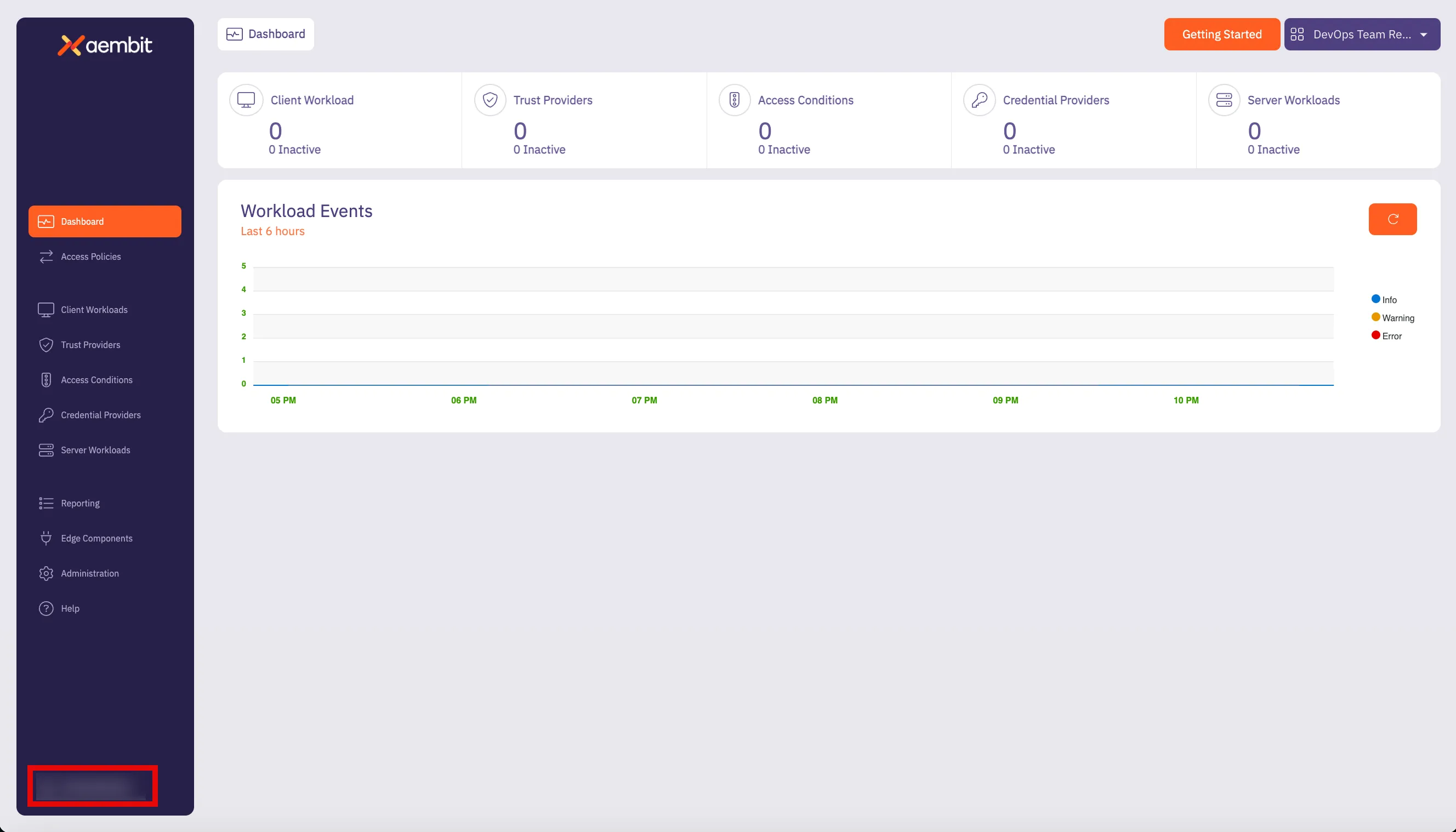

1. Log in to your tenant. The Dashboard page appears.

2. In the bottom-left corner of the Dashboard page, hover over your name. Notice that a **Profile** link appears.

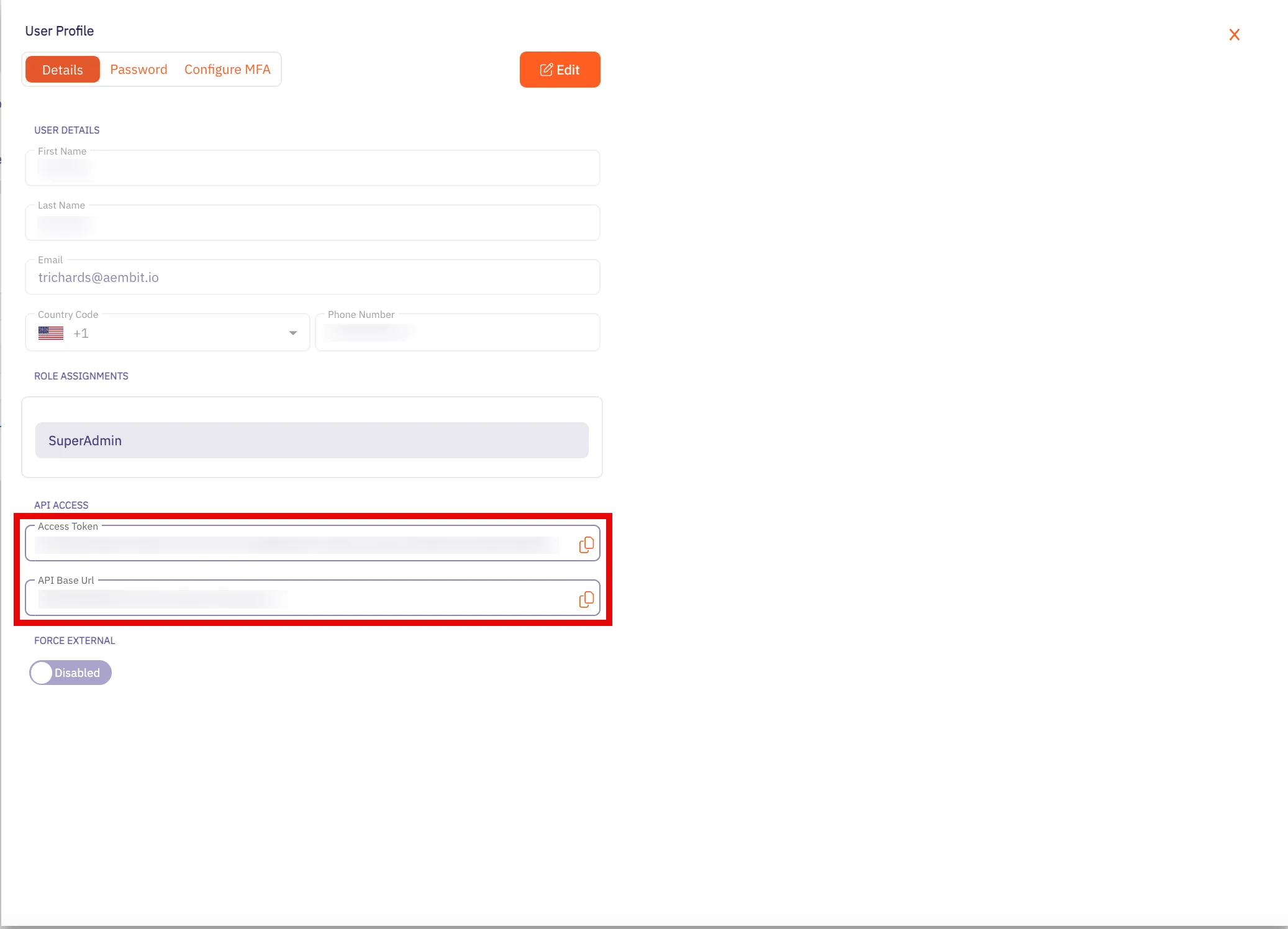

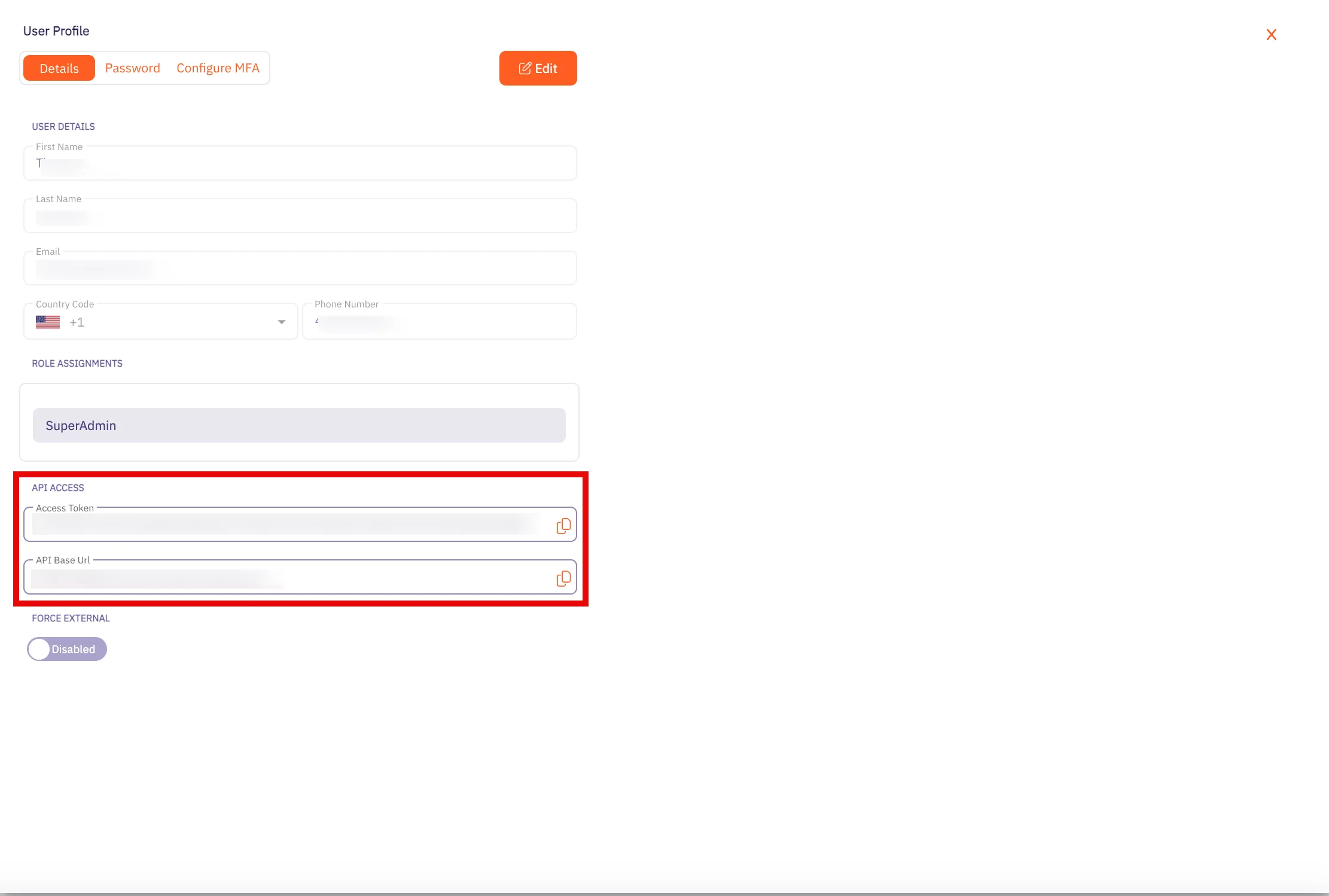

3. Click the **Profile** link. The User Profile dialog window appears.

4. In the API Access section of the dialog window, copy the values in the **Access Token** and **API Base Url** fields.

You may use the **Access Token** and **API Base Url** values in your API requests, as shown below in the [REST API Request Structure](#rest-api-request-structure) section.
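The following is a minimal sketch for reusing these values in a shell script. It uses the hypothetical variable names `AEMBIT_TOKEN` and `AEMBIT_BASE_URL`. It also assumes the base URL is the tenant host (for example, `https://your-tenant.aembit.io`) and that request paths start at `/api/v1`, as in the endpoint reference. By default the helper prints the `curl` command instead of sending it. Set `RUN=1` to send the request.

```bash
#!/usr/bin/env bash
# Hypothetical variable names; paste the values from the User Profile dialog.
AEMBIT_BASE_URL="${AEMBIT_BASE_URL:-https://your-tenant.aembit.io}"
AEMBIT_TOKEN="${AEMBIT_TOKEN:-<access-token>}"

# aembit_get PATH
# Builds a GET request for PATH that authenticates with the session token.
# Prints the command by default; executes it when RUN=1.
aembit_get() {
  local path="$1"
  local -a cmd
  cmd=(curl -sS -L
       -H "Authorization: Bearer ${AEMBIT_TOKEN}"
       "${AEMBIT_BASE_URL}${path}")
  if [[ "${RUN:-0}" == "1" ]]; then
    "${cmd[@]}"
  else
    # Display only: the quoting around the header value is not shown.
    echo "${cmd[*]}"
  fi
}

aembit_get /api/v1/server-workloads
```

The access token is tied to your session, so you will likely need to copy a new value after you sign in again.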

### Using Aembit Native Authentication


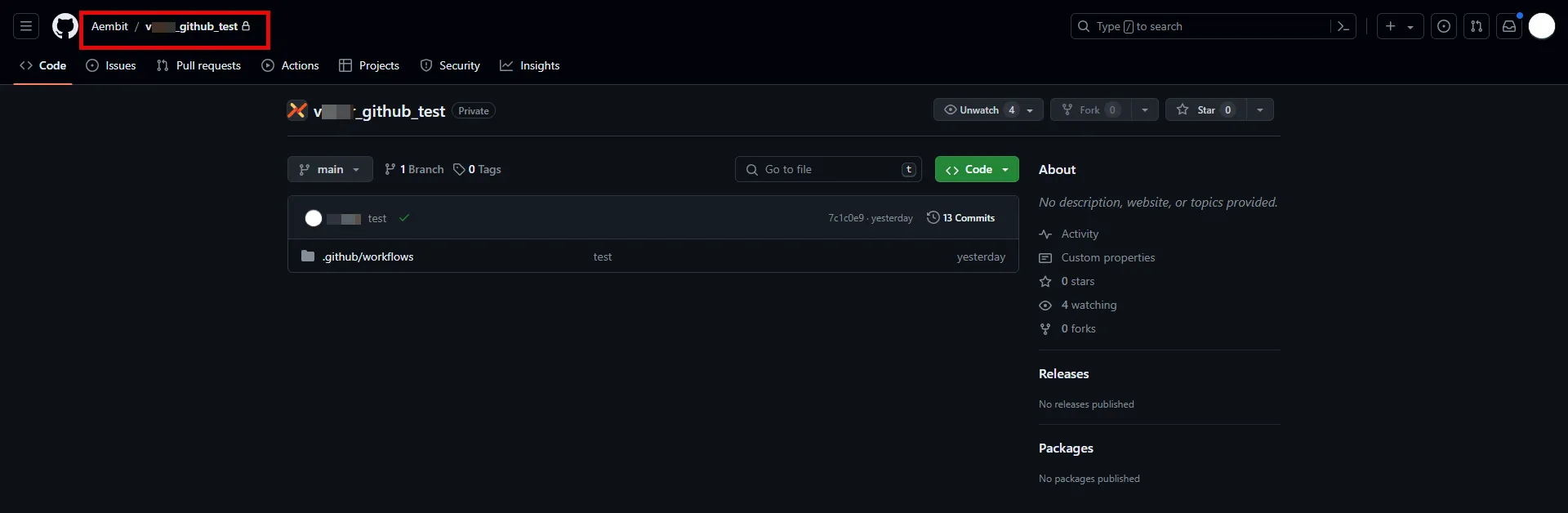

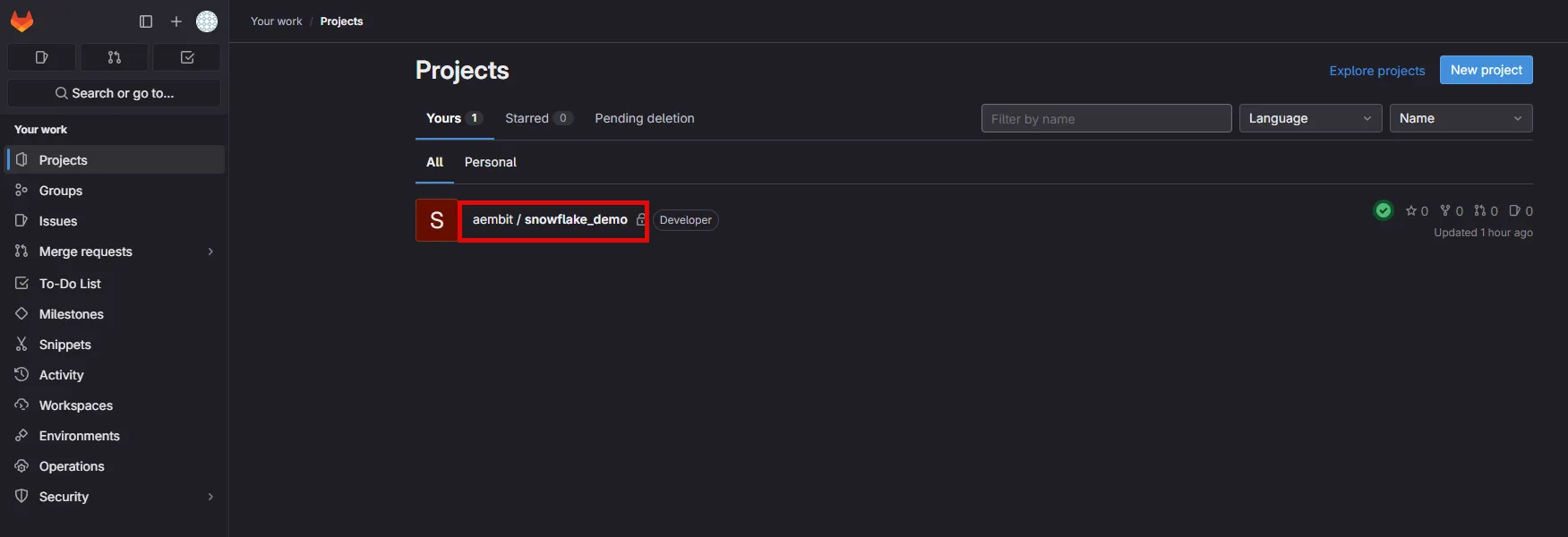

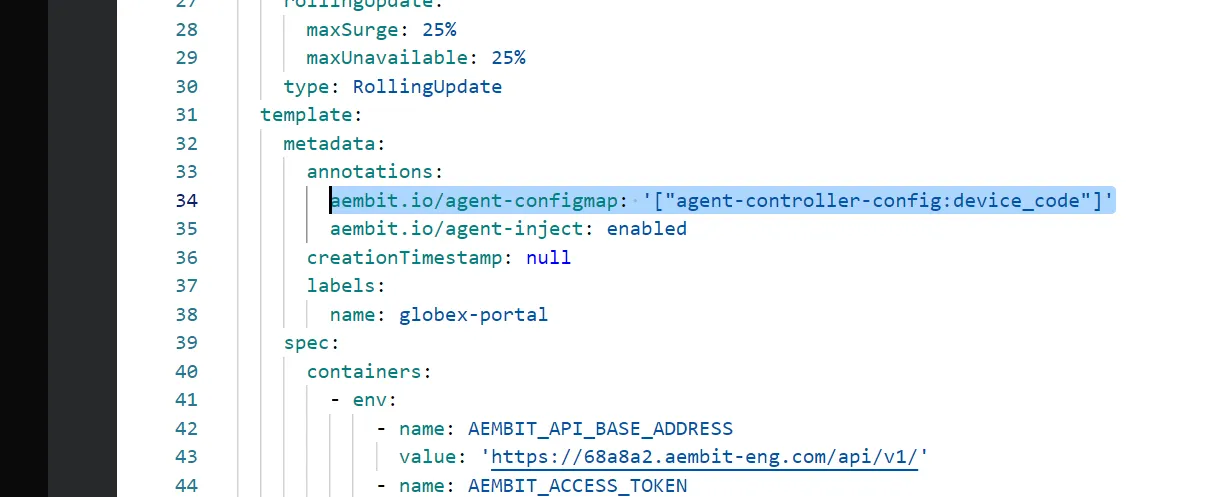

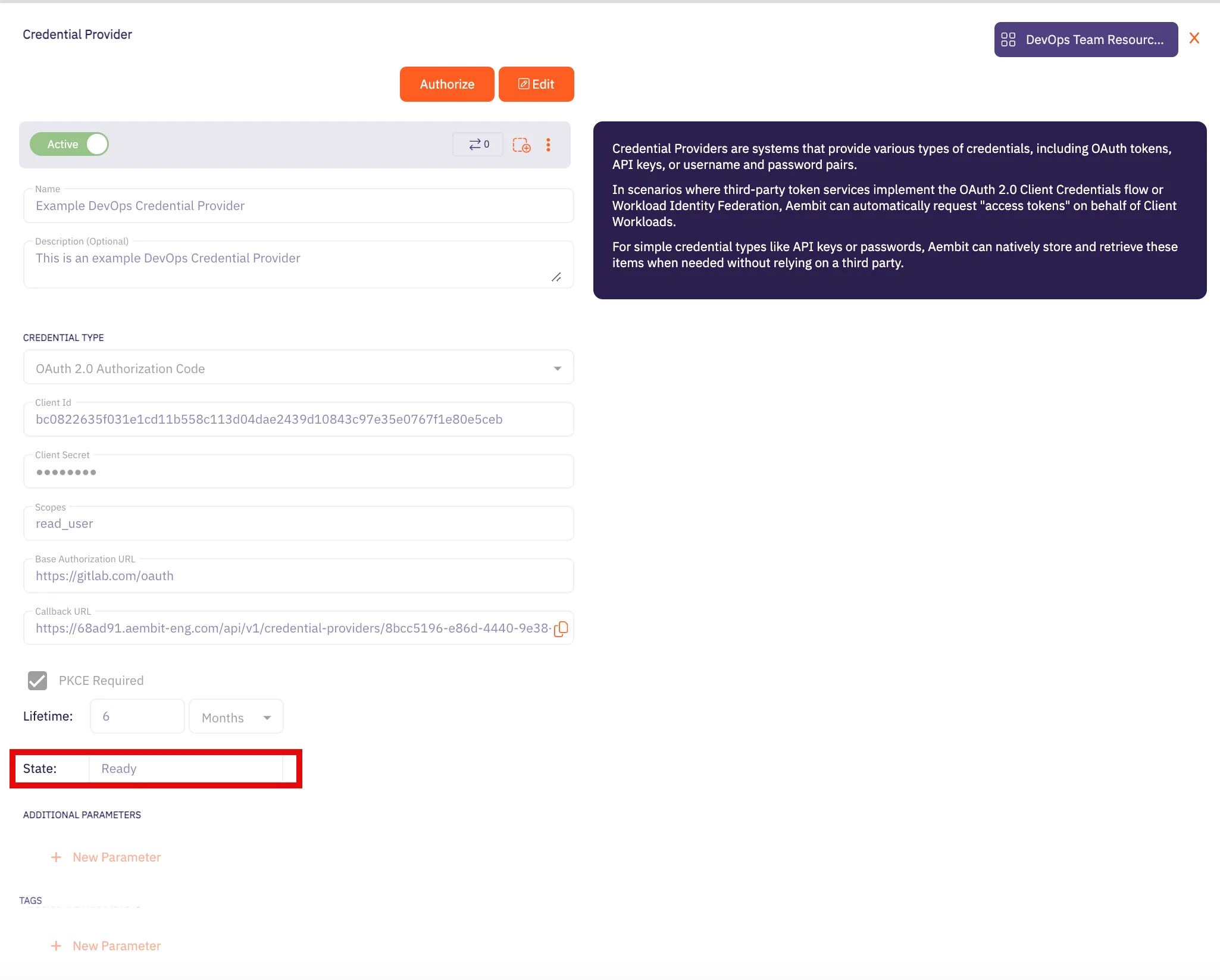

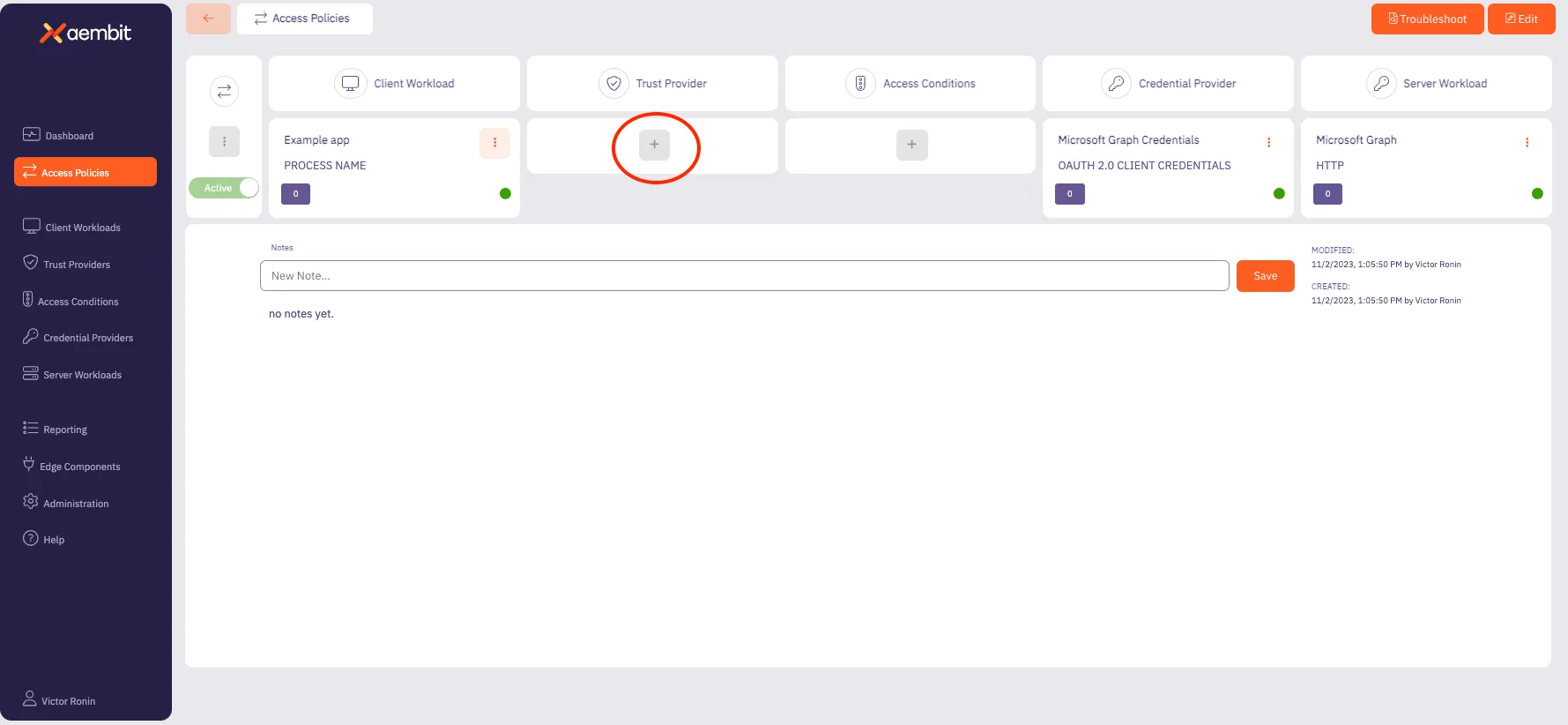

Aembit also supports authenticating to the Aembit API with a native authentication capability that uses OpenID Connect (OIDC) ID tokens. To use this capability, configure the following components in your Aembit Tenant:

* **Client Workload**: represents the source of your API requests (for example, GitHub Actions or GitLab jobs)

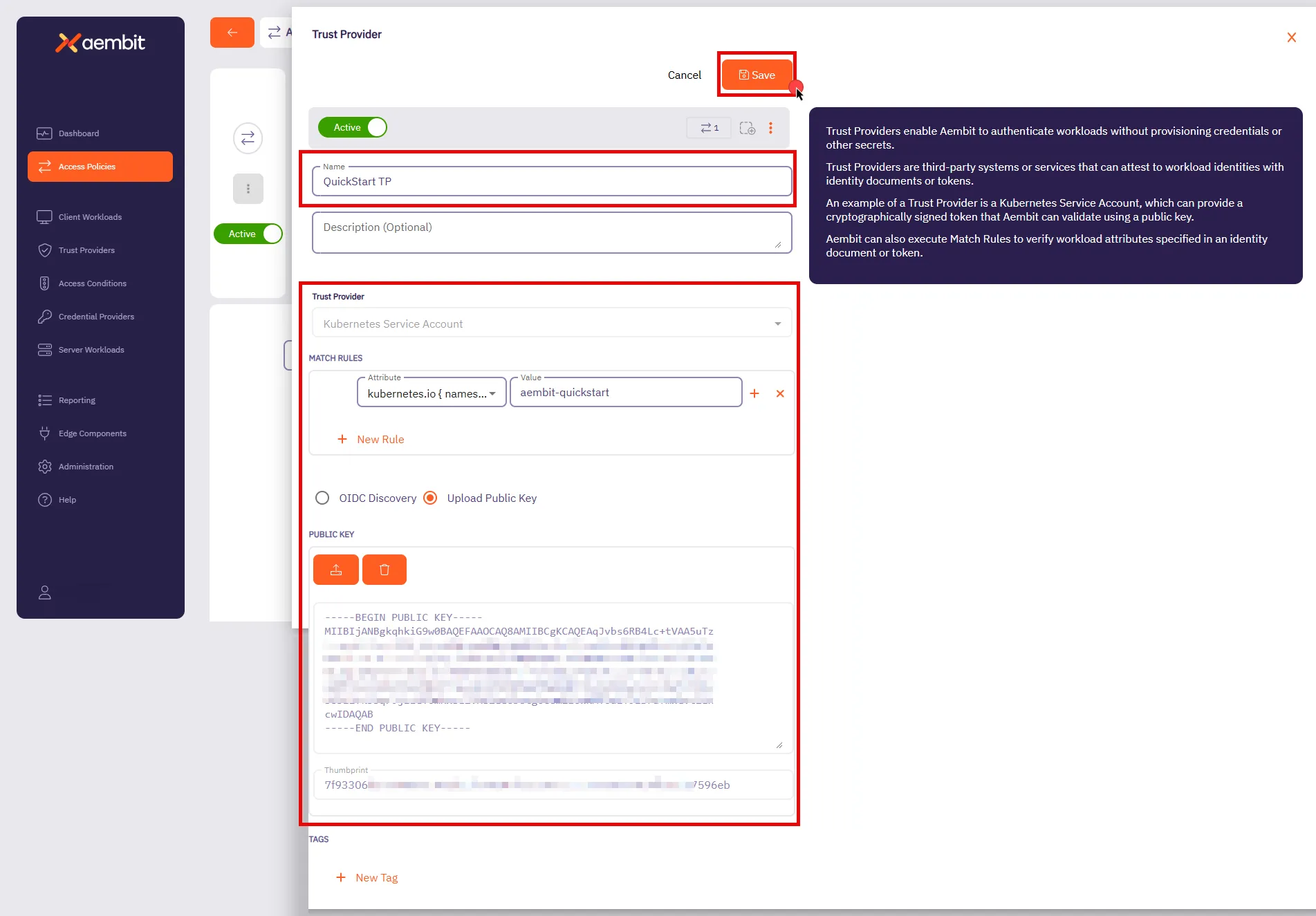

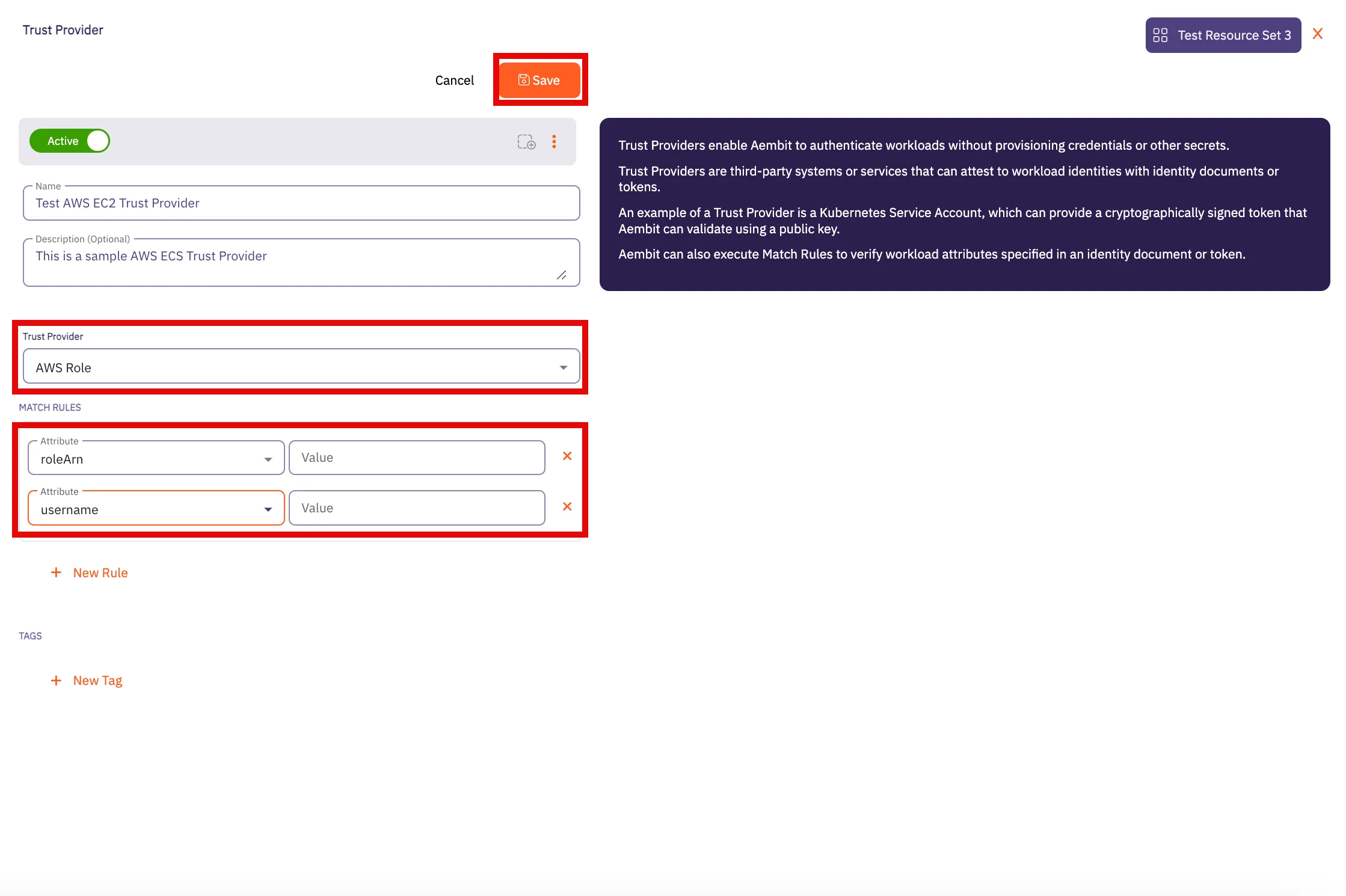

* **Trust Provider**: authenticates the Client Workload using cryptographic verification

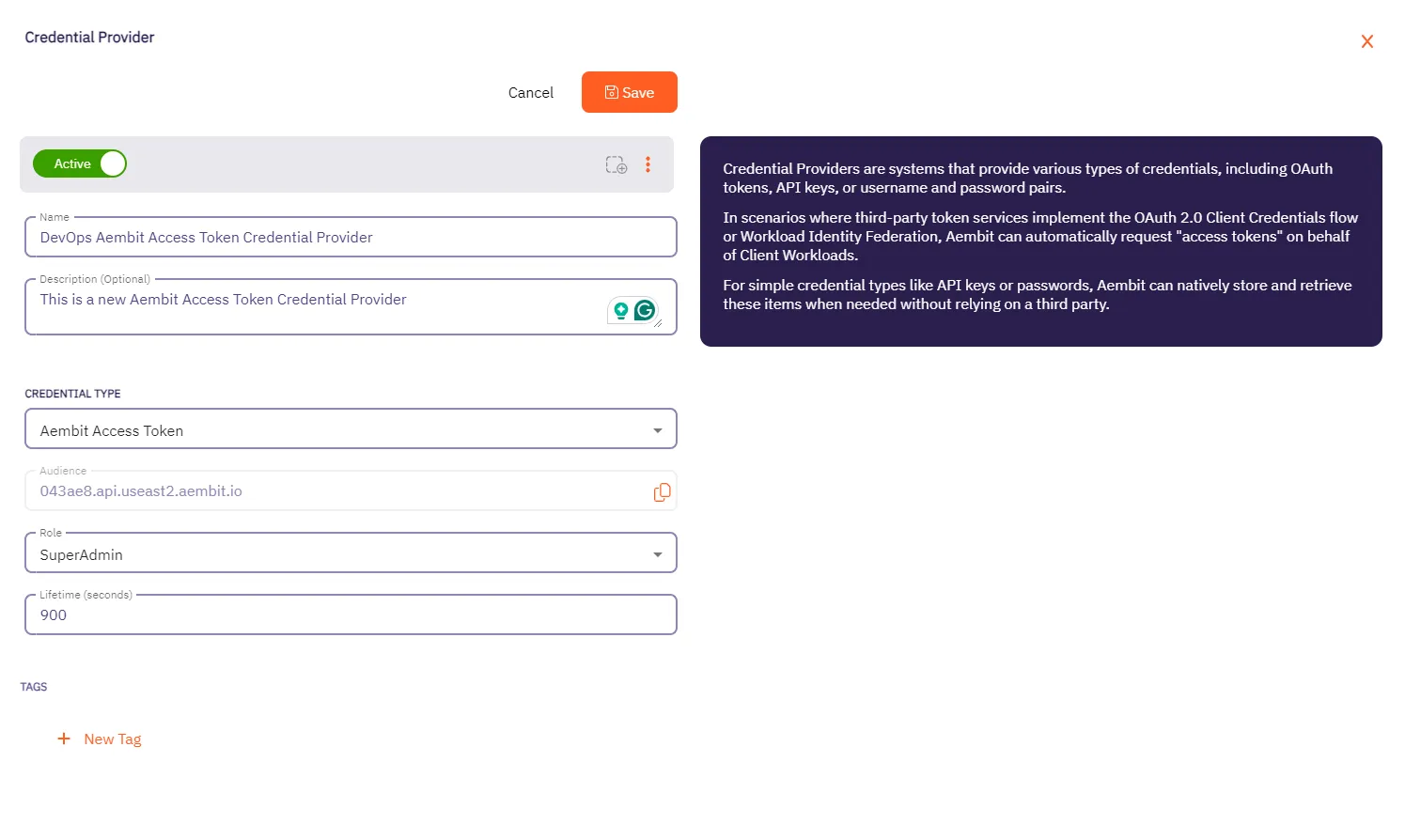

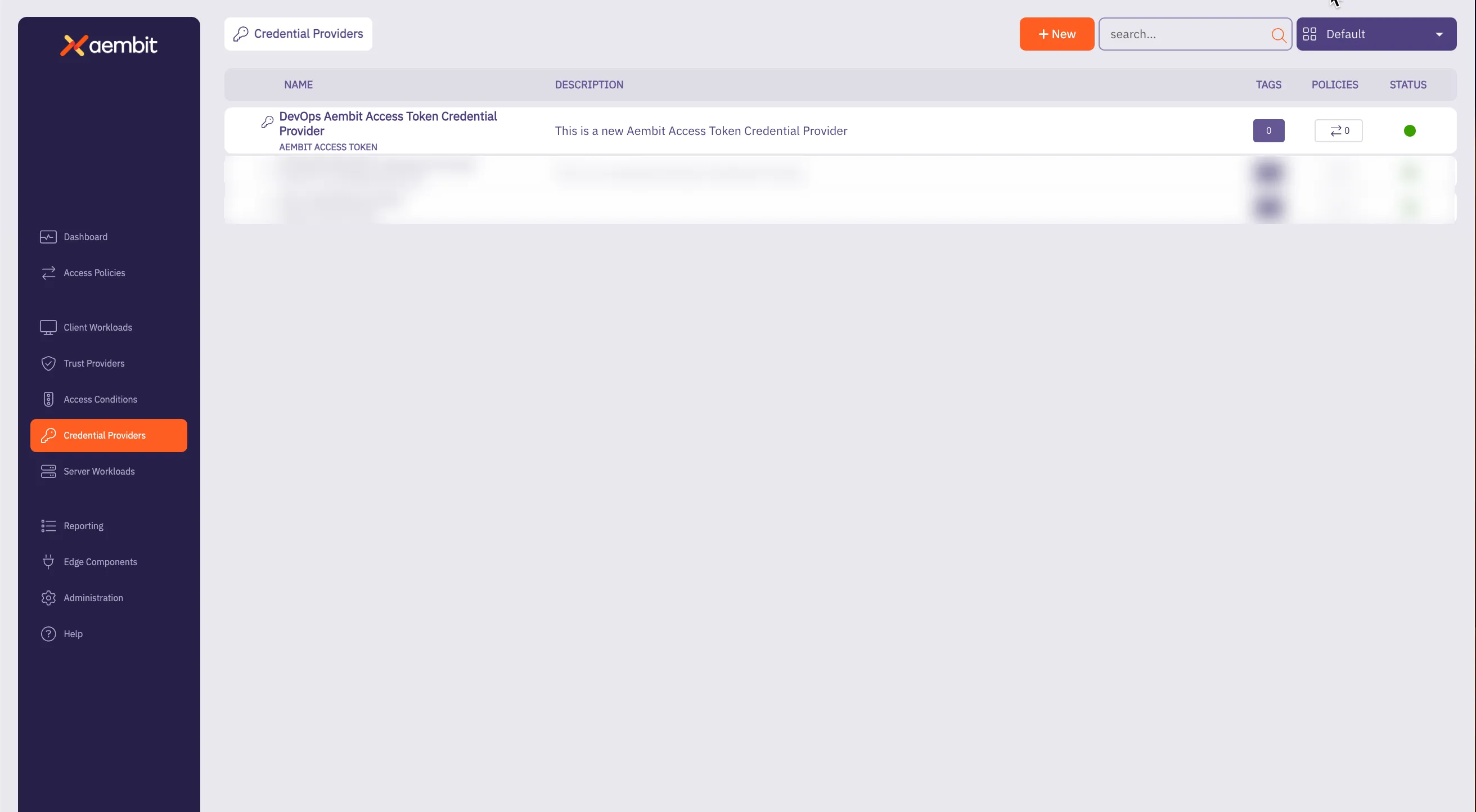

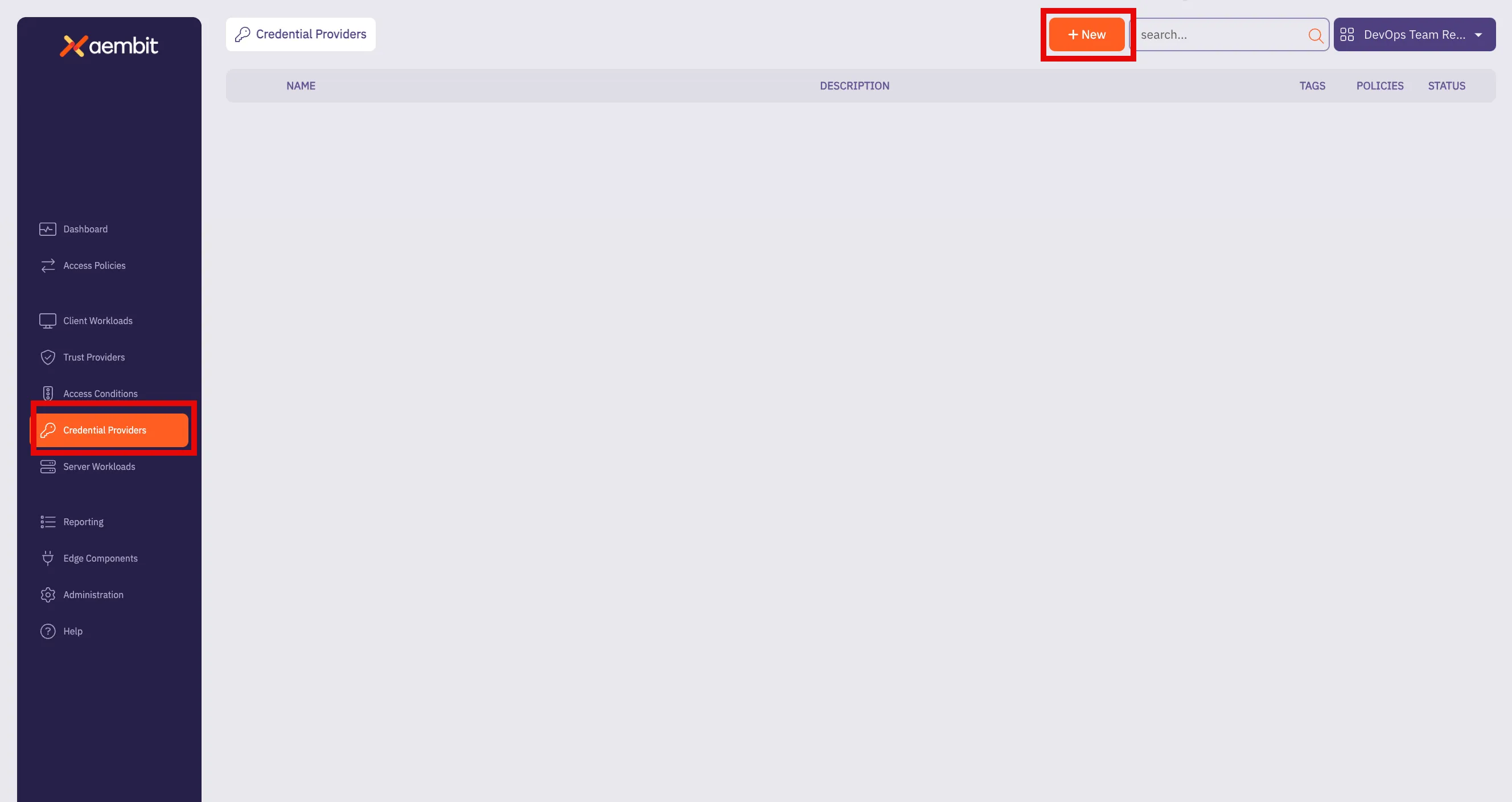

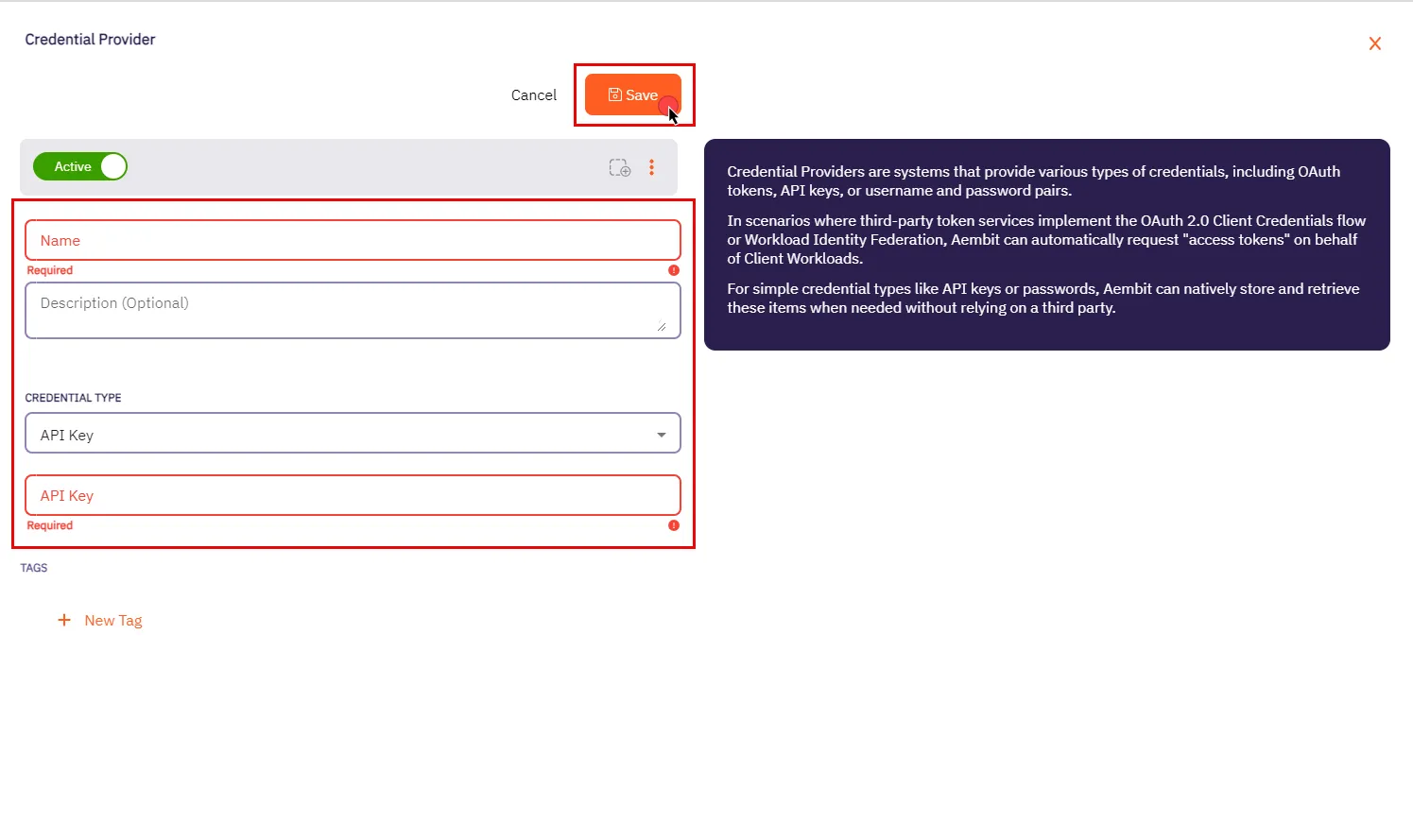

* **Credential Provider**: uses the Aembit Access Token type and a Role with permissions to the appropriate entities

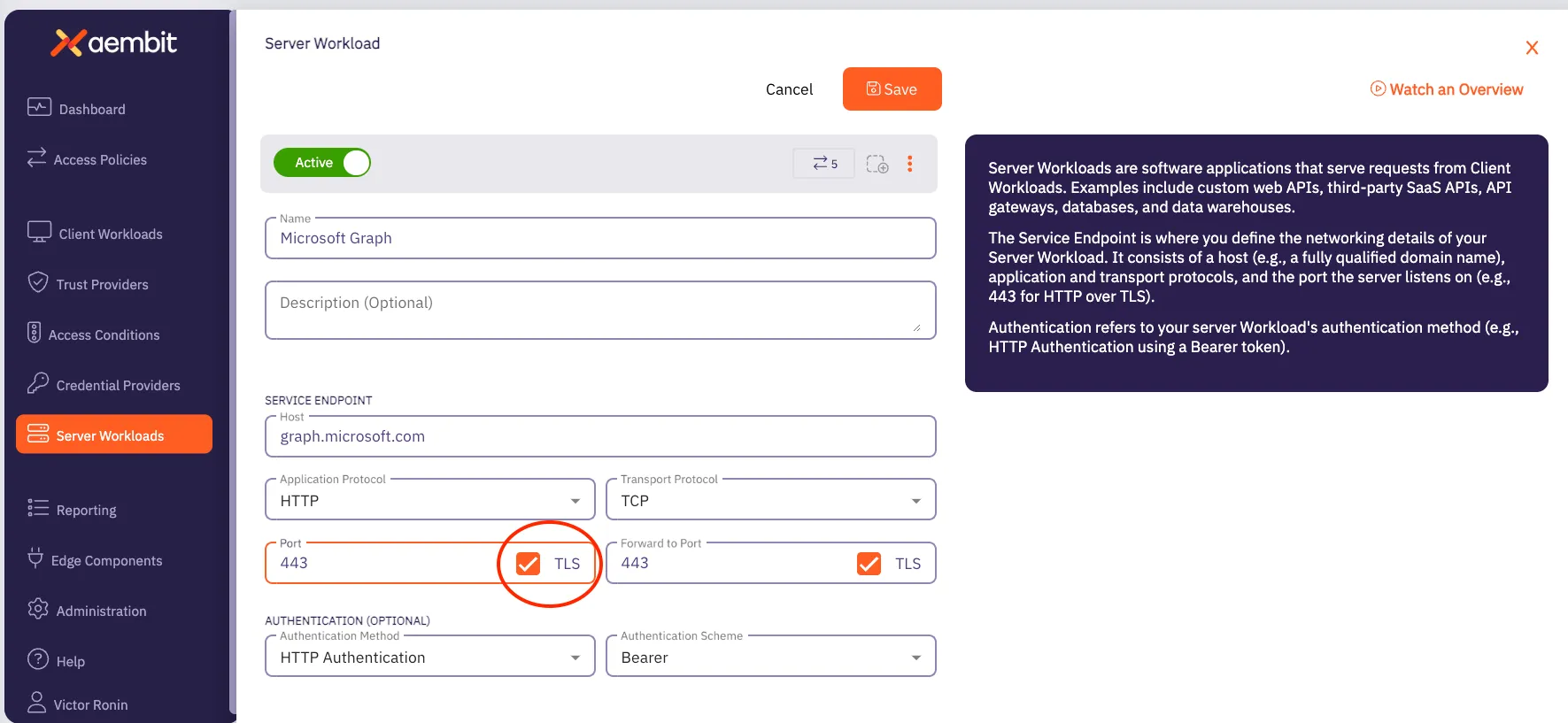

* **Server Workload**: references the tenant-specific Aembit API hostname

* **Access Policy**: links the entities above so that the configured Client Workload can access the Aembit API

## REST API Request Structure


For a server to process a REST API request correctly, the request must follow strict syntax and formatting rules: a prescribed URL structure and the appropriate HTTP method. Data formats can vary, but the core structure of a request stays the same from one call to the next.

The structure of a REST API call typically consists of the following elements:

* HTTP Method (REST Verb)
* REST API URL, made up of:
  * Base URL
  * Version
  * Resource
* Access Token, sent in the HTTP `Authorization` header

### HTTP Method (REST Verb)


The HTTP method (REST verb) you use in a request depends on the task you want to perform.

REST uses the following verbs:

* **GET** - retrieves information from one or more resources

* **POST** - creates a new resource

* **PUT** - updates an existing resource

* **PATCH** - partially updates an existing resource

* **DELETE** - deletes a resource
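The sketch below shows how the verb changes the shape of a request by building a `curl` command for each method. `GET` and `DELETE` send no body. `POST`, `PUT`, and `PATCH` send a JSON body read from a file with `curl -d @file`. The function name `build_request`, the file name `patch.json`, and the `<access-token>` placeholder are illustrative. The helper only prints the command.

```bash
#!/usr/bin/env bash
# build_request METHOD URL [BODY_FILE]
# Prints a curl command for the given HTTP method (hypothetical helper).
# POST, PUT, and PATCH attach a JSON body read from BODY_FILE.
build_request() {
  local method="$1" url="$2" body_file="${3:-}"
  local -a cmd
  cmd=(curl -X "$method" -H "Authorization: Bearer <access-token>")
  case "$method" in
    GET|DELETE)
      ;;  # these requests carry no body
    POST|PUT|PATCH)
      if [[ -z "$body_file" ]]; then
        echo "error: $method needs a JSON body file" >&2
        return 1
      fi
      cmd+=(-H "Content-Type: application/json" -d "@${body_file}")
      ;;
    *)
      echo "error: unsupported method: $method" >&2
      return 1
      ;;
  esac
  cmd+=("$url")
  echo "${cmd[*]}"
}

build_request GET "https://your-tenant.aembit.io/api/v1/access-conditions"
build_request PATCH "https://your-tenant.aembit.io/api/v1/access-conditions/{id}" patch.json
```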

### Base URL


The Base URL is the address of the API server to which requests are sent.

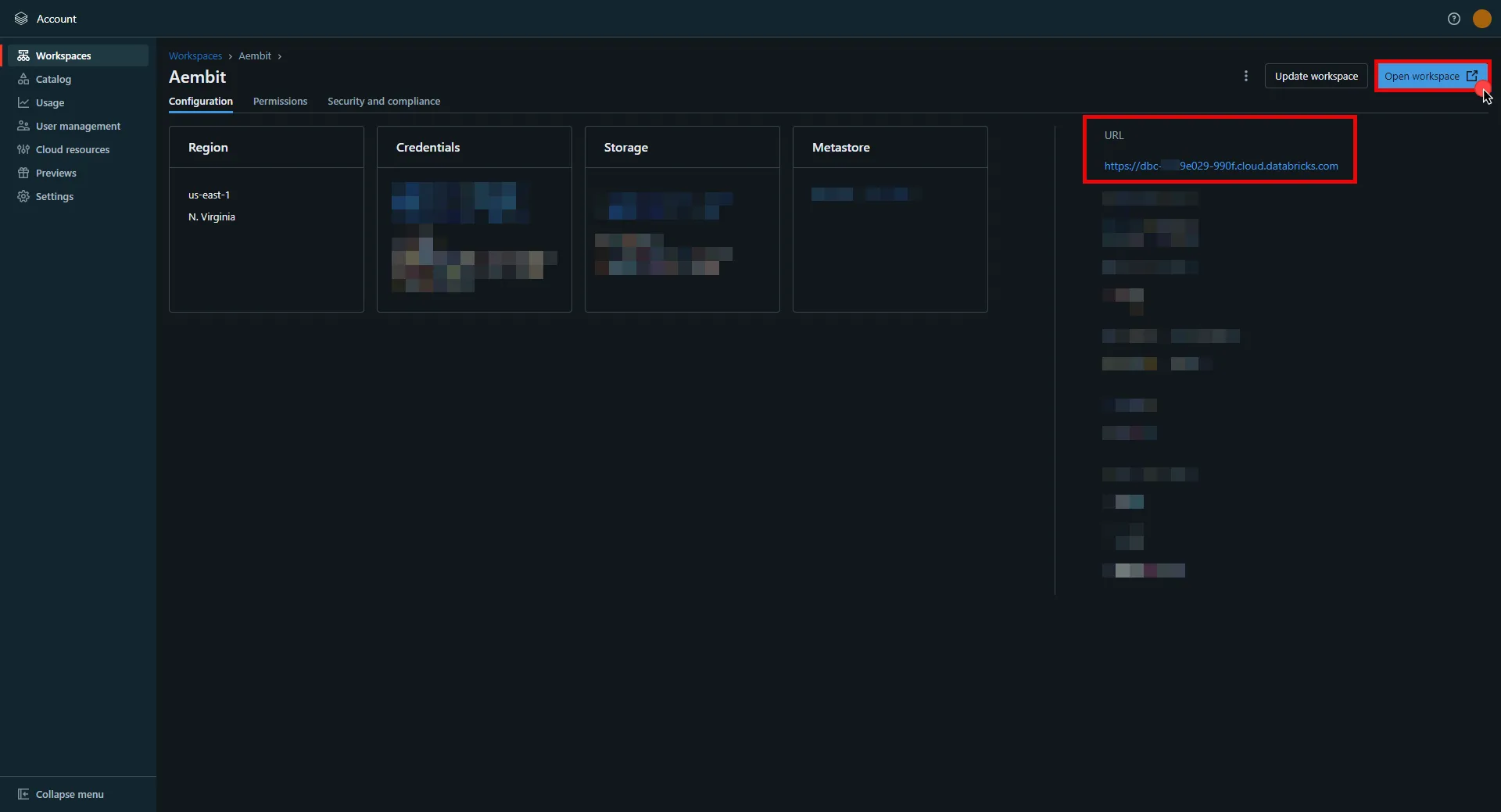

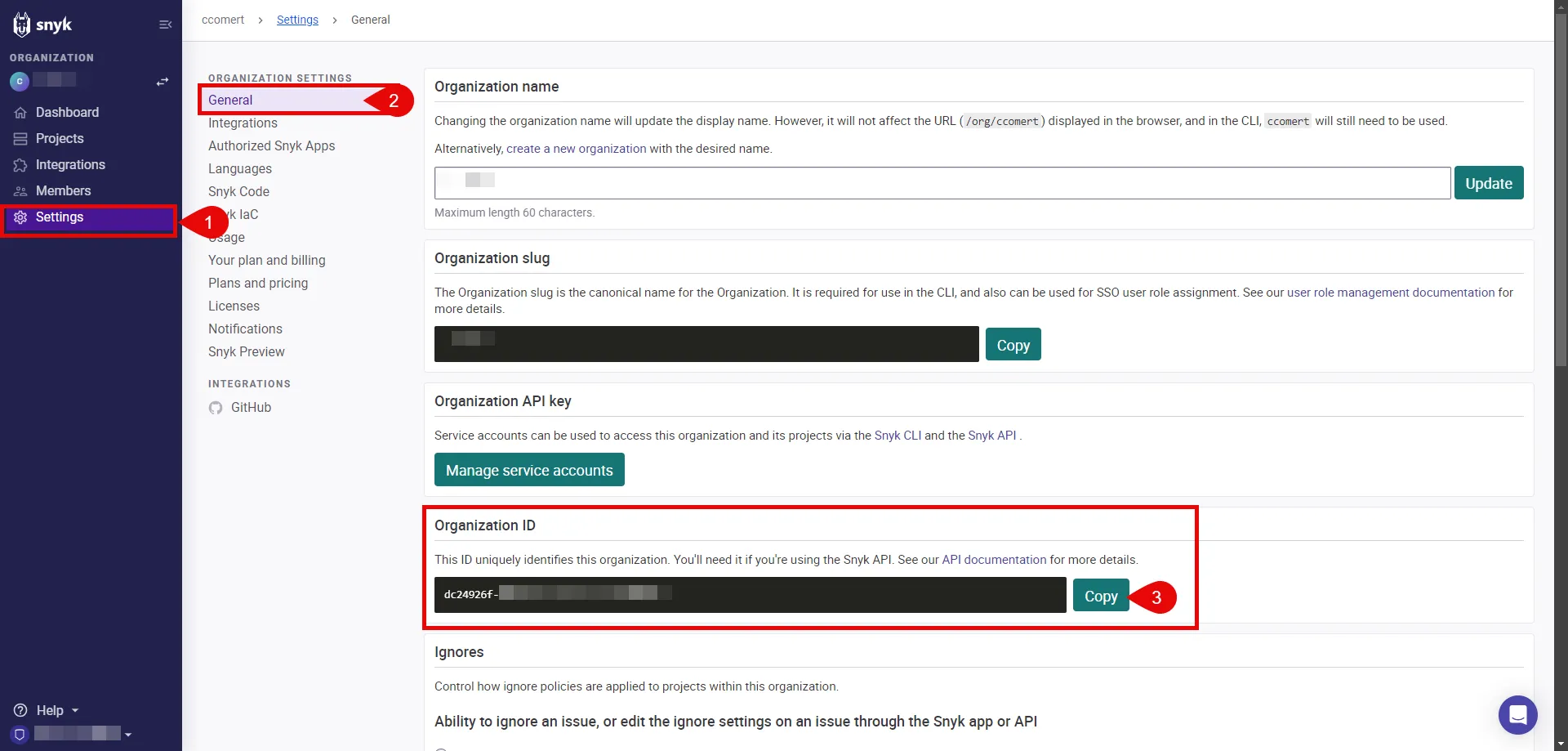

For the Aembit API, this is the **API Base Url** value from your Aembit Tenant. To retrieve it:

1. Log in to your Aembit Tenant.

2. On the main Dashboard page, hover over your name in the bottom-left corner and click **Profile**. The User Profile dialog window appears.

3. In the **API Access** section, copy the values in the **Access Token** and **API Base Url** fields. Your API client needs these values to make requests to the server.
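Before you store the **API Base Url** in a script, a small check can catch common copy-and-paste mistakes. This minimal sketch uses a hypothetical function name and assumes the value must start with `https://`. It also strips trailing slashes, so a path such as `/api/v1/users` can be appended without producing `//`.

```bash
#!/usr/bin/env bash
# normalize_base_url URL
# Rejects values that are not HTTPS URLs and removes trailing slashes.
normalize_base_url() {
  local url="$1"
  if [[ "$url" != https://* ]]; then
    echo "error: expected an https:// URL, got '$url'" >&2
    return 1
  fi
  # Strip every trailing slash.
  while [[ "$url" == */ ]]; do
    url="${url%/}"
  done
  echo "$url"
}

normalize_base_url "https://your-tenant.aembit.io/"
```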

### Versioning


The Aembit API is versioned. When an organization maintains multiple versions of an API (for example, v1, v2, and v3), each version can offer different features, so the version you call determines which features are available to you.

To make it easy to call the correct API version, the version number is included in the request path. For example, an Aembit API request path that includes the version number looks like this:

`/api/v1/users`

The `v1` in the path specifies that the request is for version 1 of the Aembit API, telling the server which version's resources you want to access.

### Resource


The `resource` value is the specific resource being called. For example, `server-workloads` and `client-workloads` are resources.
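A request URL combines these parts: the base URL, then the version and the resource, optionally followed by a resource ID. The sketch below is illustrative and uses a hypothetical function name. It follows the endpoint reference, which gives the Base URL as `https://{tenant}.aembit.io` and starts every path with `/api`. If the **API Base Url** you copied already ends in `/api`, remove that segment from the function.

```bash
#!/usr/bin/env bash
# aembit_url BASE_URL VERSION RESOURCE [ID]
# Composes BASE_URL/api/VERSION/RESOURCE, appending /ID when an ID is given.
aembit_url() {
  local base="${1%/}" version="$2" resource="$3" id="${4:-}"
  local url="${base}/api/${version}/${resource}"
  if [[ -n "$id" ]]; then
    url+="/${id}"
  fi
  echo "$url"
}

aembit_url "https://your-tenant.aembit.io" v1 server-workloads
# prints https://your-tenant.aembit.io/api/v1/server-workloads
```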

### Access Token


The Access Token identifies the requestor to the Aembit API and lets the API verify which permissions the requestor has been granted. Send it as a Bearer token in the HTTP `Authorization` header of each request.

Note

Aembit requires a Bearer access token in the `Authorization` header of every API call and does not support long-lived credentials.
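Here is a minimal sketch of building this header in a script, using a hypothetical function name and a hypothetical `AEMBIT_TOKEN` variable. Failing early on an empty or malformed token avoids sending a bare `Authorization: Bearer` header, which the API would most likely reject with `401 Not Authenticated`.

```bash
#!/usr/bin/env bash
# auth_header TOKEN
# Prints the Authorization header for a Bearer token. Fails if the token
# is empty or contains whitespace.
auth_header() {
  local token="$1"
  if [[ -z "$token" || "$token" =~ [[:space:]] ]]; then
    echo "error: missing or malformed access token" >&2
    return 1
  fi
  echo "Authorization: Bearer ${token}"
}

# Usage sketch (AEMBIT_TOKEN is a hypothetical variable):
# header="$(auth_header "$AEMBIT_TOKEN")" || exit 1
# curl -H "$header" "https://your-tenant.aembit.io/api/v1/server-workloads"
```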

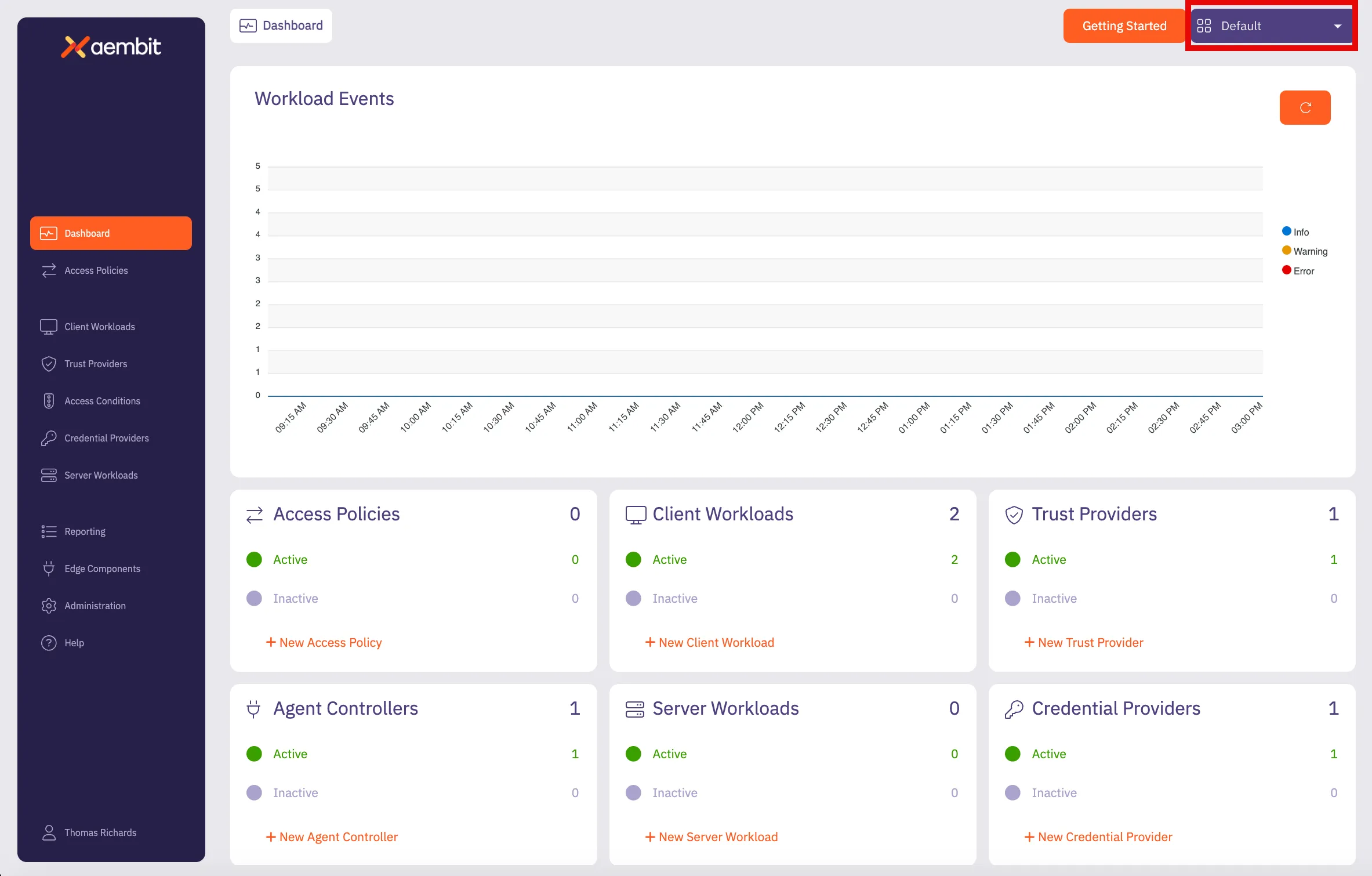

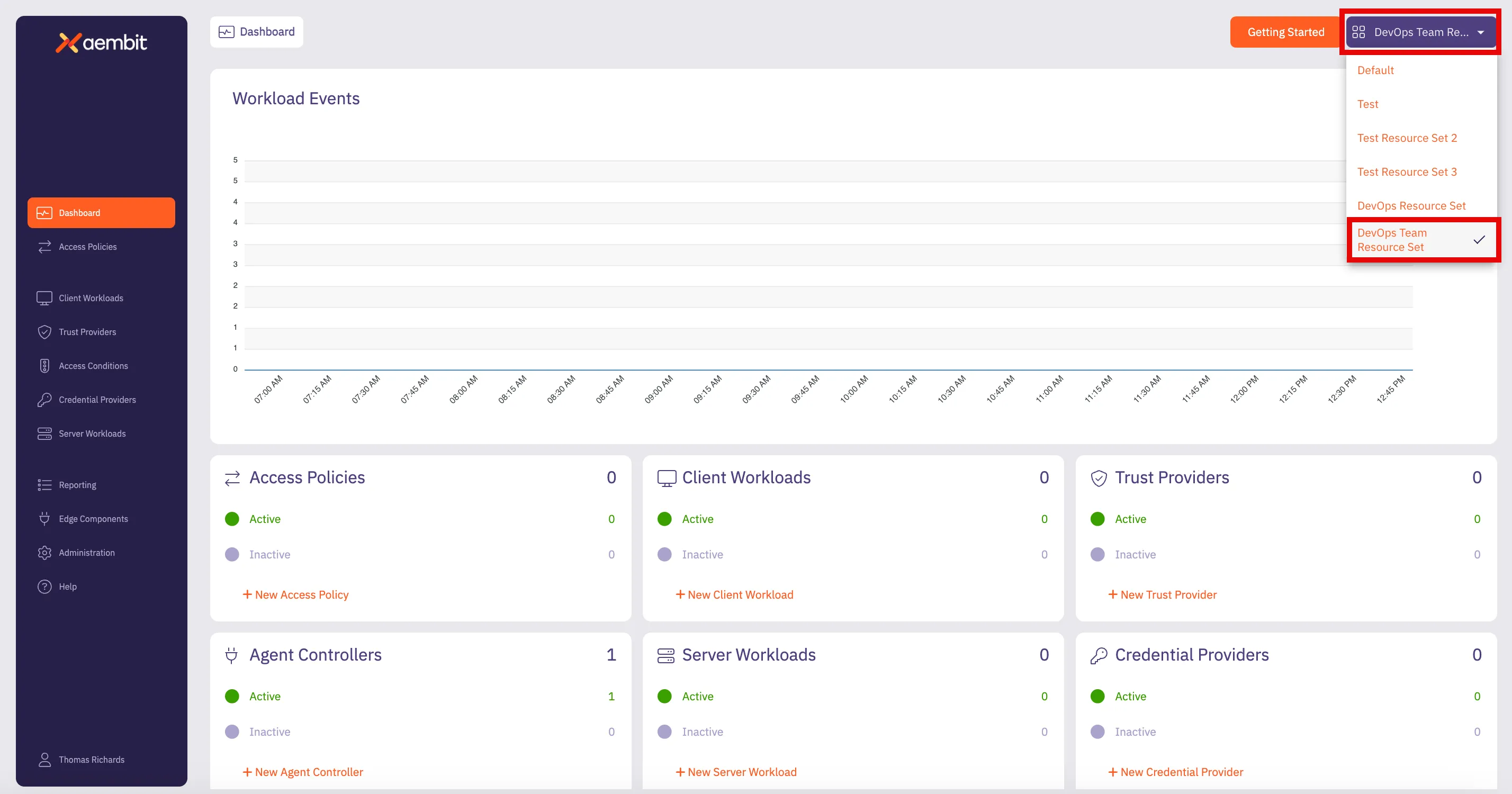

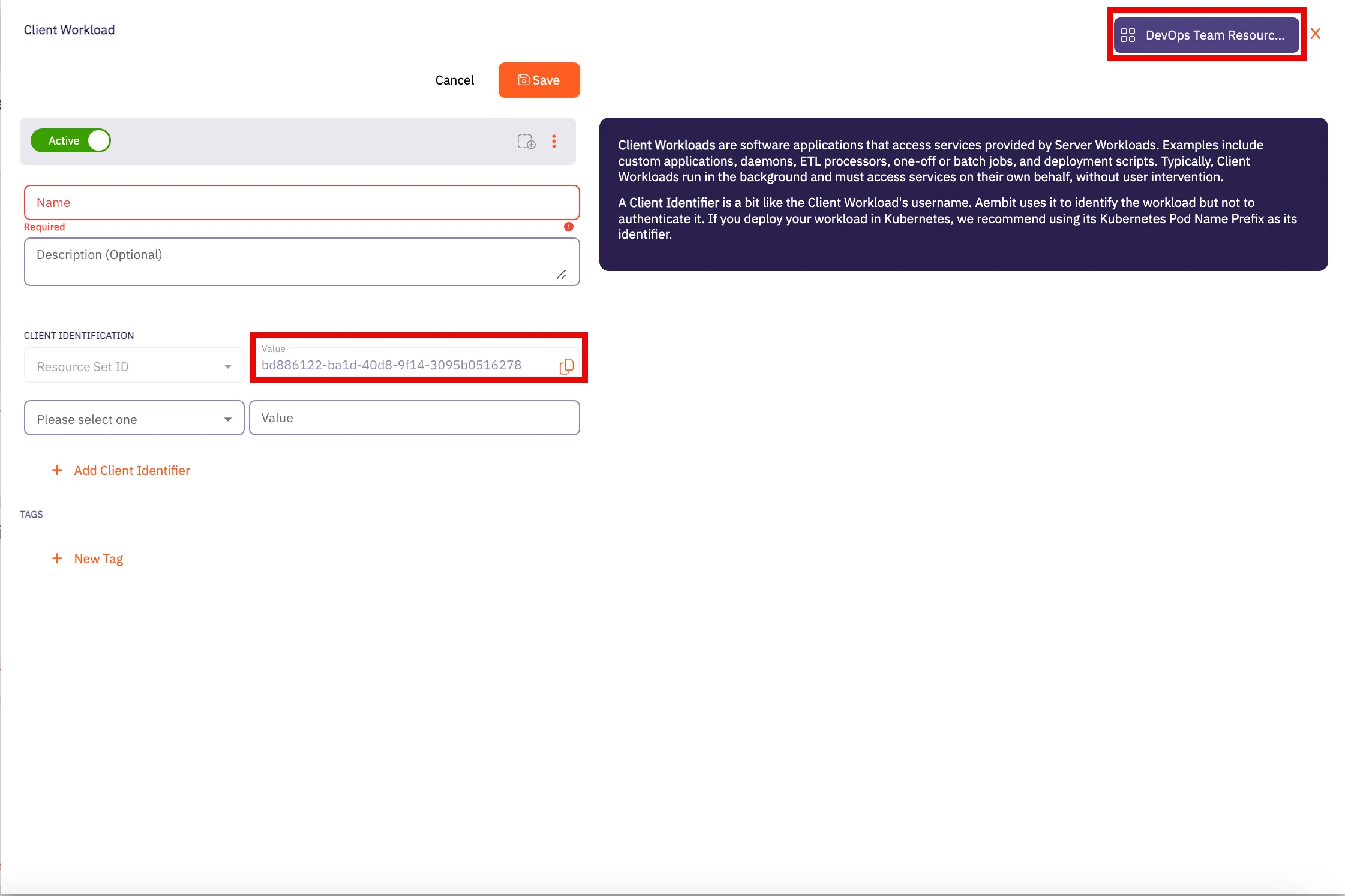

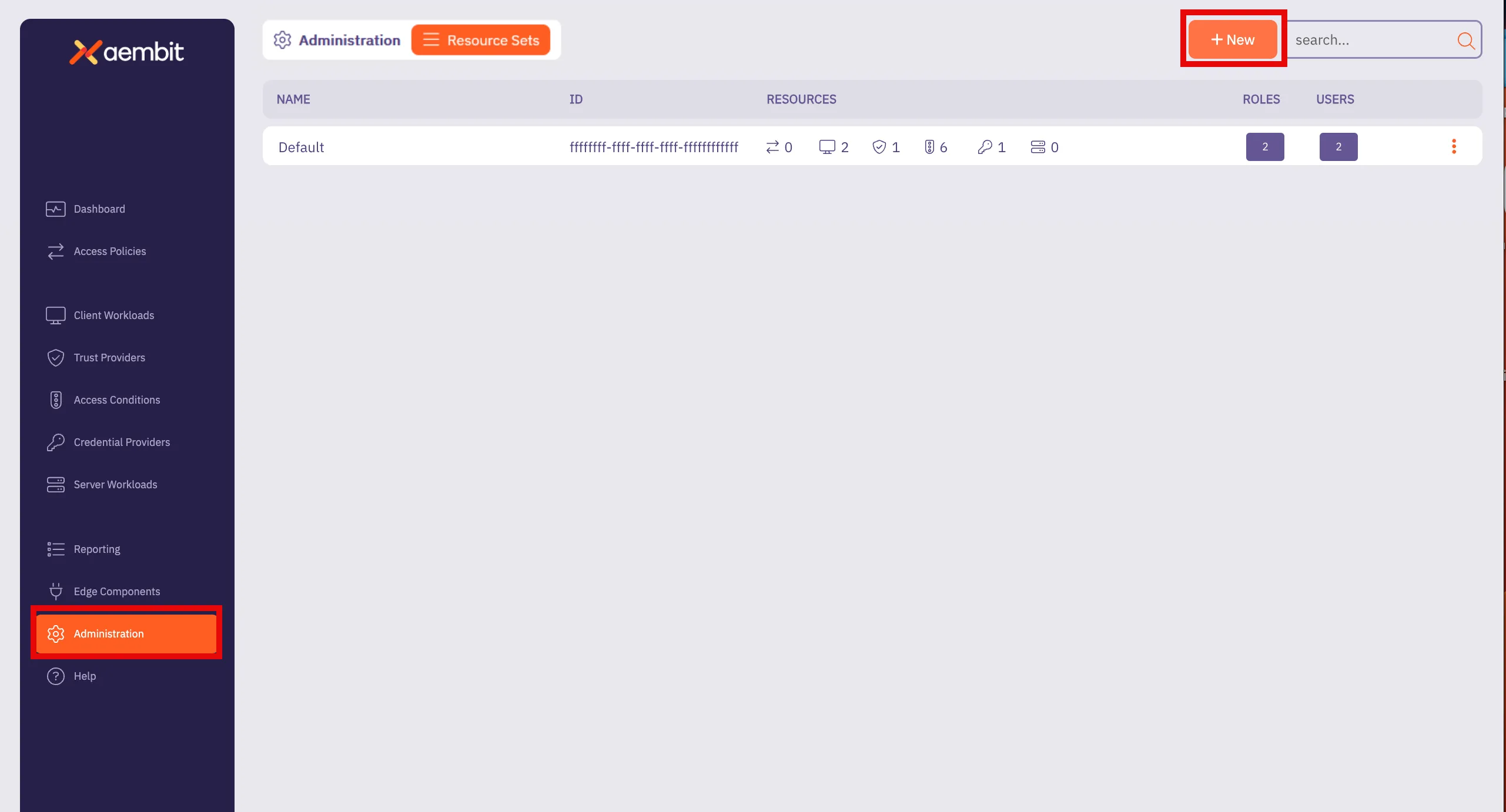

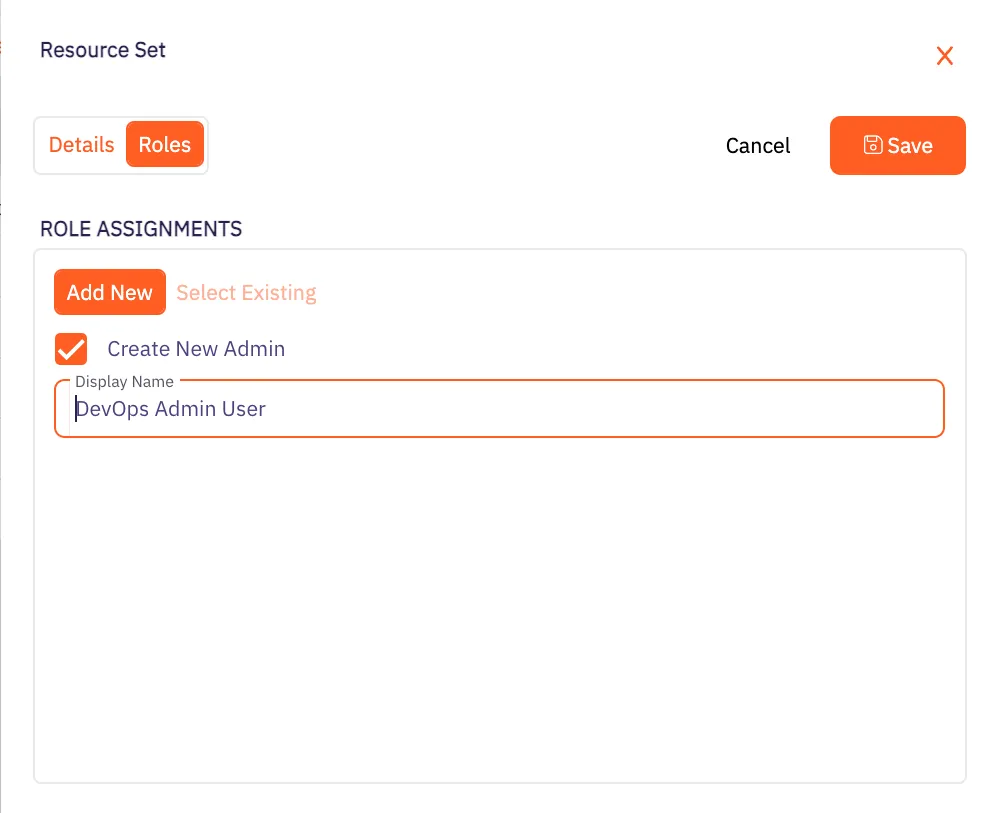

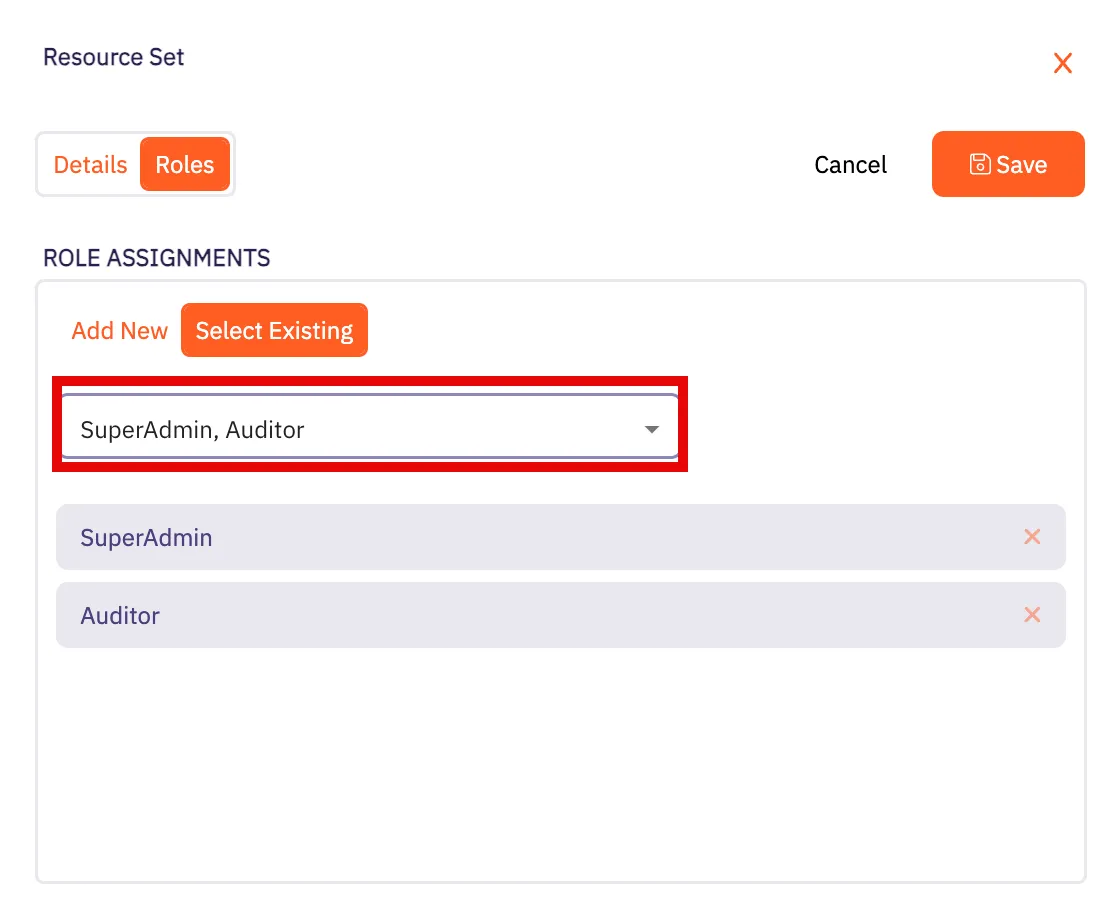

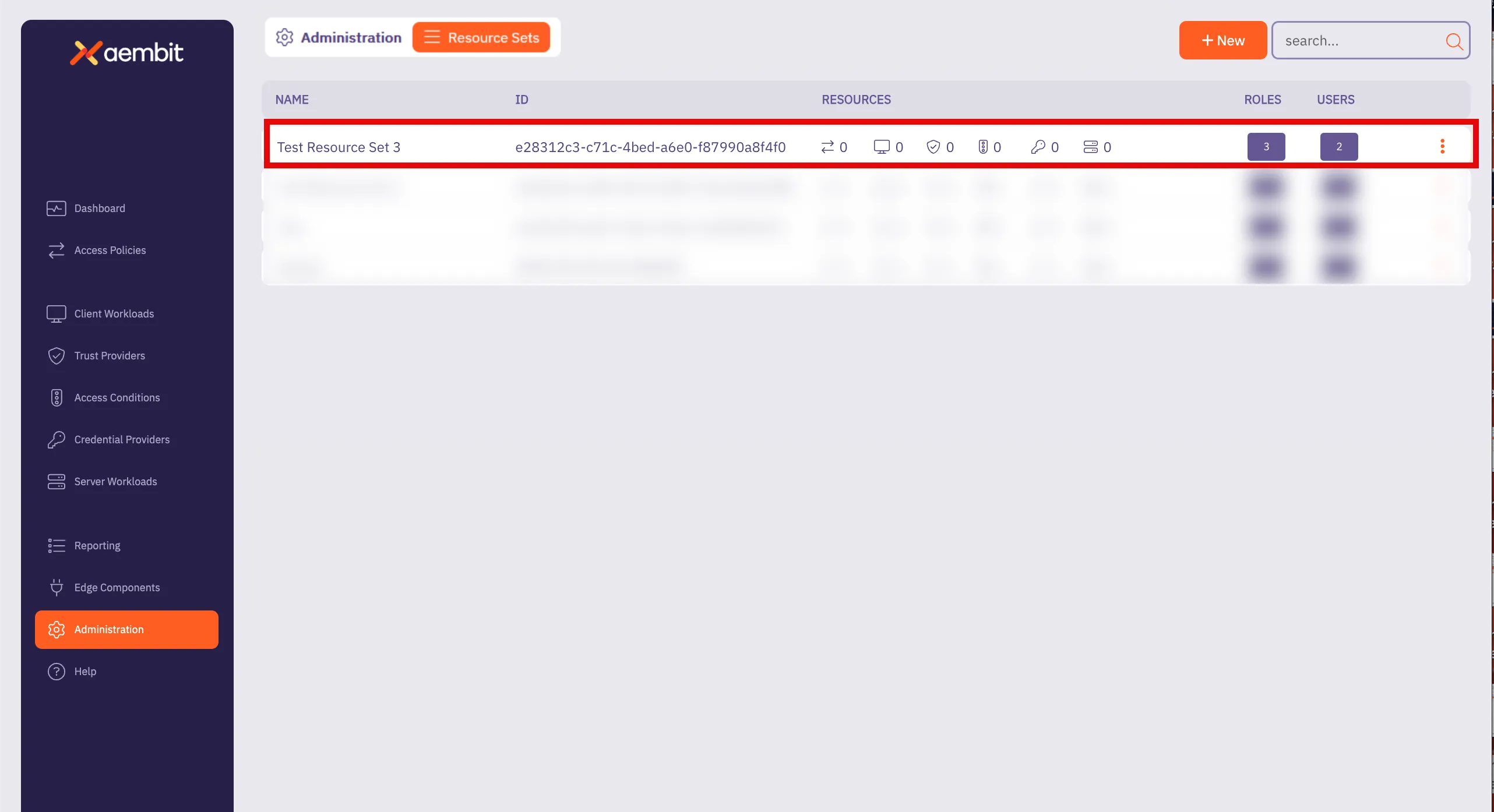

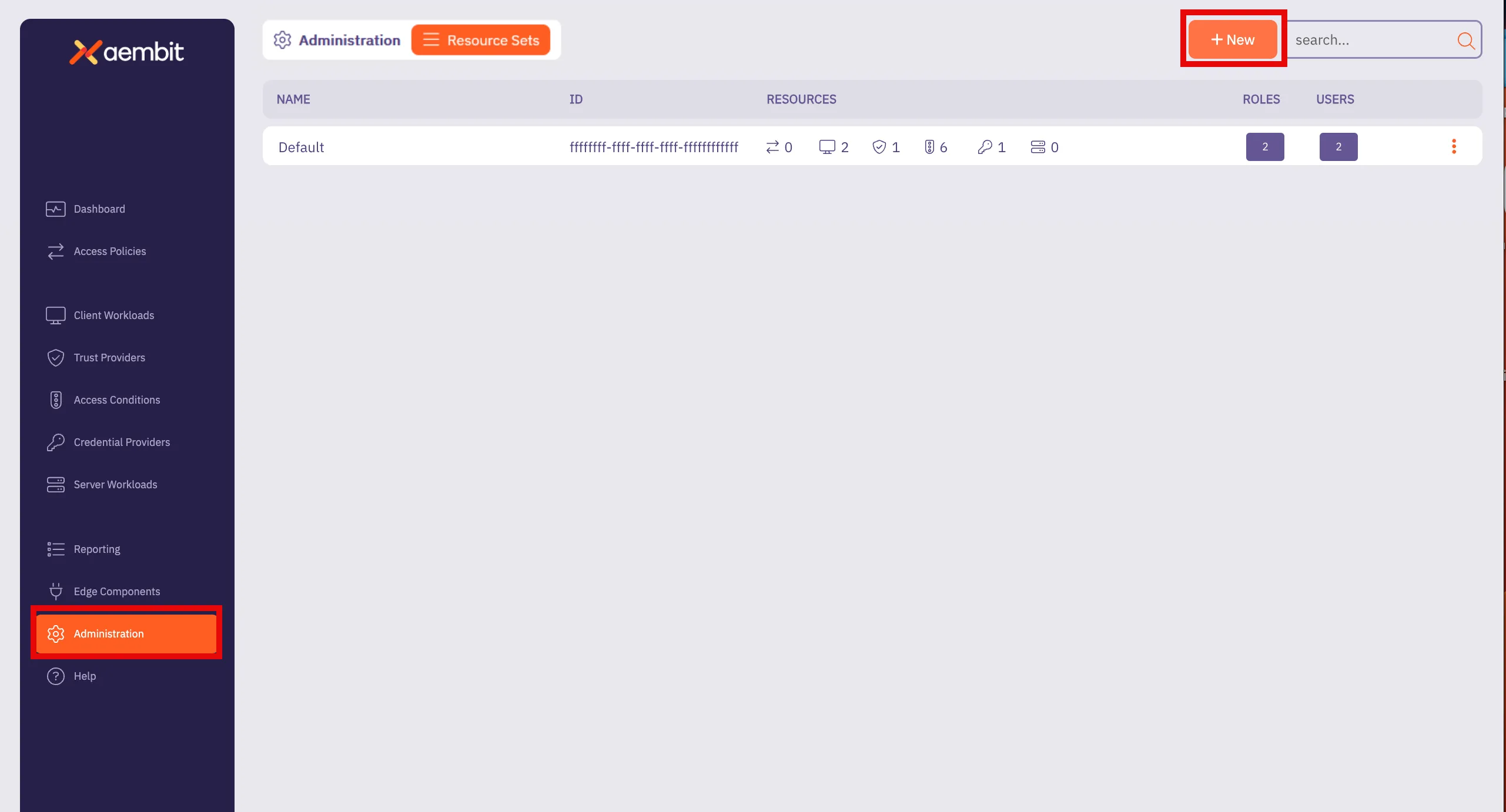

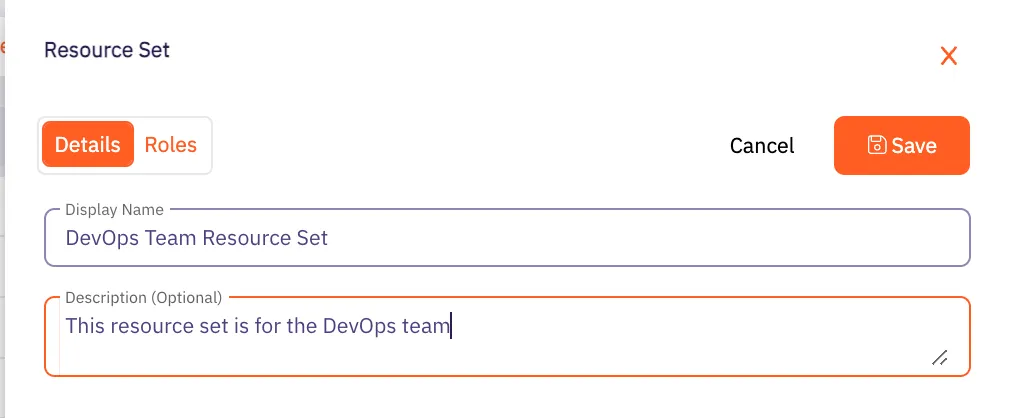

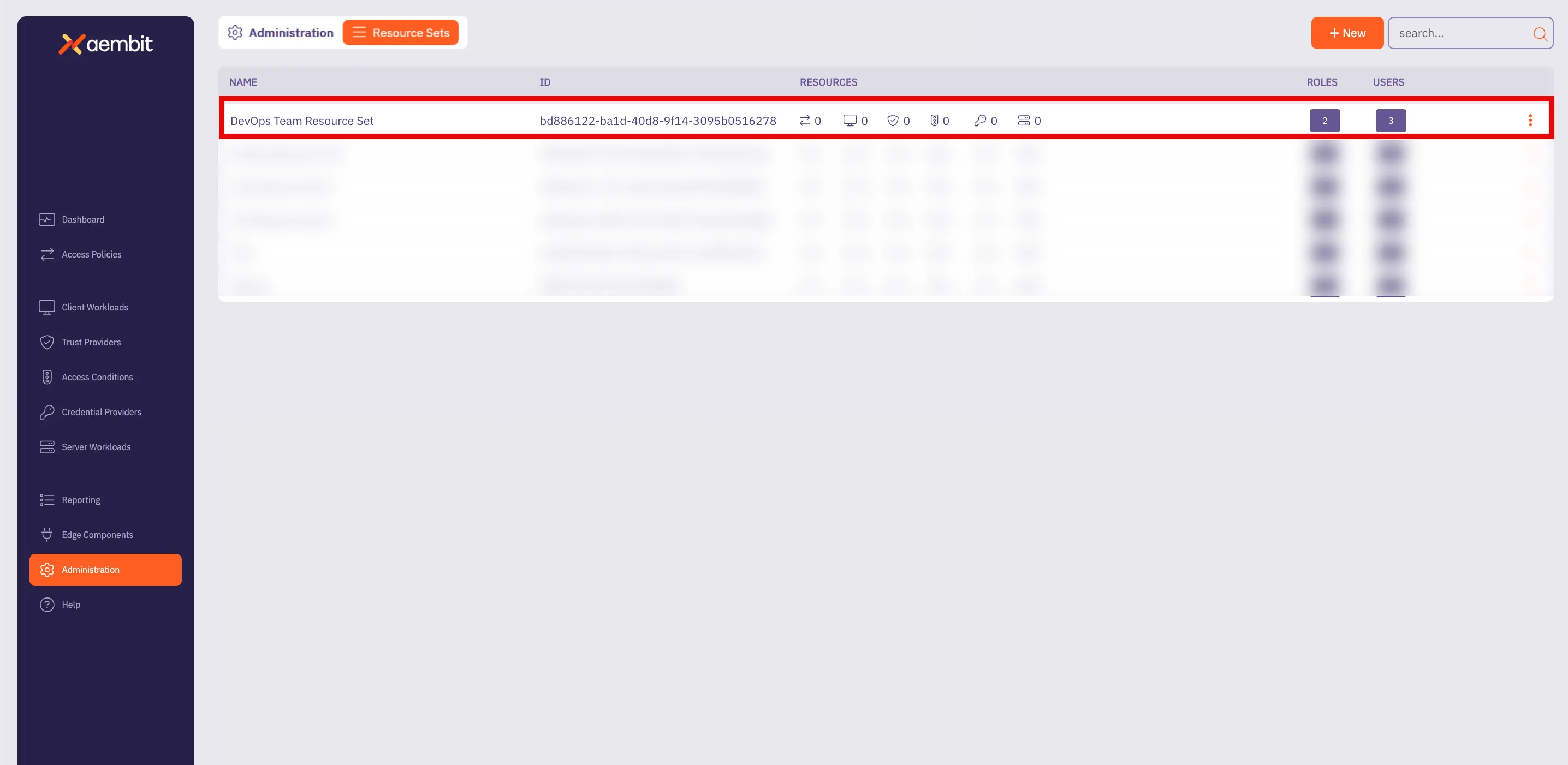

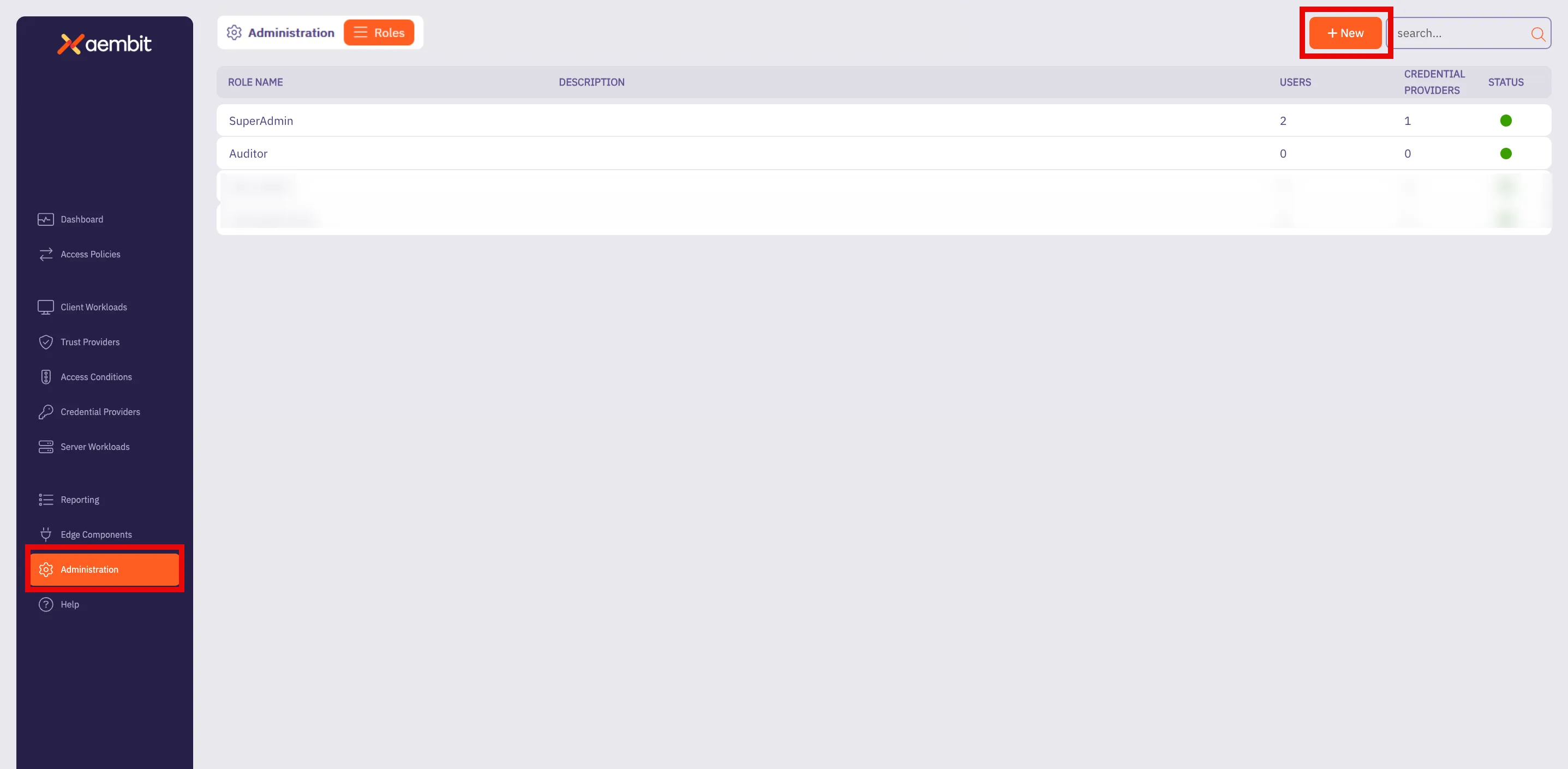

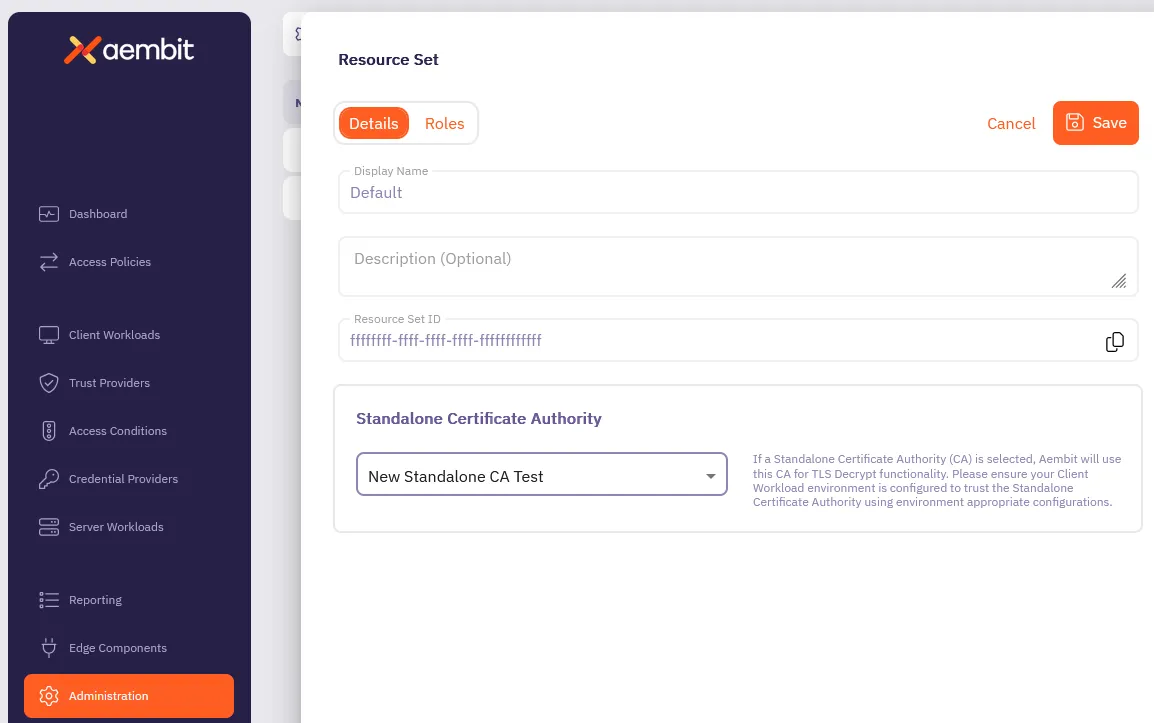

### Resource Set (optional)


Aembit supports an optional feature that lets you segment your tenant into multiple isolated Resource Sets that do not interact with each other. To manage access entities in a custom Resource Set, include the additional `X-Aembit-ResourceSet` HTTP header in your request.

Note

If the `X-Aembit-ResourceSet` HTTP header is not specified, then the Aembit API will operate against the Default Resource Set (identified as `ffffffff-ffff-ffff-ffff-ffffffffffff`).
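The sketch below adds the header only when you supply a Resource Set ID. With no ID, the API falls back to the Default Resource Set. The function name is hypothetical, and the UUID format check is an assumption based on the Default Resource Set ID shown above. The function prints the `curl` arguments one per line, so you can load them into a Bash array with `mapfile` (Bash 4 or later).

```bash
#!/usr/bin/env bash
# resource_set_args [RESOURCE_SET_ID]
# Prints the curl arguments that select a Resource Set, one per line.
# Prints nothing when no ID is given, so the Default Resource Set applies.
resource_set_args() {
  local id="${1:-}"
  local uuid_re='^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$'
  if [[ -z "$id" ]]; then
    return 0
  fi
  # Assumption: Resource Set IDs are UUIDs, like the default ID.
  if [[ ! "$id" =~ $uuid_re ]]; then
    echo "error: '$id' is not a UUID" >&2
    return 1
  fi
  printf '%s\n' -H "X-Aembit-ResourceSet: ${id}"
}

# Usage sketch (RESOURCE_SET_ID is a hypothetical variable):
# mapfile -t rs_args < <(resource_set_args "$RESOURCE_SET_ID")
# curl -H "Authorization: Bearer <access-token>" "${rs_args[@]}" \
#   "https://your-tenant.aembit.io/api/v1/server-workloads"
```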

### REST API Request Example


A typical Aembit API request should look similar to the `curl` examples shown below:

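```bash
# Operates on the Default Resource Set
curl -X GET -L 'https://tenant.aembit.io/api/v1/server-workloads' -H 'Authorization: Bearer <access-token>'

# Selects a Resource Set explicitly (here, the Default Resource Set ID)
curl -X GET -L 'https://tenant.aembit.io/api/v1/server-workloads' -H 'Authorization: Bearer <access-token>' -H 'X-Aembit-ResourceSet: ffffffff-ffff-ffff-ffff-ffffffffffff'
```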

Where:

* `GET` is the type of request being made

* `https://tenant.aembit.io/api` is the Base URL

* `v1` is the API version

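* `server-workloads` is the resource being called

* the `Authorization: Bearer` header supplies your access token

* the `X-Aembit-ResourceSet` header (second example only) selects the Resource Set to operate on

* `-L` tells `curl` to follow redirects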

## REST API Response


Every REST API response includes an HTTP status code, and most responses also include a response body. Successful responses typically return structured data in a body with `Content-Type: application/json`.

### Status Codes


When the Aembit REST API processes your request, it returns an HTTP status code. The code you receive depends on whether your request succeeded.

The Aembit API uses standard HTTP status codes to indicate whether a request was processed successfully. Generally, you will see three classes of status codes:

* **2xx codes** - If your request is successful, you receive a 2xx response code (for example, 200 or 201).

* **4xx codes** - If your request fails because of a client error in the request, you receive a 4xx error response code (for example, 401, 403, or 404).

* **5xx codes** - If your request fails because of a server error, you receive a 5xx error response code (for example, 500, 502, or 503).

Note

For a full list of HTTP Status Codes, please see the [REST API Tutorial Status Codes](https://www.restapitutorial.com/httpstatuscodes) page.
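Scripts that call the API usually need to branch on these classes. The sketch below maps a status code to the three classes described above, using a hypothetical function name. The commented `curl` invocation shows one way to capture the code with `-w '%{http_code}'` while saving the response body to a file with `-o`.

```bash
#!/usr/bin/env bash
# status_class CODE
# Maps an HTTP status code to the classes described above.
status_class() {
  case "$1" in
    2[0-9][0-9]) echo "success" ;;
    4[0-9][0-9]) echo "client error" ;;
    5[0-9][0-9]) echo "server error" ;;
    *)           echo "other" ;;
  esac
}

# Example (needs a real tenant and token):
# code=$(curl -sS -o response.json -w '%{http_code}' \
#   -H "Authorization: Bearer <access-token>" \
#   "https://your-tenant.aembit.io/api/v1/server-workloads")
# echo "$code: $(status_class "$code")"
```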

## OpenAPI YAML


Details about each Aembit API endpoint are available in the sections on the left, grouped by the associated Aembit entity or feature. If you want the original OpenAPI document, for example to import it into a code generator, download it using the link below.

[Download Aembit API OpenAPI Document](/astro/cloud.yaml)

# API Reference

> Aembit Cloud API Reference

# Aembit.API - API Endpoints

> API endpoints reference for Aembit.API


**Version:** v1

**Base URL:** `https://{tenant}.aembit.io`

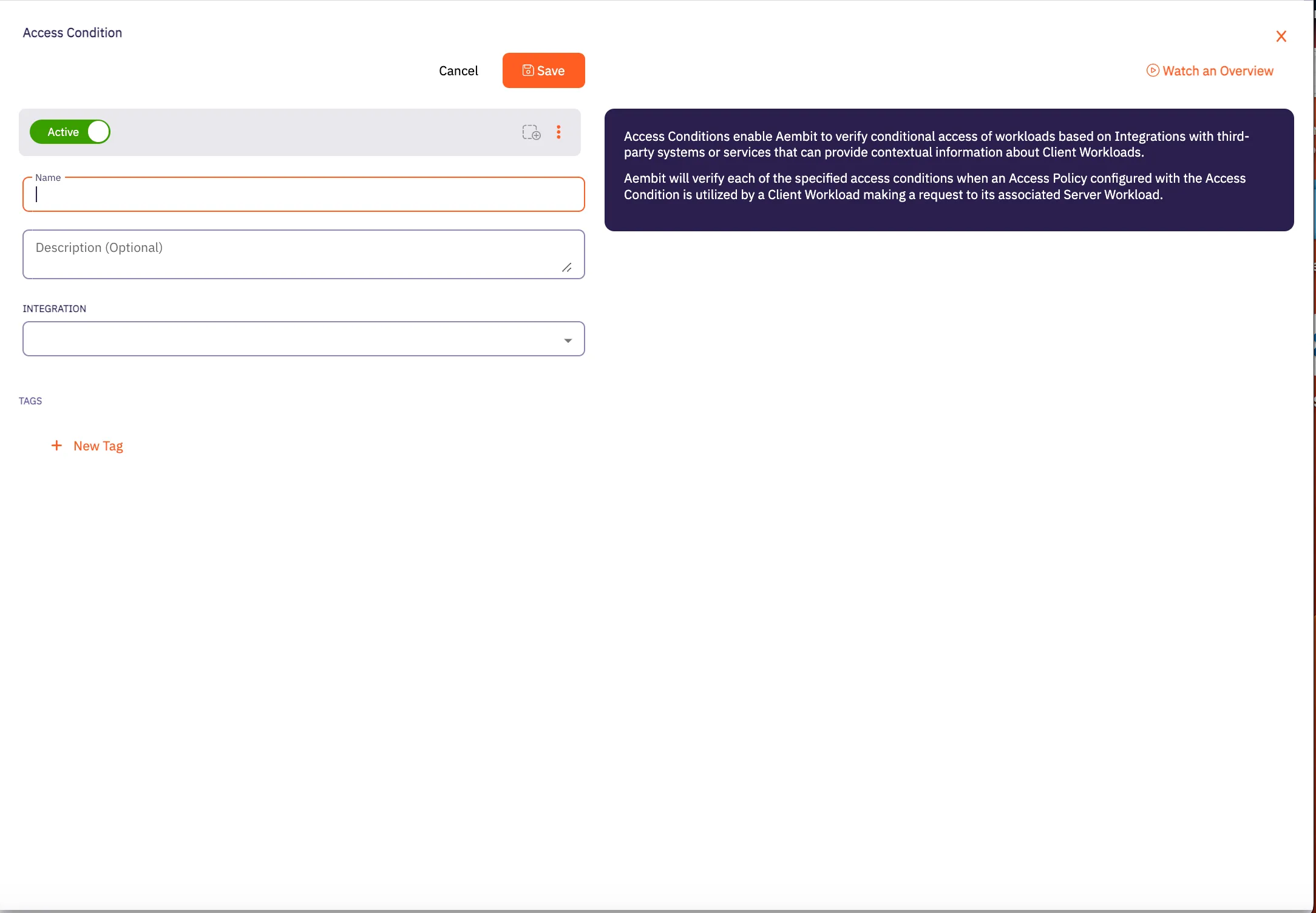

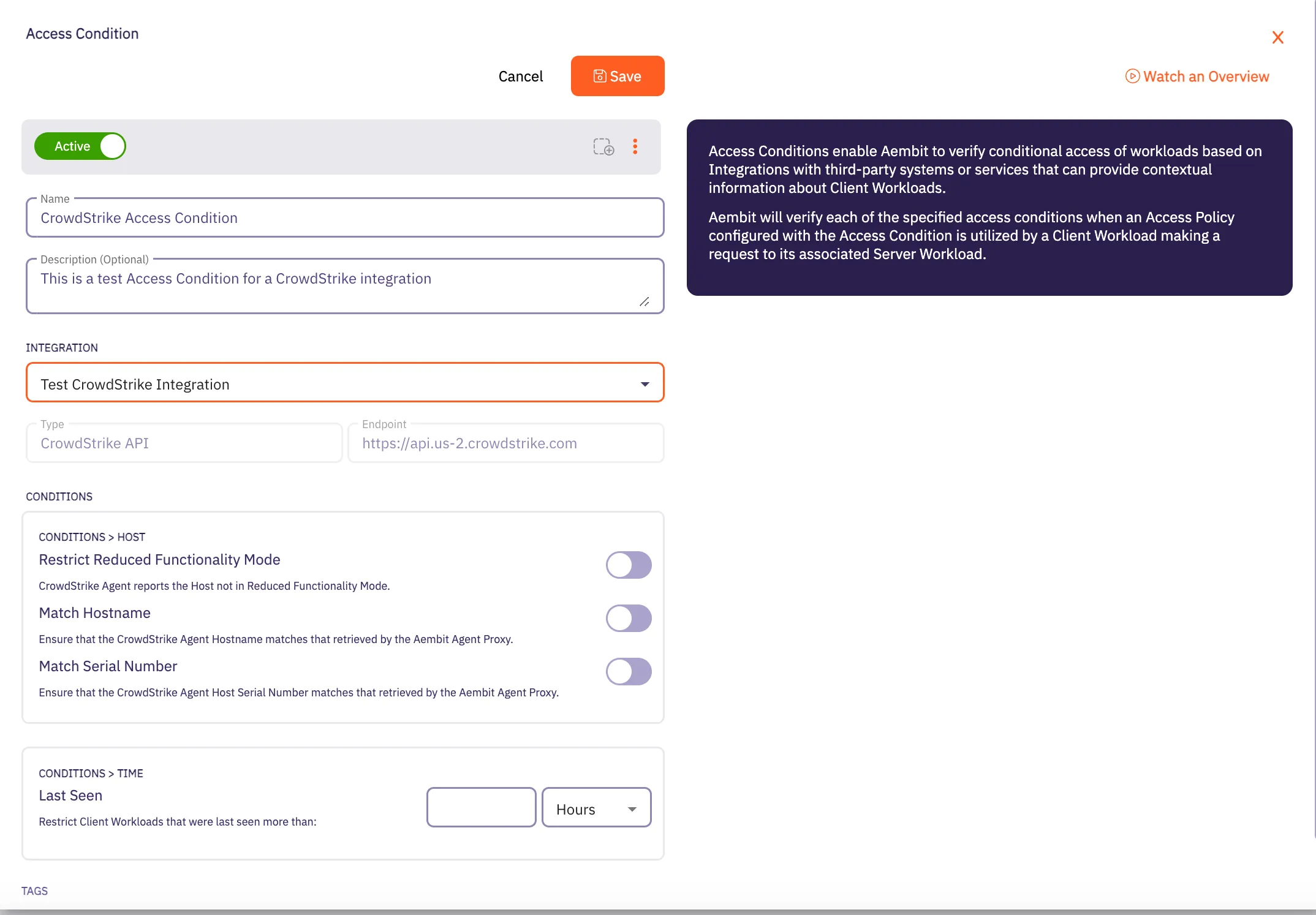

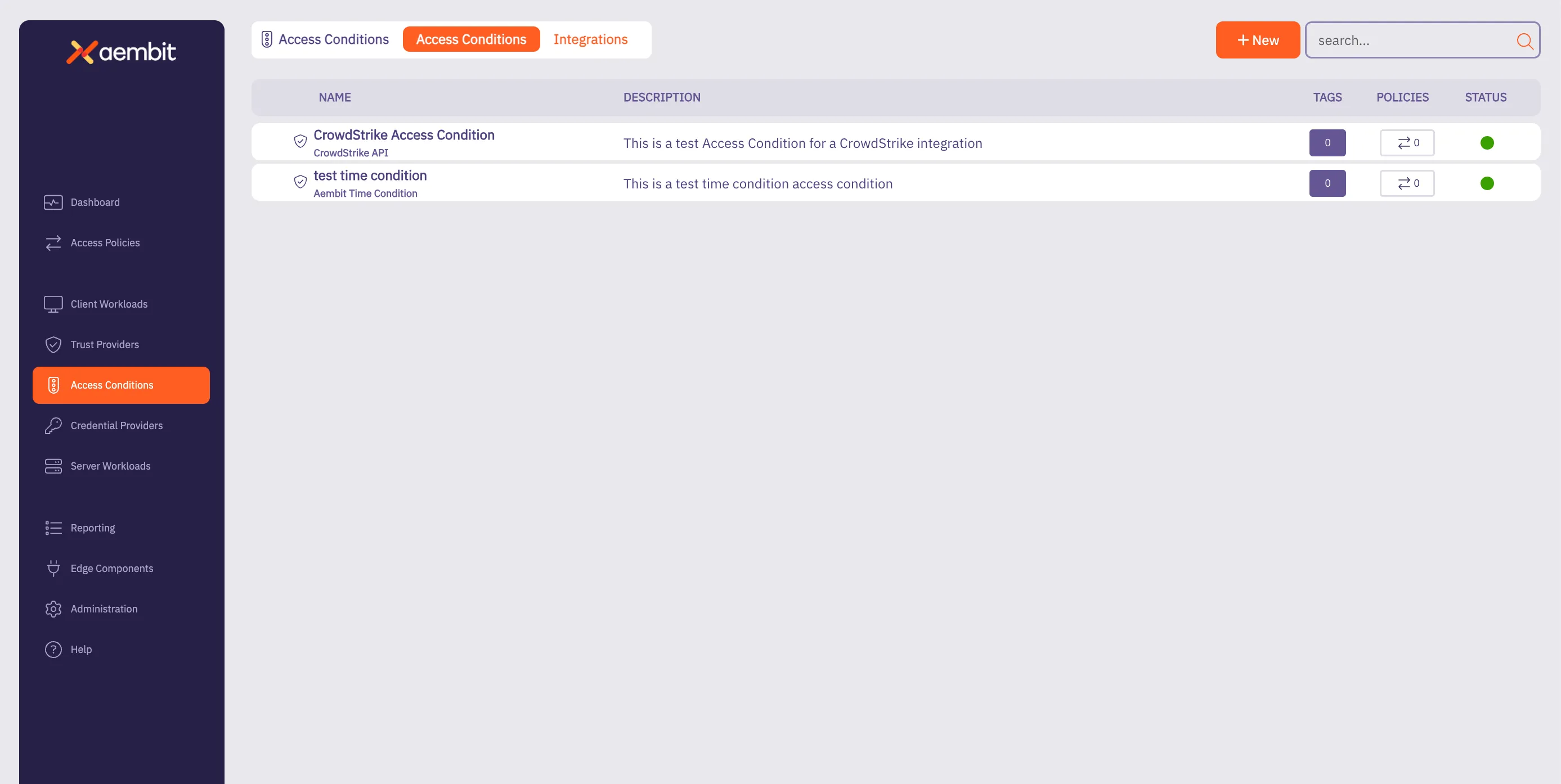

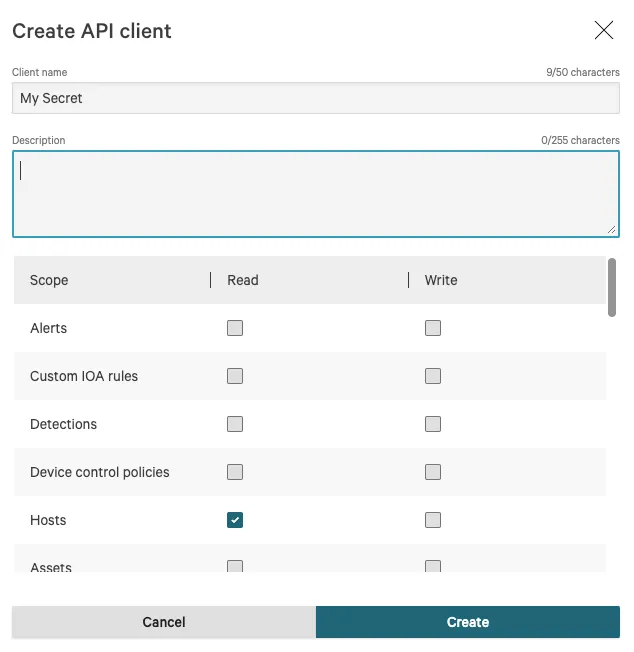

## Access Condition


### GET /api/v1/access-conditions


**Summary:** Get a page of Access Conditions

**Description:** Retrieve a page of Aembit Access Conditions.

**Operation ID:** get-access-conditions

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used
* **page** (optional)
* **per-page** (optional)
* **filter** (optional)
* **order** (optional)
* **group-by** (optional)

**Responses:**

* **200**: Page of Access Conditions (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v1/access-conditions"
```
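This reference does not state where `page` and `per-page` are sent. The sketch below assumes they are query-string parameters. It uses `curl -G`, which appends `--data-urlencode` values to the URL as a URL-encoded query string, so it would also handle `filter` expressions. The function name and values are illustrative, and the helper only prints the command.

```bash
#!/usr/bin/env bash
# list_page URL PAGE PER_PAGE
# Prints a curl command that requests one page of a collection.
# Assumption: page and per-page are query-string parameters.
list_page() {
  local url="$1" page="$2" per_page="$3"
  if [[ ! "$page" =~ ^[0-9]+$ || ! "$per_page" =~ ^[0-9]+$ ]]; then
    echo "error: page and per-page must be non-negative integers" >&2
    return 1
  fi
  local -a cmd
  cmd=(curl -G
       -H "Authorization: Bearer <access-token>"
       --data-urlencode "page=${page}"
       --data-urlencode "per-page=${per_page}"
       "$url")
  echo "${cmd[*]}"
}

list_page "https://your-tenant.aembit.io/api/v1/access-conditions" 2 25
```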

### POST /api/v1/access-conditions


**Summary:** Create an Access Condition

**Description:** Create an Aembit Access Condition which can then be associated with an Access Policy.

**Operation ID:** post-access-condition

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Request Body:** AccessConditionDTO (`application/json`)

**Responses:**

* **201**: Successfully created Access Condition (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/access-conditions"
```

### PUT /api/v1/access-conditions


**Summary:** Update a single Access Condition

**Description:** Update a specific Access Condition identified by its ID.

**Operation ID:** put-access-condition

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Request Body:** AccessConditionDTO (`application/json`)

**Responses:**

* **200**: Successfully updated Access Condition (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PUT \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/access-conditions"
```

### GET /api/v1/access-conditions/{id}

**Summary:** Get the identified Access Condition

**Description:** Get the Access Condition identified by its ID.

**Operation ID:** get-access-condition

**Parameters:**

* **id** (path, required): ID of the Access Condition
* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Responses:**

* **200**: Access Condition (`application/json`)
* **204**: Access Condition Not Found
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v1/access-conditions/{id}"
```
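This endpoint reports a missing Access Condition with `204` (Access Condition Not Found) rather than `404`, so a script should not treat every 2xx code as "found". The sketch below, with a hypothetical function name, interprets the status code for this endpoint. The commented `curl` lines show one way to capture the code.

```bash
#!/usr/bin/env bash
# lookup_result CODE
# Interprets the status code from GET /api/v1/access-conditions/{id}.
# Per the reference above, 200 returns the object and 204 means not found.
lookup_result() {
  case "$1" in
    200) echo "found" ;;
    204) echo "not found" ;;
    401) echo "not authenticated" ;;
    *)   echo "error ($1)" ;;
  esac
}

# Example (needs a real tenant, token, and Access Condition ID):
# code=$(curl -sS -o condition.json -w '%{http_code}' \
#   -H "Authorization: Bearer <access-token>" \
#   "https://your-tenant.aembit.io/api/v1/access-conditions/<id>")
# lookup_result "$code"
```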

### DELETE /api/v1/access-conditions/{id}

**Summary:** Delete a single Access Condition

**Description:** Delete a specific Access Condition identified by its ID.

**Operation ID:** delete-access-condition

**Parameters:**

* **id** (path, required): ID of the Access Condition
* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Responses:**

* **204**: Deleted the Access Condition
* **400**: Bad Request
* **401**: Not Authenticated
* **404**: Not Found
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X DELETE \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v1/access-conditions/{id}"
```

### PATCH /api/v1/access-conditions/{id}

**Summary:** Patch a single Access Condition

**Description:** Patch a specific Access Condition identified by its ID.

**Operation ID:** patch-access-condition

**Parameters:**

* **id** (path, required): ID of the Access Condition
* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Request Body:** AccessConditionPatchDTO (`application/json`)

**Responses:**

* **200**: Successfully updated Access Condition (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PATCH \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/access-conditions/{id}"
```

## Access Policy (Deprecated)


### GET /api/v1/access-policies/{id}

**Summary:** Get the identified Access Policy

**Description:** Get the Access Policy identified by its ID.

**Operation ID:** get-access-policy

**Parameters:**

* **id** (path, required): ID of the Access Policy
* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Responses:**

* **200**: Access Policy (`application/json`)
* **204**: Access Policy Not Found
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v1/access-policies/{id}"
```

### DELETE /api/v1/access-policies/{id}

**Summary:** Delete an Access Policy

**Description:** Delete an Access Policy.

**Operation ID:** delete-access-policy

**Parameters:**

* **id** (path, required): ID of the Access Policy
* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Responses:**

* **204**: Deleted the Access Policy
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X DELETE \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v1/access-policies/{id}"
```

### PATCH /api/v1/access-policies/{id}

**Summary:** Patch an Access Policy

**Description:** Patch an Access Policy.

**Operation ID:** patch-access-policy

**Parameters:**

* **id** (path, required): ID of the Access Policy
* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Request Body:** PolicyPatchDTO (`application/json`)

**Responses:**

* **200**: Patched Access Policy (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PATCH \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/access-policies/{id}"
```

### GET /api/v1/access-policies/getByWorkloadIds/{clientWorkloadId}/{serverWorkloadId}

**Summary:** Get the identified Access Policy

**Description:** Get the Access Policy identified by a Client and Server Workload.

**Operation ID:** get-access-policy-by-workloads

**Parameters:**

* **clientWorkloadId** (path, required): ID of the Client Workload
* **serverWorkloadId** (path, required): ID of the Server Workload
* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Responses:**

* **200**: Access Policy (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v1/access-policies/getByWorkloadIds/{clientWorkloadId}/{serverWorkloadId}"
```

### GET /api/v1/access-policies


**Summary:** Get a page of Access Policies

**Description:** Retrieve a page of Access Policies.

**Operation ID:** get-access-policies

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used
* **page** (optional)
* **per-page** (optional)
* **filter** (optional)
* **order** (optional)
* **group-by** (optional)

**Responses:**

* **200**: Page of Access Policies (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v1/access-policies"
```

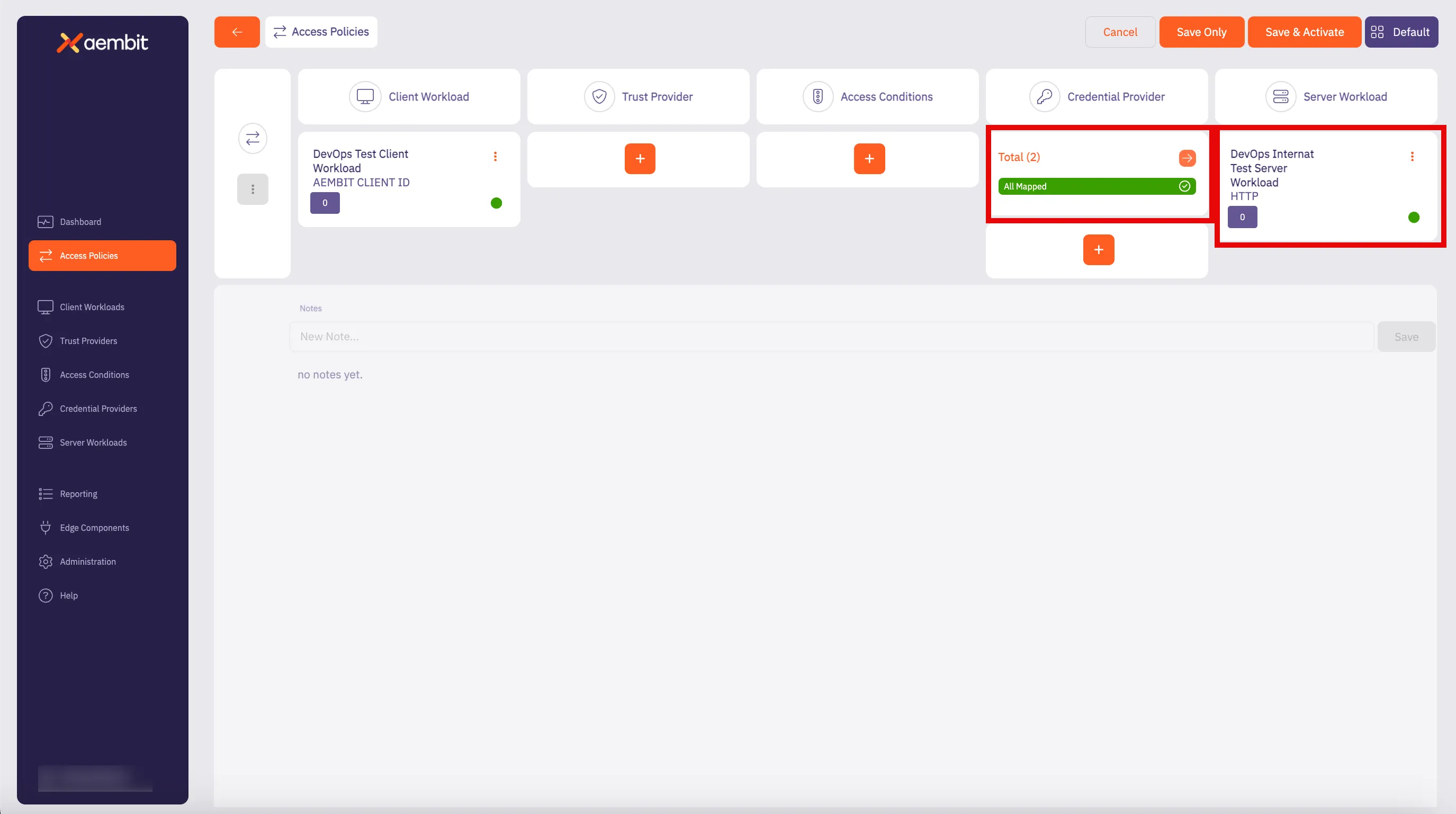

### POST /api/v1/access-policies


**Summary:** Create an Access Policy

**Description:** Create an Access Policy.

**Operation ID:** post-access-policy

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Request Body:** PolicyDTO (`application/json`)

**Responses:**

* **200**: Created Access Policy (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/access-policies"
```

### PUT /api/v1/access-policies


**Summary:** Update an Access Policy

**Description:** Update an Access Policy.

**Operation ID:** put-access-policy

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Request Body:** PolicyDTO (`application/json`)

**Responses:**

* **200**: Updated Access Policy (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PUT \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/access-policies"
```

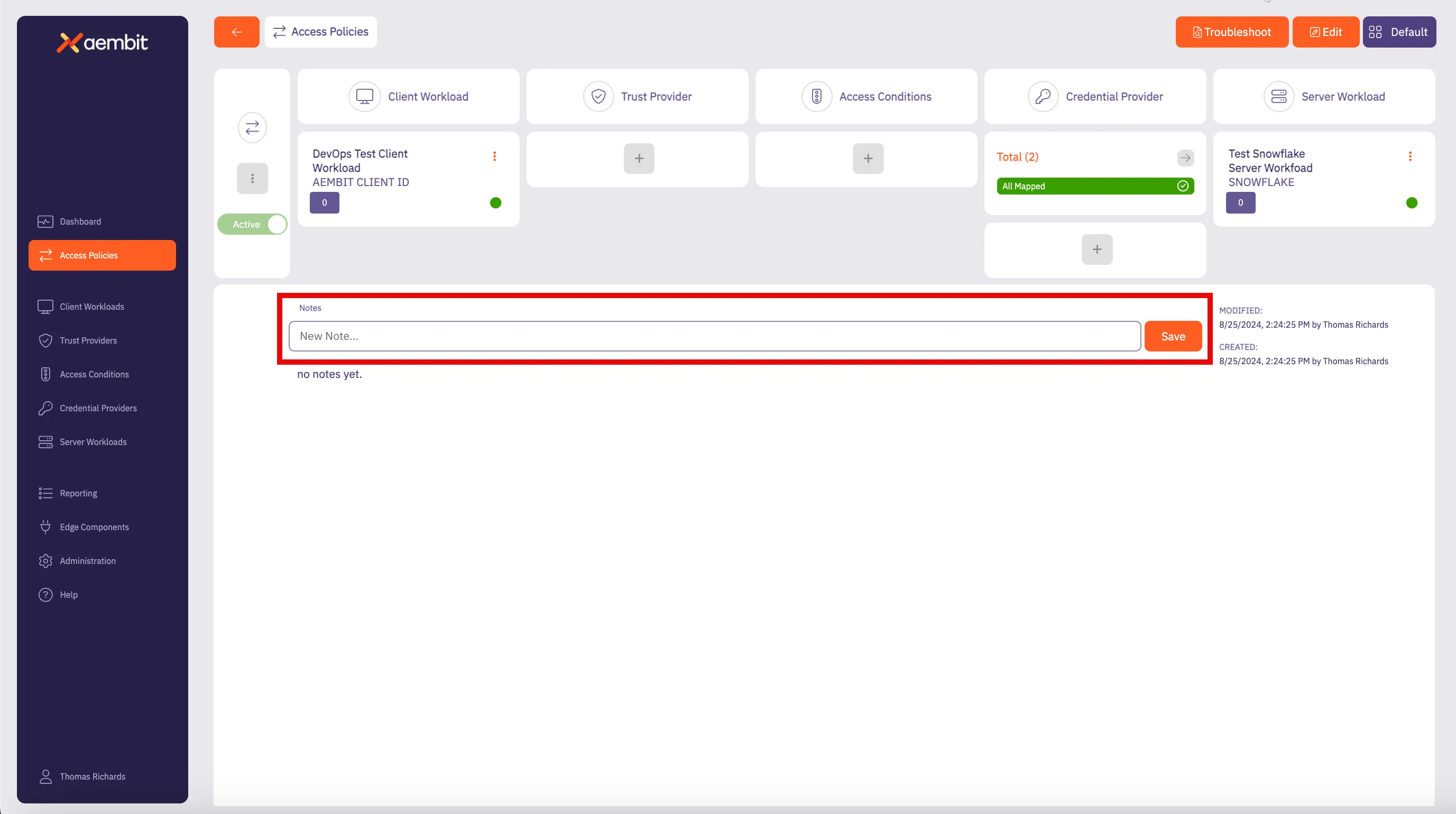

### POST /api/v1/access-policies/{id}/notes

**Summary:** Add a note to an Access Policy

**Description:** Add a note to an Access Policy.

**Operation ID:** post-access-policy-note

**Parameters:**

* **id** (path, required): ID of the Access Policy
* **X-Aembit-ResourceSet** (header, optional): Resource Set ID; if omitted, the Default Resource Set is used

**Request Body:** PolicyNoteDTO (`application/json`)

**Responses:**

* **201**: Note added to an Access Policy
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/access-policies/{id}/notes"
```

## Access Policy v2
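The v1 Access Policy endpoints above are marked as deprecated. Each one has a v2 counterpart in this section with the same path below `/api/v2/access-policies`. The sketch below, with a hypothetical function name, rewrites a v1 Access Policy path to its v2 equivalent. It covers only the path. The v2 request bodies use different DTOs (for example, `CreatePolicyDTO` instead of `PolicyDTO`, and `PatchPolicyV2DTO` instead of `PolicyPatchDTO`), and the v2 endpoints do not list the `X-Aembit-ResourceSet` parameter, so review payloads and headers separately.

```bash
#!/usr/bin/env bash
# to_v2_policy_path PATH
# Rewrites a deprecated /api/v1/access-policies path to its /api/v2 form.
# Other paths are rejected so unrelated calls are never rewritten.
to_v2_policy_path() {
  local path="$1"
  local prefix="/api/v1/access-policies"
  if [[ "$path" != "$prefix" && "$path" != "$prefix"/* ]]; then
    echo "error: not a v1 Access Policy path: $path" >&2
    return 1
  fi
  echo "/api/v2/access-policies${path#"$prefix"}"
}

to_v2_policy_path "/api/v1/access-policies/getByWorkloadIds/{clientWorkloadId}/{serverWorkloadId}"
```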


### GET /api/v2/access-policies/{id}

**Summary:** Get the identified Access Policy

**Description:** Get the Access Policy identified by its ID.

**Operation ID:** get-access-policy-v2

**Parameters:**

* **id** (path, required): ID of the Access Policy

**Responses:**

* **200**: Access Policy (`application/json`)
* **204**: Access Policy Not Found
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v2/access-policies/{id}"
```

### DELETE /api/v2/access-policies/{id}

**Summary:** Delete an Access Policy

**Description:** Delete an Access Policy.

**Operation ID:** delete-access-policy-v2

**Parameters:**

* **id** (path, required): ID of the Access Policy

**Responses:**

* **204**: Deleted the Access Policy
* **400**: Bad Request
* **401**: Not Authenticated
* **404**: Not Found
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X DELETE \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v2/access-policies/{id}"
```

### PATCH /api/v2/access-policies/{id}

**Summary:** Patch an Access Policy

**Description:** Patch an Access Policy.

**Operation ID:** patch-access-policy-v2

**Parameters:**

* **id** (path, required): ID of the Access Policy

**Request Body:** PatchPolicyV2DTO (`application/json`)

**Responses:**

* **200**: Patched Access Policy (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PATCH \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v2/access-policies/{id}"
```

### GET /api/v2/access-policies/getByWorkloadIds/{clientWorkloadId}/{serverWorkloadId}

**Summary:** Get the identified Access Policy

**Description:** Get the Access Policy identified by a Client and Server Workload.

**Operation ID:** get-access-policy-by-workloads-v2

**Parameters:**

* **clientWorkloadId** (path, required): ID of the Client Workload
* **serverWorkloadId** (path, required): ID of the Server Workload

**Responses:**

* **200**: Access Policy (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v2/access-policies/getByWorkloadIds/{clientWorkloadId}/{serverWorkloadId}"
```

### GET /api/v2/access-policies


**Summary:** Get a page of Access Policies

**Description:** Retrieve a page of Access Policies.

**Operation ID:** get-access-policies-v2

**Parameters:**

* **page** (optional)
* **per-page** (optional)
* **filter** (optional)
* **order** (optional)
* **group-by** (optional)

**Responses:**

* **200**: Page of Access Policies (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v2/access-policies"
```

### POST /api/v2/access-policies


**Summary:** Create an Access Policy

**Description:** Create an Access Policy.

**Operation ID:** post-access-policy-v2

**Request Body:** CreatePolicyDTO (`application/json`)

**Responses:**

* **200**: Created Access Policy (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v2/access-policies"
```

### PUT /api/v2/access-policies


**Summary:** Update an Access Policy

**Description:** Update an Access Policy.

**Operation ID:** put-access-policy-v2

**Request Body:** CreatePolicyDTO (`application/json`)

**Responses:**

* **200**: Updated Access Policy (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PUT \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v2/access-policies"
```

### POST /api/v2/access-policies/{id}/notes

**Summary:** Add a note to an Access Policy

**Description:** Add a note to an Access Policy.

**Operation ID:** post-access-policy-note-v2

**Parameters:**

* **id** (path, required): ID of the Access Policy

**Request Body:** PolicyNoteDTO (`application/json`)

**Responses:**

* **201**: Note added to an Access Policy
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Authorization: Bearer <access-token>" \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v2/access-policies/{id}/notes"
```

### GET /api/v2/access-policies/{id}/notes

**Summary:** Get the notes of an Access Policy

**Description:** Retrieve the notes of an Access Policy.

**Operation ID:** get-access-policy-notes-v2

**Parameters:**

* **id** (path, required): ID of the Access Policy

**Responses:**

* **200**: Page of Access Policy Notes (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v2/access-policies/{id}/notes"
```

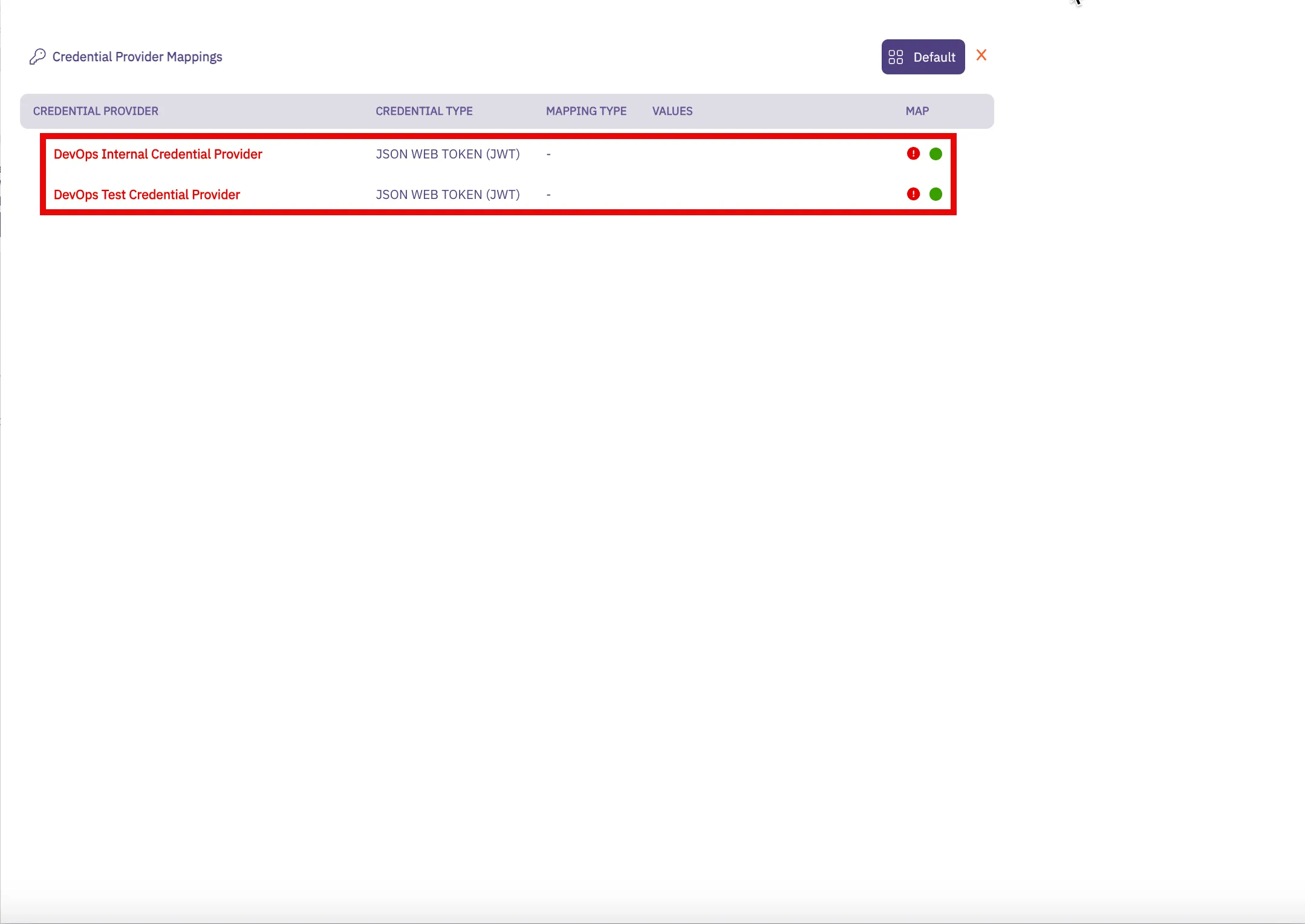

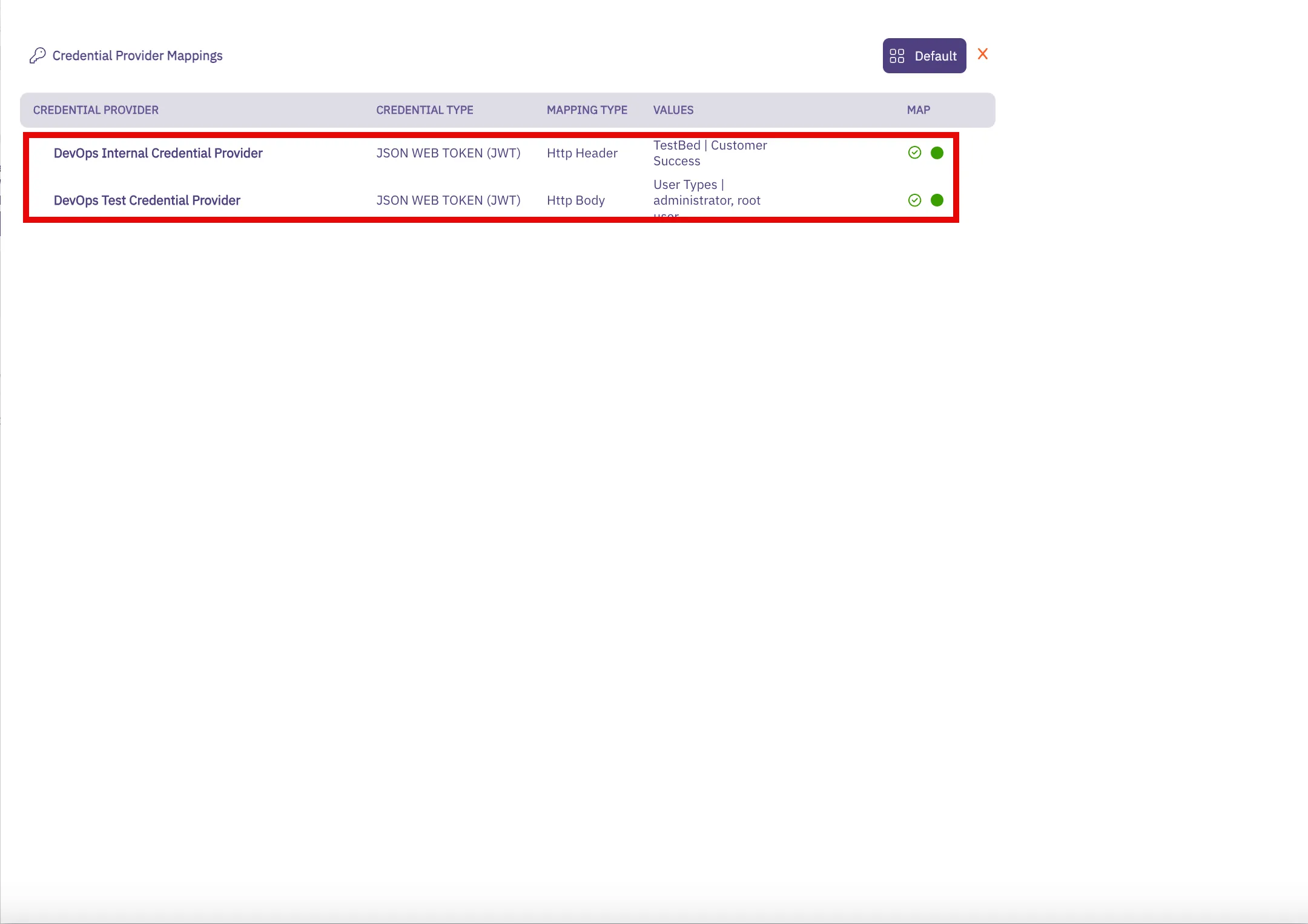

### GET /api/v2/access-policies/{id}/credential-mappings

**Summary:** Get the credential mappings of an Access Policy

**Description:** Retrieve the credential mappings of an Access Policy.

**Operation ID:** get-access-policy-credential-mappings-v2

**Parameters:**

* **id** (path, required): ID of the Access Policy

**Responses:**

* **200**: Page of Credential Mappings (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v2/access-policies/{id}/credential-mappings"
```

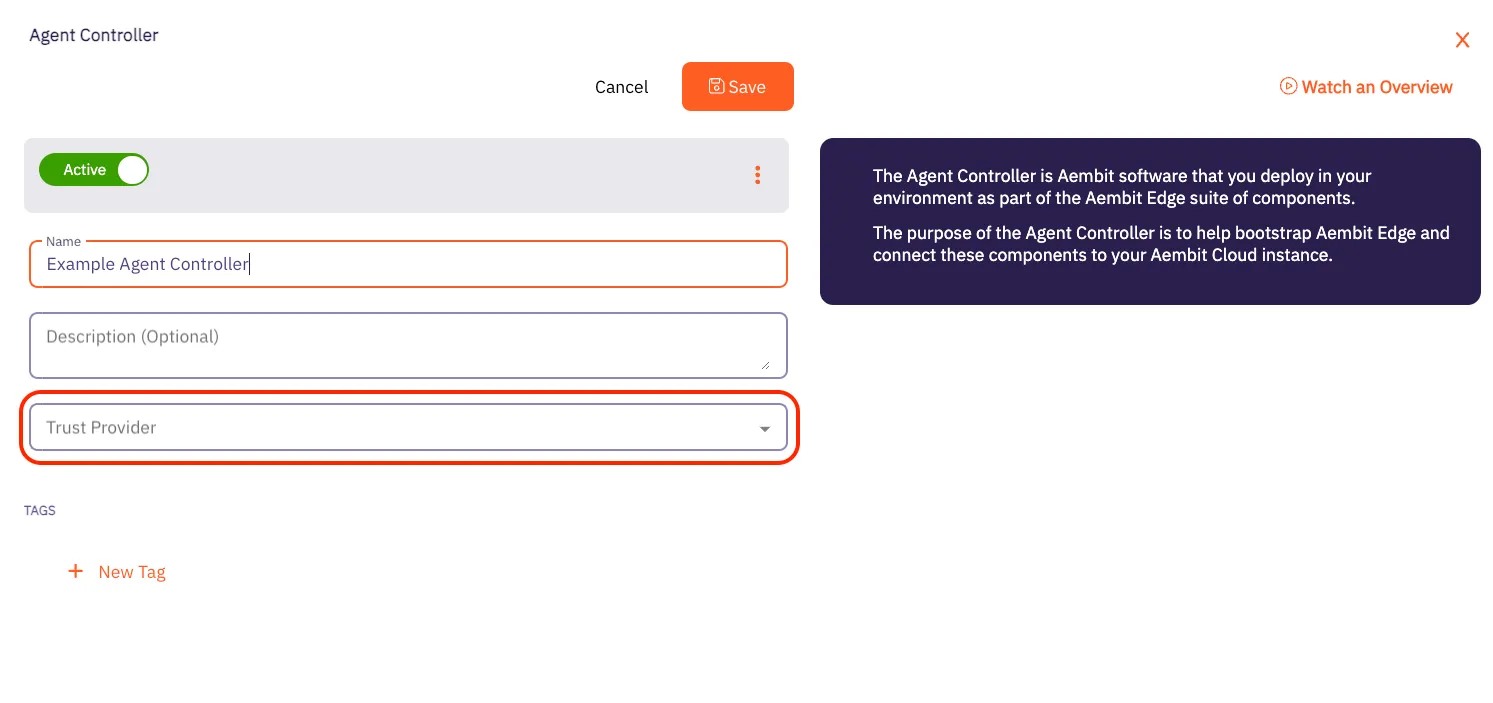

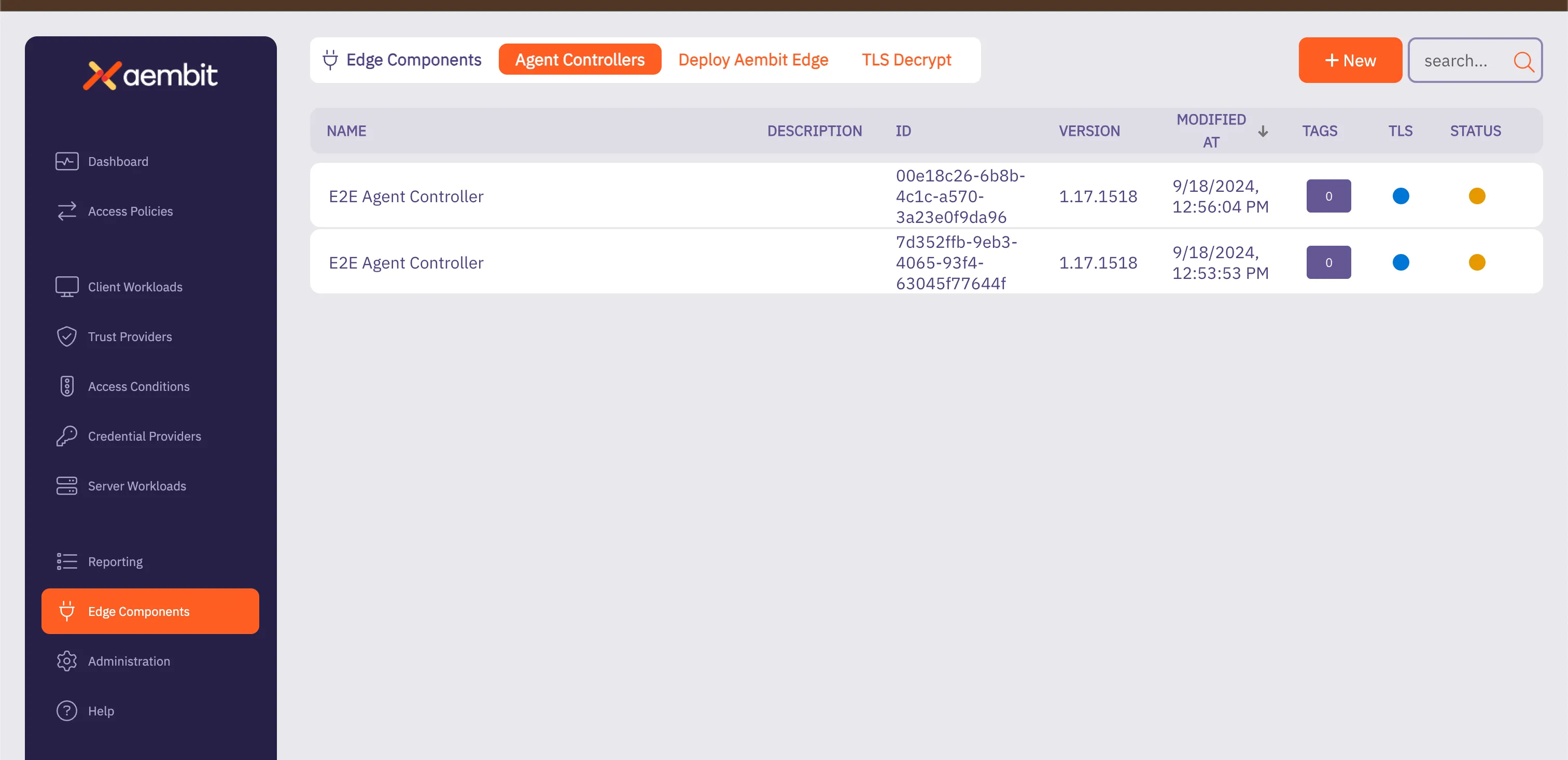

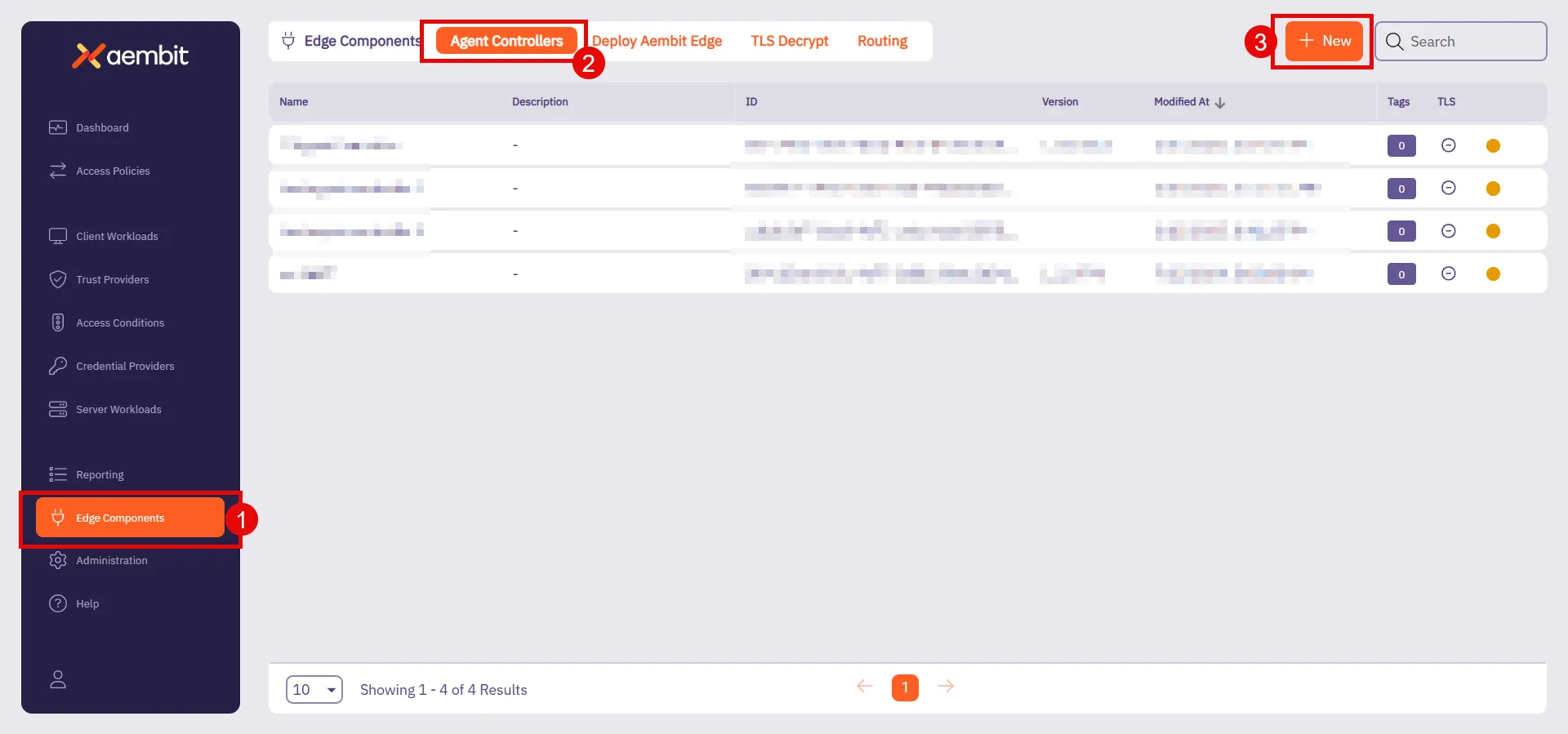

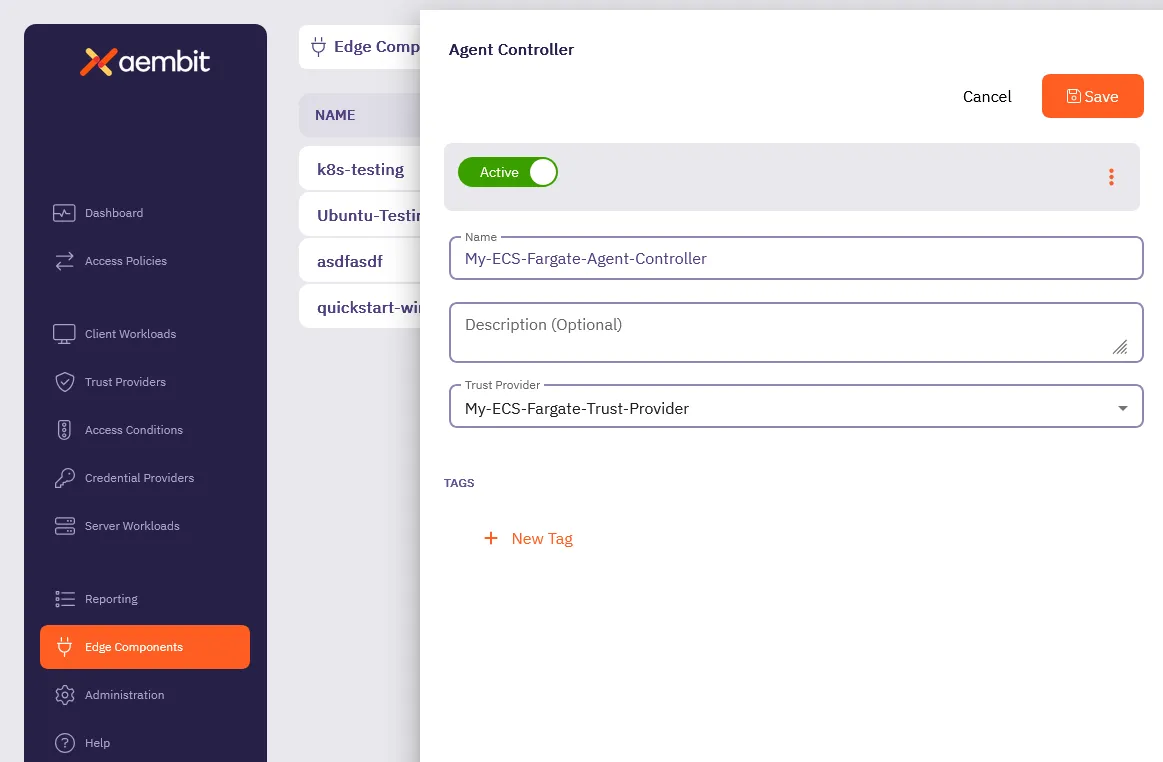

## Agent Controller


### GET /api/v1/agent-controllers


**Summary:** Get a page of Agent Controllers

**Description:** Get a page of Agent Controllers.

**Operation ID:** get-agent-controllers

**Parameters:**

* **page** (optional)
* **per-page** (optional)
* **filter** (optional)
* **order** (optional)
* **group-by** (optional)
* **check-tls-type** (optional)

**Responses:**

* **200**: Page of Agent Controllers (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  -H "Authorization: Bearer <access-token>" \
  "https://your-tenant.aembit.io/api/v1/agent-controllers"
```

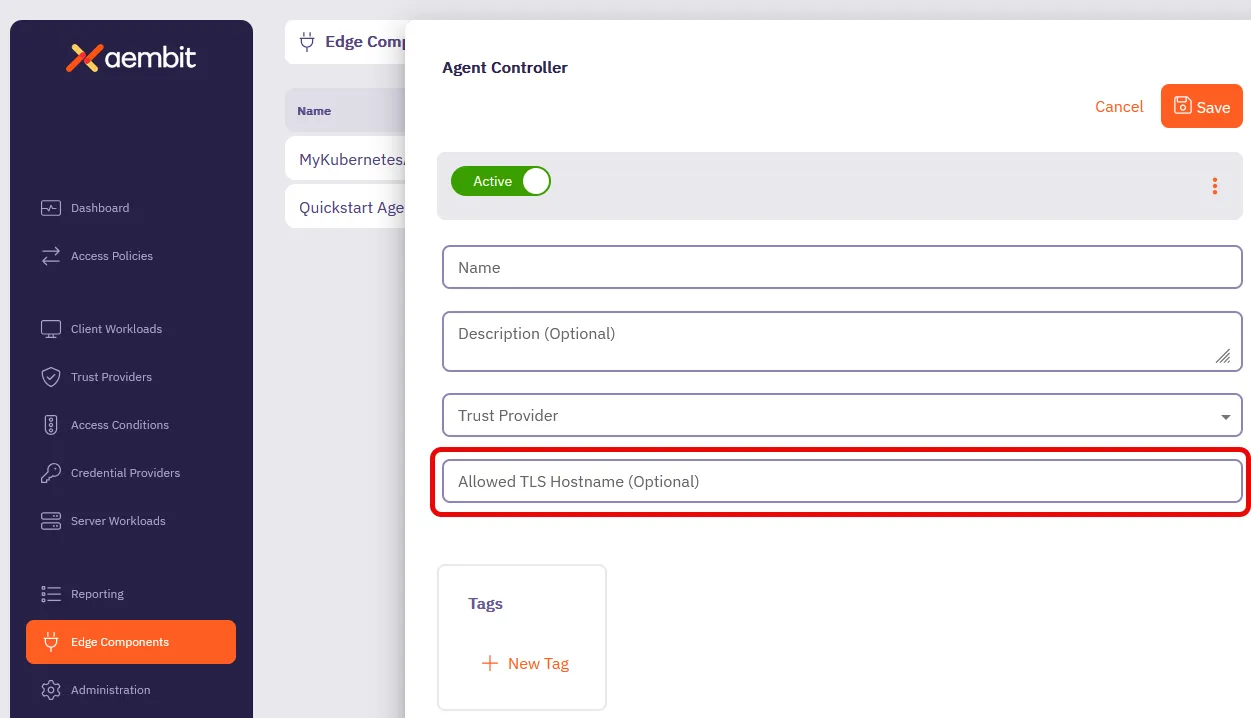

### POST /api/v1/agent-controllers

**Summary:** Create an Agent Controller

**Description:** Create an Agent Controller.

**Operation ID:** post-agent-controller

**Request Body:** `AgentControllerDTO` (`application/json`)

**Responses:**

* **201**: Created Agent Controller (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/agent-controllers"
```
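The `-d '{"example": "data"}'` body in the example is only a placeholder for an `AgentControllerDTO`, whose fields are not listed in this section. For a real payload it is often easier to keep the JSON in a file: `--data-binary @file` sends the file byte for byte, whereas `-d @file` strips newlines. A sketch, assuming hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables and Bearer-token authentication:

```bash
# Sketch only: variable names and Bearer auth are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Create an Agent Controller from a JSON file holding an AgentControllerDTO.
# $1: path to the JSON file.
create_agent_controller() {
  local body_file="$1"
  # The "@" prefix tells curl to read the request body from the file.
  curl -sS -X POST \
    -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
    -H "Content-Type: application/json" \
    --data-binary "@${body_file}" \
    "${AEMBIT_TENANT_URL}/api/v1/agent-controllers"
}
```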

### PUT /api/v1/agent-controllers

**Summary:** Update an Agent Controller

**Description:** Update an Agent Controller.

**Operation ID:** put-agent-controller

**Request Body:** `AgentControllerDTO` (`application/json`)

**Responses:**

* **200**: Updated Agent Controller (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PUT \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/agent-controllers"
```

### GET /api/v1/agent-controllers/{id}

**Summary:** Get an Agent Controller

**Description:** Get an Agent Controller identified by its ID.

**Operation ID:** get-agent-controller

**Parameters:**

* **id** (path) *(required)*: ID of the Agent Controller

**Responses:**

* **200**: Agent Controller (`application/json`)
* **204**: Agent Controller Not Found
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/agent-controllers/{id}"
```
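curl exits with status 0 for any HTTP response unless told otherwise, and this endpoint signals a missing Agent Controller with `204` rather than `404`, so a script has to inspect the status code itself. A sketch that captures it with `-w '%{http_code}'`, assuming hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables and Bearer-token authentication:

```bash
# Sketch only: variable names and Bearer auth are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Print one Agent Controller as JSON.
# Returns 0 when found (200), 2 when not found (204), 1 for any other status.
get_agent_controller() {
  local id="$1" body_file status
  body_file="$(mktemp)"
  # -w '%{http_code}' prints only the status code; the body goes to the temp file.
  status="$(curl -sS -o "$body_file" -w '%{http_code}' \
    -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
    "${AEMBIT_TENANT_URL}/api/v1/agent-controllers/${id}")"
  case "$status" in
    200) cat "$body_file"; rm -f "$body_file"; return 0 ;;
    204) echo "Agent Controller ${id} not found" >&2; rm -f "$body_file"; return 2 ;;
    *)   echo "Request failed with HTTP ${status}" >&2; rm -f "$body_file"; return 1 ;;
  esac
}
```

Callers can then branch on `$?` to tell "not found" apart from other failures.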

### PATCH /api/v1/agent-controllers/{id}

**Summary:** Patch an Agent Controller

**Description:** Patch an Agent Controller identified by its ID.

**Operation ID:** patch-agent-controller

**Parameters:**

* **id** (path) *(required)*: ID of the Agent Controller

**Request Body:** `AgentControllerPatchDTO` (`application/json`)

**Responses:**

* **200**: Patched Agent Controller (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PATCH \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/agent-controllers/{id}"
```
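Where `PUT /api/v1/agent-controllers` takes an `AgentControllerDTO`, this endpoint takes an `AgentControllerPatchDTO` and names the target Agent Controller in the path. A sketch of a small PATCH helper, assuming hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables and Bearer-token authentication:

```bash
# Sketch only: variable names and Bearer auth are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Patch one Agent Controller.
# $1: Agent Controller ID, $2: JSON document holding an AgentControllerPatchDTO.
patch_agent_controller() {
  local id="$1" patch_json="$2"
  curl -sS -X PATCH \
    -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
    -H "Content-Type: application/json" \
    --data-binary "$patch_json" \
    "${AEMBIT_TENANT_URL}/api/v1/agent-controllers/${id}"
}
```

For example, `patch_agent_controller "<agent-controller-id>" '{"description": "updated"}'`; the `description` field is hypothetical, so check the `AgentControllerPatchDTO` schema for the real field names.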

### DELETE /api/v1/agent-controllers/{id}

**Summary:** Delete an Agent Controller

**Description:** Delete an Agent Controller identified by its ID.

**Operation ID:** delete-agent-controller

**Parameters:**

* **id** (path) *(required)*: ID of the Agent Controller

**Responses:**

* **201**: Successfully deleted Agent Controller
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X DELETE \
  "https://your-tenant.aembit.io/api/v1/agent-controllers/{id}"
```
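This endpoint lists `201` as its success status, while other delete operations in this reference return `204` or `200`. A sketch that accepts any `2xx` status rather than one specific code, assuming hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables and Bearer-token authentication:

```bash
# Sketch only: variable names and Bearer auth are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Delete one Agent Controller; succeed on any 2xx status.
delete_agent_controller() {
  local id="$1" status
  status="$(curl -sS -o /dev/null -w '%{http_code}' -X DELETE \
    -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
    "${AEMBIT_TENANT_URL}/api/v1/agent-controllers/${id}")"
  case "$status" in
    2??) return 0 ;;
    *) echo "Delete failed with HTTP ${status}" >&2; return 1 ;;
  esac
}
```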

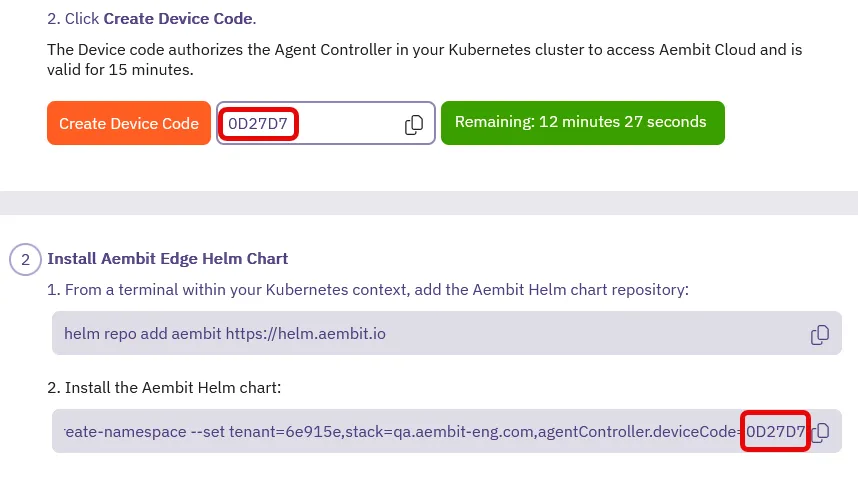

### POST /api/v1/agent-controllers/{agentControllerExternalId}/device-code

**Summary:** Generate a Device Code for an Agent Controller

**Description:** Generate a Device Code for an Agent Controller.

**Operation ID:** post-agent-controller-device-code

**Parameters:**

* **agentControllerExternalId** (path) *(required)*: External ID of the Agent Controller

**Responses:**

* **201**: Agent Controller Device Code (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  "https://your-tenant.aembit.io/api/v1/agent-controllers/{agentControllerExternalId}/device-code"
```
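If you script this call, it is prudent to treat the returned device code as sensitive, since it is issued for a specific Agent Controller. A sketch that writes the response to a file readable only by the current user (via `umask 077` in a subshell) and uses `--fail` so that HTTP errors produce a non-zero exit code; the `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variable names and Bearer-token authentication are assumptions:

```bash
# Sketch only: variable names and Bearer auth are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Request a device code for one Agent Controller and save the JSON response.
# $1: Agent Controller external ID, $2: output file path.
request_device_code() {
  local controller_id="$1" out_file="$2"
  # The subshell keeps the restrictive umask local: new files are created as 0600.
  (
    umask 077
    # --fail makes curl exit non-zero (22) on HTTP 4xx/5xx responses.
    curl -sS --fail -X POST \
      -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
      -o "$out_file" \
      "${AEMBIT_TENANT_URL}/api/v1/agent-controllers/${controller_id}/device-code"
  )
}
```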

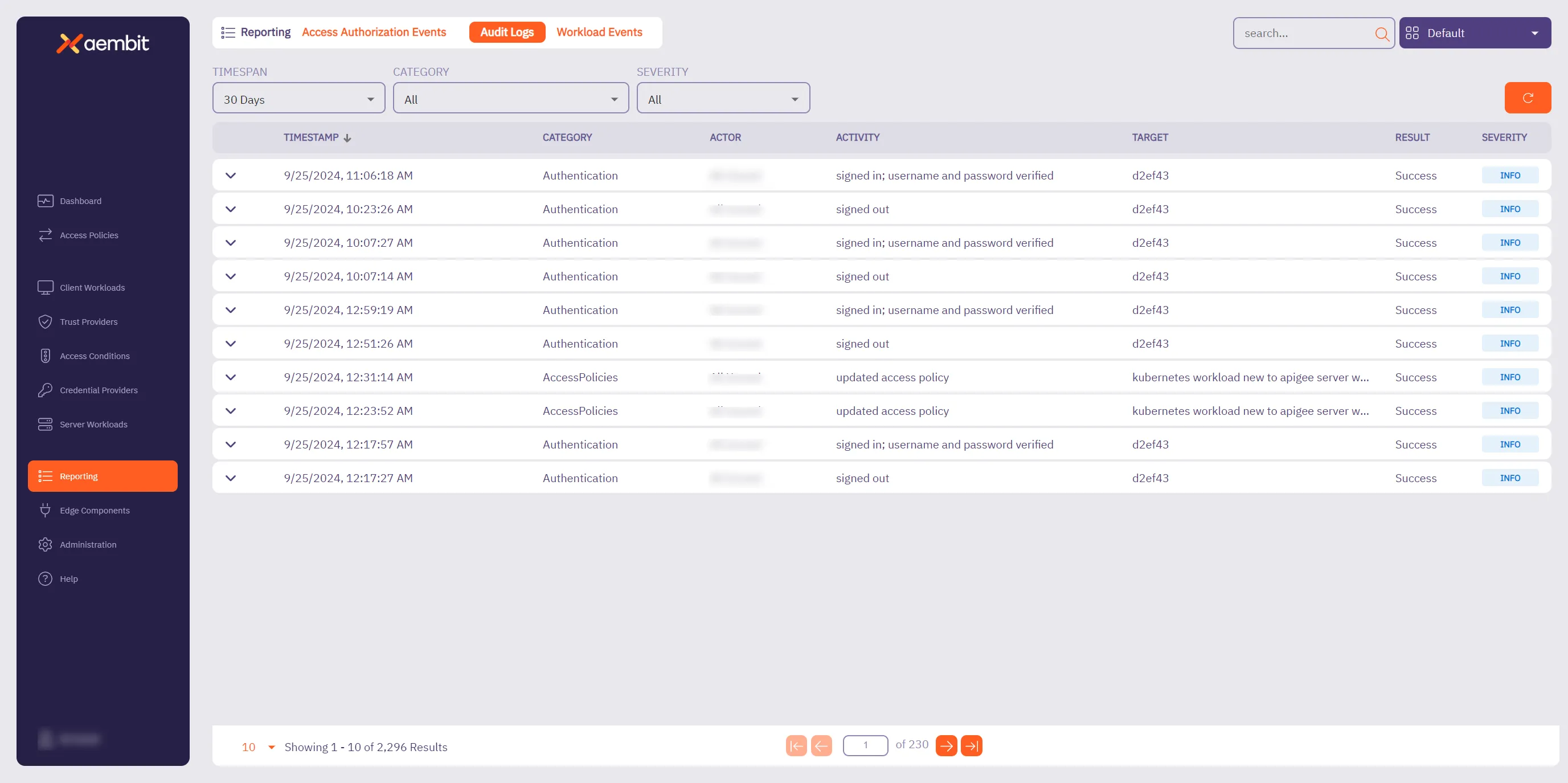

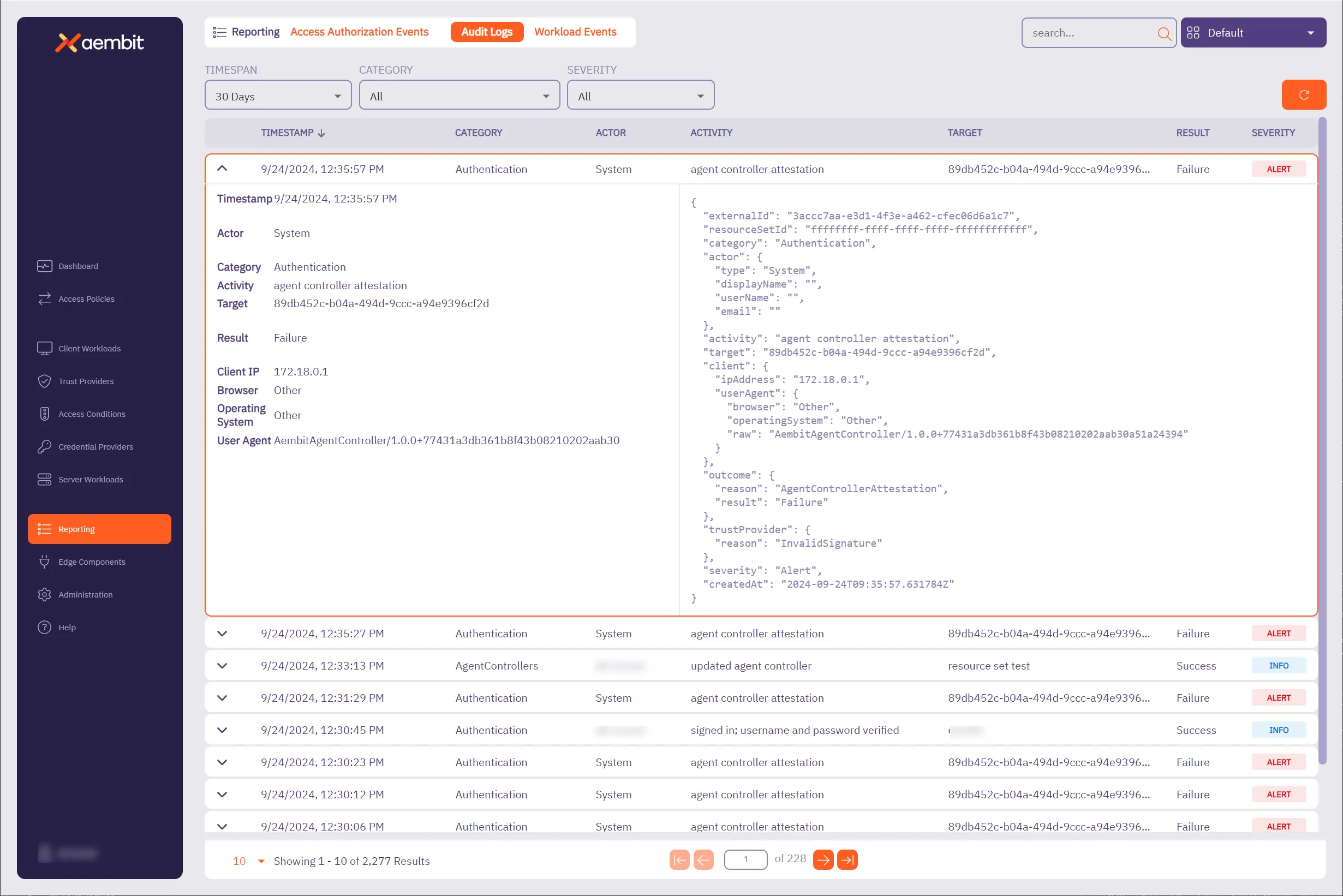

## Audit Log

### GET /api/v1/audit-logs

**Summary:** Get a page of Audit Log events

**Description:** Get a page of Audit Log events.

**Operation ID:** get-audit-logs

**Parameters:**

* **X-Aembit-ResourceSet** (header) *(optional)*
* **page** (query) *(optional)*
* **per-page** (query) *(optional)*
* **order** (query) *(optional)*
* **search** (query) *(optional)*
* **span-last-days** (query) *(optional)*
* **category** (query) *(optional)*
* **severity** (query) *(optional)*

**Responses:**

* **200**: Page of Audit Logs (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/audit-logs"
```
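All of the listed filters are optional. A sketch of a wrapper that takes any of them as `name=value` arguments and sends them as URL-encoded query parameters via curl's `-G` and `--data-urlencode`; it assumes the filters are query parameters, hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables, and Bearer-token authentication. Accepted values for `category` and `severity` are not documented in this section, so the usage line sticks to `span-last-days` and `search`:

```bash
# Sketch only: variable names, Bearer auth, and query-parameter placement are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Fetch Audit Log events, passing each "name=value" argument as a query parameter.
get_audit_logs() {
  local args=() pair
  for pair in "$@"; do
    # --data-urlencode encodes only the part after the first "=".
    args+=(--data-urlencode "$pair")
  done
  curl -sS -G \
    -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
    "${args[@]}" \
    "${AEMBIT_TENANT_URL}/api/v1/audit-logs"
}
```

For example, `get_audit_logs "span-last-days=7" "search=agent controller"` sends the space in the search term as `%20`.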

### GET /api/v1/audit-logs/{id}

**Summary:** Get an Audit Log event

**Description:** Get an Audit Log event identified by its ID.

**Operation ID:** get-audit-log

**Parameters:**

* **id** (path) *(required)*: ID of the Audit Log event
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **200**: Audit Log (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/audit-logs/{id}"
```

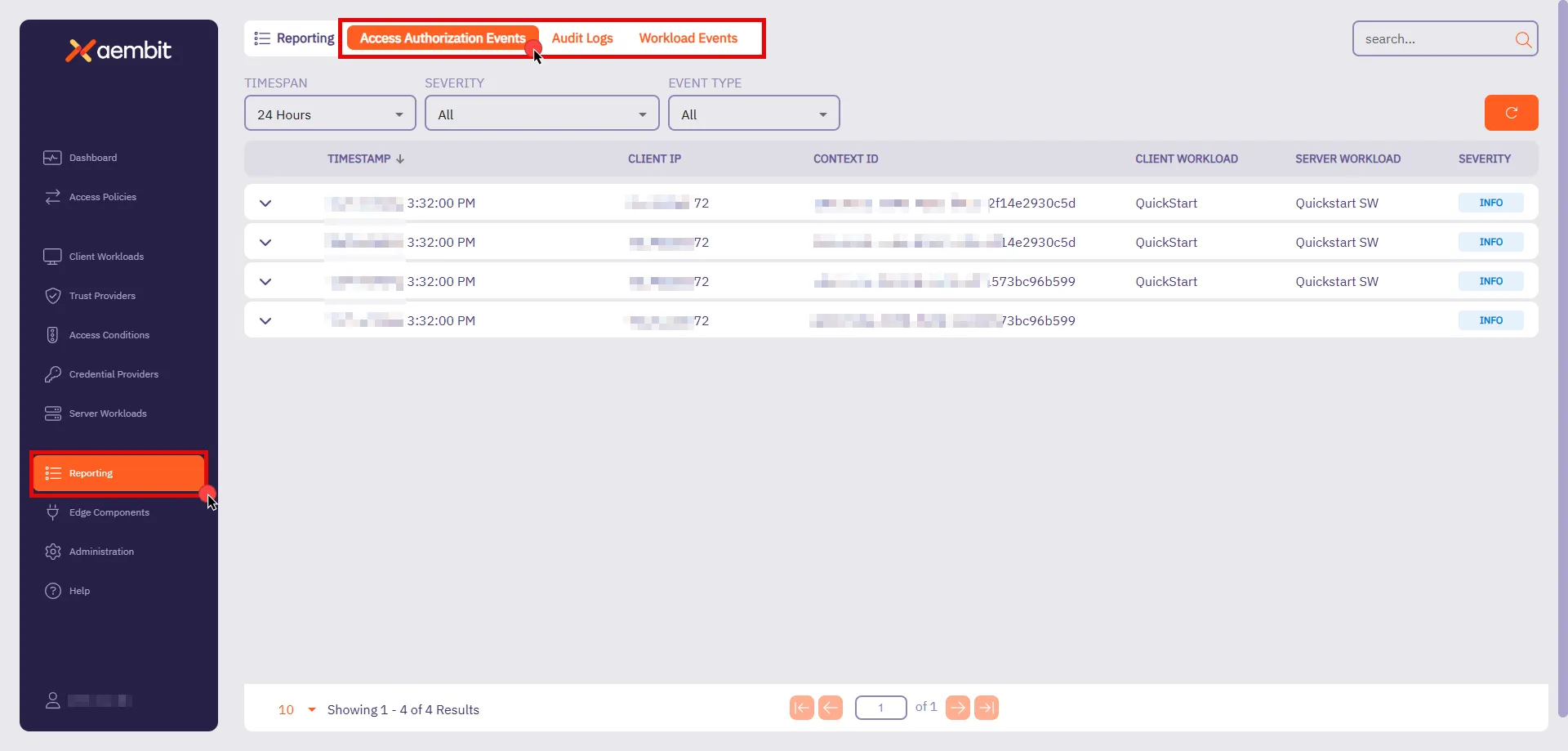

## Access Authorization Event

### GET /api/v1/authorization-events

**Summary:** Get a page of Access Authorization Events

**Description:** Get a page of Access Authorization Events.

**Operation ID:** get-access-authorization-events

**Parameters:**

* **X-Aembit-ResourceSet** (header) *(optional)*
* **page** (query) *(optional)*
* **per-page** (query) *(optional)*
* **order** (query) *(optional)*
* **search** (query) *(optional)*
* **span-last-hours** (query) *(optional)*
* **severity** (query) *(optional)*
* **event-type** (query) *(optional)*

**Responses:**

* **200**: Page of Access Authorization Events (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/authorization-events"
```
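Several endpoints in this reference accept an optional `X-Aembit-ResourceSet` parameter, which by its name is an HTTP request header. A sketch that adds it only when a value is supplied; treating the value as a Resource Set identifier is an assumption, as are the hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables, Bearer-token authentication, and the 24-hour default window:

```bash
# Sketch only: variable names, Bearer auth, and the header value format are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Fetch Access Authorization Events from the last N hours.
# $1: Resource Set value (empty to omit the header), $2: hours (arbitrary default 24).
get_authorization_events() {
  local resource_set="${1:-}" hours="${2:-24}"
  local headers=(-H "Authorization: Bearer ${AEMBIT_TOKEN}")
  # Send X-Aembit-ResourceSet only when a value was given.
  if [ -n "$resource_set" ]; then
    headers+=(-H "X-Aembit-ResourceSet: ${resource_set}")
  fi
  curl -sS -G "${headers[@]}" \
    --data-urlencode "span-last-hours=${hours}" \
    "${AEMBIT_TENANT_URL}/api/v1/authorization-events"
}
```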

### GET /api/v1/authorization-events/{id}

**Summary:** Get an Access Authorization Event

**Description:** Get an Access Authorization Event identified by its ID.

**Operation ID:** get-access-authorization-event

**Parameters:**

* **id** (path) *(required)*: ID of the Access Authorization Event
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **200**: Access Authorization Event (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/authorization-events/{id}"
```

## Credential Provider (Deprecated)

### GET /api/v1/credential-providers/{id}

**Summary:** Get a Credential Provider

**Description:** Get a Credential Provider identified by its ID.

**Operation ID:** get-credential-provider

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **200**: Credential Provider (`application/json`)
* **204**: Credential Provider Not Found
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/credential-providers/{id}"
```

### DELETE /api/v1/credential-providers/{id}

**Summary:** Delete a Credential Provider

**Description:** Delete a Credential Provider identified by its ID.

**Operation ID:** delete-credential-provider

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **204**: Deleted Credential Provider
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X DELETE \
  "https://your-tenant.aembit.io/api/v1/credential-providers/{id}"
```

### PATCH /api/v1/credential-providers/{id}

**Summary:** Patch a Credential Provider

**Description:** Patch a Credential Provider identified by its ID.

**Operation ID:** patch-credential-provider

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Request Body:** `CredentialProviderPatchDTO` (`application/json`)

**Responses:**

* **201**: Patched Credential Provider (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PATCH \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/credential-providers/{id}"
```

### GET /api/v1/credential-providers/{id}/authorize

**Summary:** Get a Credential Provider Authorization URL

**Description:** Get the Authorization URL of the Credential Provider identified by its ID.

**Operation ID:** get-credential-provider-authorization

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **302**: Redirects to the Credential Provider Authorization URL
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/credential-providers/{id}/authorize"
```

### GET /api/v1/credential-providers

**Summary:** Get a page of Credential Providers

**Description:** Get a page of Credential Providers.

**Operation ID:** get-credential-providers

**Parameters:**

* **X-Aembit-ResourceSet** (header) *(optional)*
* **page** (query) *(optional)*
* **per-page** (query) *(optional)*
* **filter** (query) *(optional)*
* **order** (query) *(optional)*
* **group-by** (query) *(optional)*

**Responses:**

* **200**: Page of Credential Providers (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/credential-providers"
```

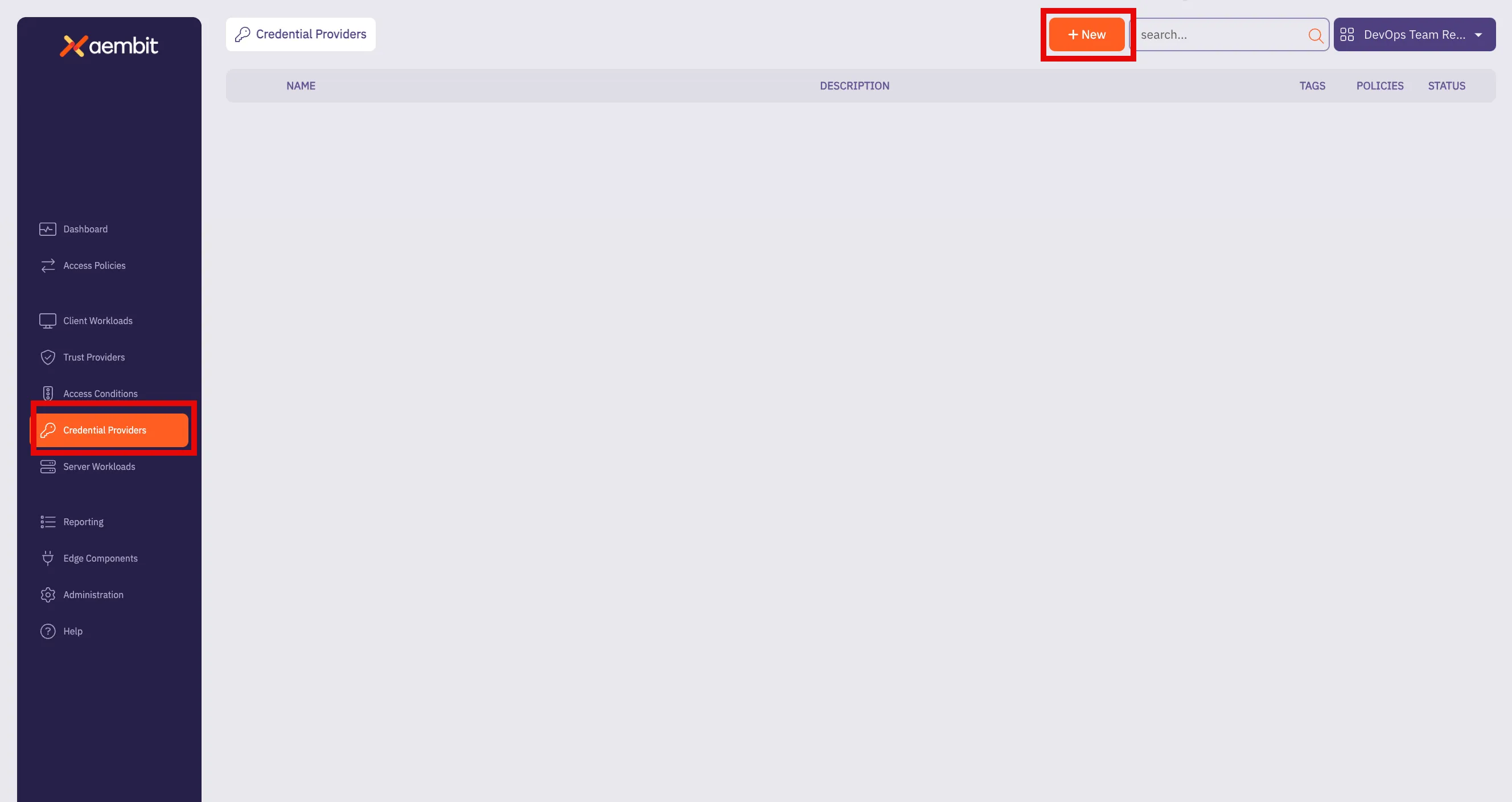

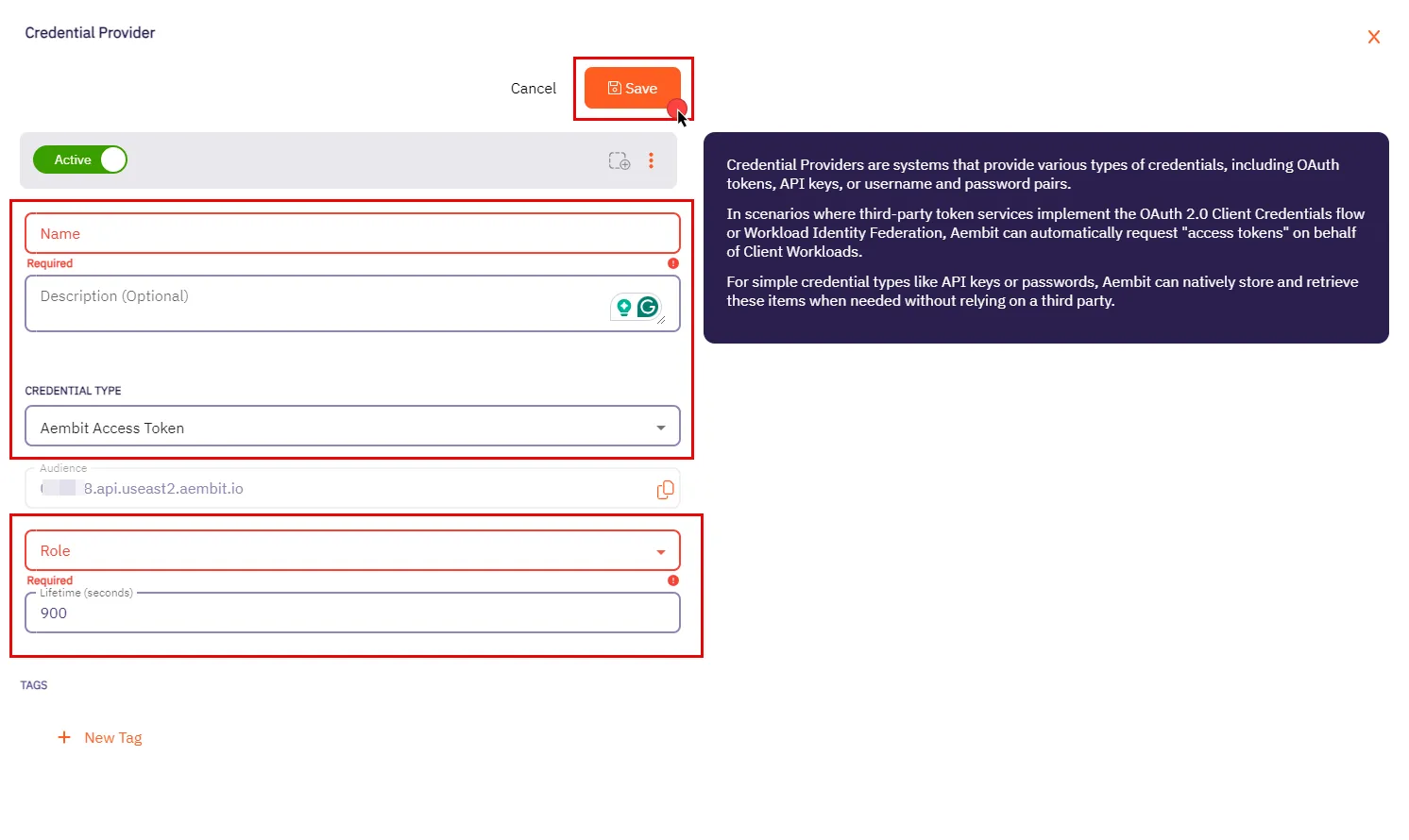

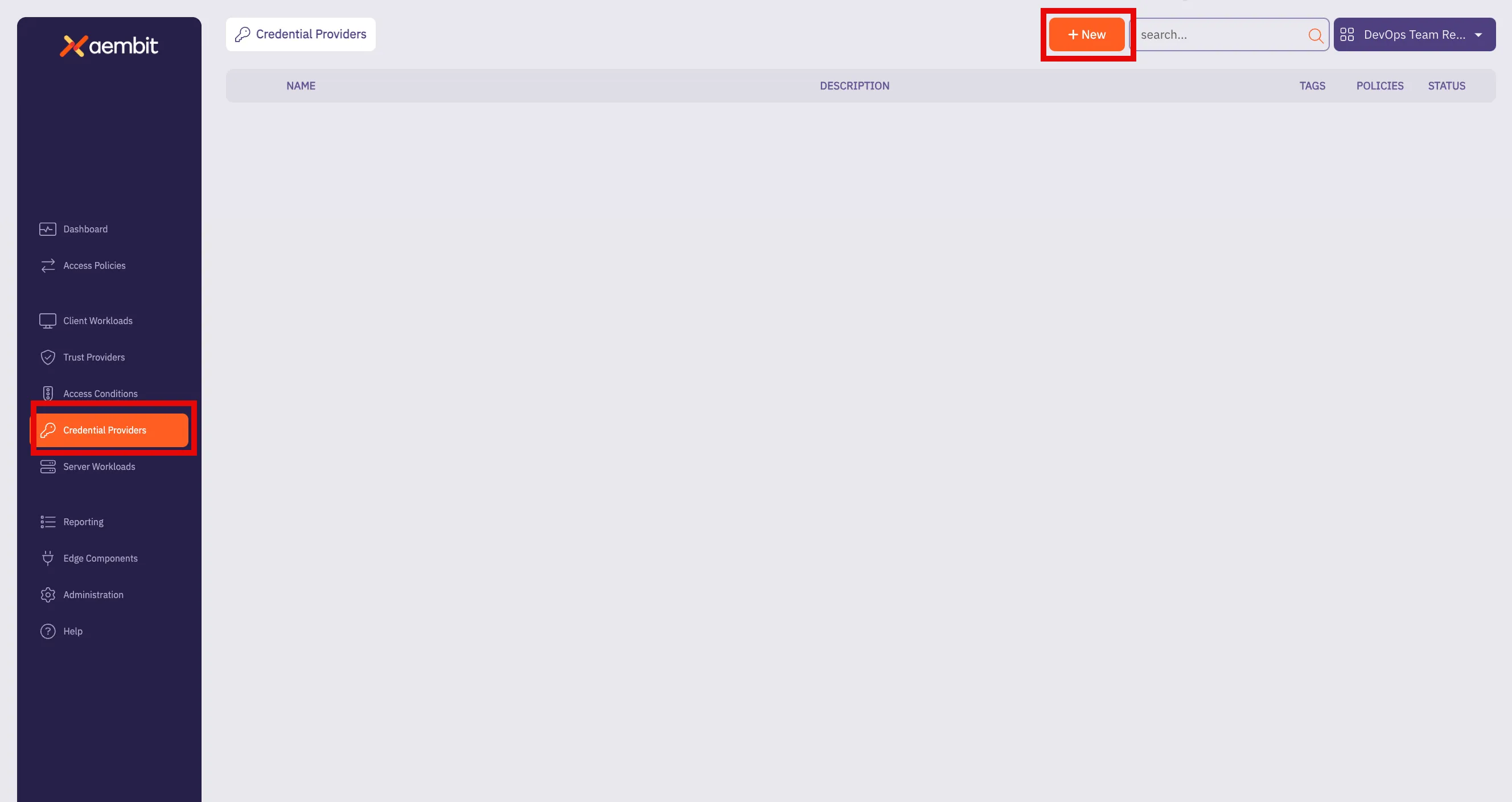

### POST /api/v1/credential-providers

**Summary:** Create a Credential Provider

**Description:** Create a Credential Provider.

**Operation ID:** post-credential-provider

**Parameters:**

* **X-Aembit-ResourceSet** (header) *(optional)*

**Request Body:** `CredentialProviderDTO` (`application/json`)

**Responses:**

* **201**: Created Credential Provider (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/credential-providers"
```

### PUT /api/v1/credential-providers

**Summary:** Update a Credential Provider

**Description:** Update a Credential Provider.

**Operation ID:** put-credential-provider

**Parameters:**

* **X-Aembit-ResourceSet** (header) *(optional)*

**Request Body:** `CredentialProviderDTO` (`application/json`)

**Responses:**

* **201**: Updated Credential Provider (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PUT \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/credential-providers"
```

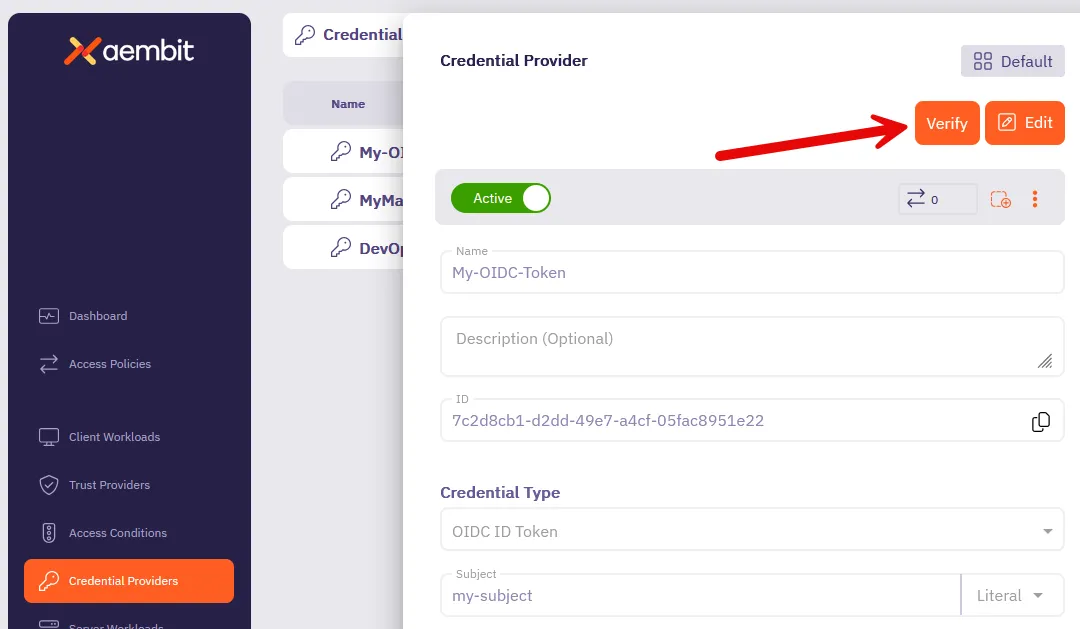

### GET /api/v1/credential-providers/{id}/verification

**Summary:** Verify a Credential Provider

**Description:** Verify that the Credential Provider can successfully return a credential.

**Operation ID:** get-credential-provider-verification

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **200**: Details on the verification of the Credential Provider (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/credential-providers/{id}/verification"
```

## Credential Provider Integration

### GET /api/v1/credential-integrations/{id}

**Summary:** Get a Credential Provider Integration

**Description:** Get a Credential Provider Integration identified by its ID.

**Operation ID:** get-credential-provider-integration

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider Integration

**Responses:**

* **200**: Credential Provider Integration (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/credential-integrations/{id}"
```

### DELETE /api/v1/credential-integrations/{id}

**Summary:** Delete a Credential Provider Integration

**Description:** Delete a Credential Provider Integration identified by its ID.

**Operation ID:** delete-credential-provider-integration

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider Integration

**Responses:**

* **204**: Successfully deleted Credential Provider Integration
* **400**: Bad Request
* **401**: Not Authenticated
* **404**: Not Found
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X DELETE \
  "https://your-tenant.aembit.io/api/v1/credential-integrations/{id}"
```
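Because this endpoint documents both `204` (deleted) and `404` (not found), a cleanup script can treat `404` as "already gone" so that rerunning it does not fail. A sketch of that idempotent pattern, assuming hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables and Bearer-token authentication:

```bash
# Sketch only: variable names and Bearer auth are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Delete one Credential Provider Integration, tolerating one that no longer exists.
delete_credential_integration() {
  local id="$1" status
  status="$(curl -sS -o /dev/null -w '%{http_code}' -X DELETE \
    -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
    "${AEMBIT_TENANT_URL}/api/v1/credential-integrations/${id}")"
  case "$status" in
    204) echo "deleted" ;;
    404) echo "already absent" ;;  # treat a missing integration as already deleted
    *) echo "Delete failed with HTTP ${status}" >&2; return 1 ;;
  esac
}
```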

### PATCH /api/v1/credential-integrations/{id}

**Summary:** Patch a Credential Provider Integration

**Description:** Patch a Credential Provider Integration identified by its ID.

**Operation ID:** patch-credential-provider-integration

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider Integration

**Request Body:** `CredentialProviderIntegrationPatchDTO` (`application/json`)

**Responses:**

* **200**: Patched Credential Provider Integration (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PATCH \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/credential-integrations/{id}"
```

### GET /api/v1/credential-integrations

**Summary:** Get a page of Credential Provider Integrations

**Description:** Get a page of Credential Provider Integrations.

**Operation ID:** get-credential-provider-integrations

**Parameters:**

* **page** (query) *(optional)*
* **per-page** (query) *(optional)*
* **filter** (query) *(optional)*
* **order** (query) *(optional)*
* **group-by** (query) *(optional)*

**Responses:**

* **200**: Page of Credential Provider Integrations (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/credential-integrations"
```

### POST /api/v1/credential-integrations

**Summary:** Create a Credential Provider Integration

**Description:** Create a Credential Provider Integration.

**Operation ID:** post-credential-provider-integration

**Request Body:** `CredentialProviderIntegrationDTO` (`application/json`)

**Responses:**

* **201**: Created Credential Provider Integration (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/credential-integrations"
```

### PUT /api/v1/credential-integrations

**Summary:** Update a Credential Provider Integration

**Description:** Update a Credential Provider Integration.

**Operation ID:** put-credential-provider-integration

**Request Body:** `CredentialProviderIntegrationDTO` (`application/json`)

**Responses:**

* **200**: Updated Credential Provider Integration (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **404**: Not Found
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PUT \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/credential-integrations"
```

## Credential Provider v2

### POST /api/v2/credential-providers

**Summary:** Create a Credential Provider

**Description:** Create a Credential Provider.

**Operation ID:** post-credential-provider2

**Parameters:**

* **X-Aembit-ResourceSet** (header) *(optional)*

**Request Body:** `CredentialProviderV2DTO` (`application/json`)

**Responses:**

* **201**: Created Credential Provider (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v2/credential-providers"
```
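A sketch that combines the optional `X-Aembit-ResourceSet` header with a JSON body read from a file and checks for the documented `201` status. The `CredentialProviderV2DTO` fields are not listed in this section; the `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variable names, Bearer-token authentication, and the Resource Set value format are assumptions:

```bash
# Sketch only: variable names, Bearer auth, and the header value format are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Create a Credential Provider from a JSON file holding a CredentialProviderV2DTO.
# $1: JSON file, $2: optional Resource Set value for the X-Aembit-ResourceSet header.
# Prints the response body; returns non-zero unless the status is 201.
create_credential_provider_v2() {
  local body_file="$1" resource_set="${2:-}" response status
  local headers=(-H "Authorization: Bearer ${AEMBIT_TOKEN}" -H "Content-Type: application/json")
  if [ -n "$resource_set" ]; then
    headers+=(-H "X-Aembit-ResourceSet: ${resource_set}")
  fi
  response="$(mktemp)"
  status="$(curl -sS -X POST "${headers[@]}" \
    --data-binary "@${body_file}" \
    -o "$response" -w '%{http_code}' \
    "${AEMBIT_TENANT_URL}/api/v2/credential-providers")"
  cat "$response"
  rm -f "$response"
  # 201 is the documented success status for this endpoint.
  [ "$status" = "201" ]
}
```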

### PUT /api/v2/credential-providers

**Summary:** Update a Credential Provider

**Description:** Update a Credential Provider.

**Operation ID:** put-credential-provider2

**Parameters:**

* **X-Aembit-ResourceSet** (header) *(optional)*

**Request Body:** `CredentialProviderV2DTO` (`application/json`)

**Responses:**

* **200**: Updated Credential Provider (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PUT \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v2/credential-providers"
```

### GET /api/v2/credential-providers

**Summary:** Get a page of Credential Providers

**Description:** Get a page of Credential Providers.

**Operation ID:** get-credential-providers-v2

**Parameters:**

* **X-Aembit-ResourceSet** (header) *(optional)*
* **page** (query) *(optional)*
* **per-page** (query) *(optional)*
* **filter** (query) *(optional)*
* **order** (query) *(optional)*
* **group-by** (query) *(optional)*

**Responses:**

* **200**: Page of Credential Providers (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v2/credential-providers"
```

### GET /api/v2/credential-providers/{id}

**Summary:** Get a Credential Provider

**Description:** Get a Credential Provider identified by its ID.

**Operation ID:** get-credential-provider2

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **200**: Credential Provider (`application/json`)
* **204**: Credential Provider Not Found
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v2/credential-providers/{id}"
```

### DELETE /api/v2/credential-providers/{id}

**Summary:** Delete a Credential Provider

**Description:** Delete a Credential Provider identified by its ID.

**Operation ID:** delete-credential-provider2

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **204**: Deleted Credential Provider
* **400**: Bad Request
* **401**: Not Authenticated
* **404**: Not Found
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X DELETE \
  "https://your-tenant.aembit.io/api/v2/credential-providers/{id}"
```

### PATCH /api/v2/credential-providers/{id}

**Summary:** Patch a Credential Provider

**Description:** Patch a Credential Provider identified by its ID.

**Operation ID:** patch-credential-provider-v2

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Request Body:** `CredentialProviderPatchDTO` (`application/json`)

**Responses:**

* **200**: Patched Credential Provider (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PATCH \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v2/credential-providers/{id}"
```

### GET /api/v2/credential-providers/{id}/verification

**Summary:** Verify a Credential Provider

**Description:** Verify that the Credential Provider can successfully return a credential.

**Operation ID:** get-credential-provider-verification-v2

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **200**: Details on the verification of the Credential Provider (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v2/credential-providers/{id}/verification"
```
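A `200` from this endpoint carries the verification details in its body; whether the credential was actually obtained has to be read from those details, whose fields are not described here. A sketch that prints the details and fails only when the request itself does not return `200`, assuming hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables and Bearer-token authentication:

```bash
# Sketch only: variable names and Bearer auth are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Run the verification for one Credential Provider and print the returned details.
# Returns non-zero when the HTTP status is not 200.
verify_credential_provider() {
  local provider_id="$1" details status
  details="$(mktemp)"
  status="$(curl -sS -o "$details" -w '%{http_code}' \
    -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
    "${AEMBIT_TENANT_URL}/api/v2/credential-providers/${provider_id}/verification")"
  cat "$details"
  rm -f "$details"
  if [ "$status" != "200" ]; then
    echo "Verification request failed with HTTP ${status}" >&2
    return 1
  fi
}
```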

### GET /api/v2/credential-providers/{id}/authorize

**Summary:** Get a Credential Provider Authorization URL

**Description:** Get the Authorization URL of the Credential Provider identified by its ID.

**Operation ID:** get-credential-provider-authorization-v2

**Parameters:**

* **id** (path) *(required)*: ID of the Credential Provider
* **X-Aembit-ResourceSet** (header) *(optional)*

**Responses:**

* **302**: Redirects to the Credential Provider Authorization URL
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v2/credential-providers/{id}/authorize"
```
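This endpoint answers with a `302` redirect. To capture the authorization URL instead of following it, call curl without `-L` and print the `%{redirect_url}` write-out variable. A sketch, assuming hypothetical `AEMBIT_TENANT_URL`/`AEMBIT_TOKEN` variables and Bearer-token authentication:

```bash
# Sketch only: variable names and Bearer auth are assumptions.
AEMBIT_TENANT_URL="${AEMBIT_TENANT_URL:-https://your-tenant.aembit.io}"

# Print the Authorization URL a Credential Provider redirects to.
get_authorization_url() {
  local provider_id="$1"
  # Without -L, curl does not follow the 302; %{redirect_url} prints where it points.
  curl -sS -o /dev/null -w '%{redirect_url}' \
    -H "Authorization: Bearer ${AEMBIT_TOKEN}" \
    "${AEMBIT_TENANT_URL}/api/v2/credential-providers/${provider_id}/authorize"
}
```

The printed URL can then be opened in a browser to complete the provider's authorization flow.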

## DiscoveryIntegration

### GET /api/v1/discovery-integrations

**Summary:** Get a page of Integrations

**Description:** Get a page of Integrations.

**Operation ID:** get-discovery-integrations

**Parameters:**

* **page** (query) *(optional)*
* **per-page** (query) *(optional)*
* **filter** (query) *(optional)*
* **order** (query) *(optional)*

**Responses:**

* **200**: Page of Integrations (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/discovery-integrations"
```

### POST /api/v1/discovery-integrations

**Summary:** Create an Integration

**Description:** Create an Integration.

**Operation ID:** post-discovery-integration

**Request Body:** `DiscoveryIntegrationDTO` (`application/json`)

**Responses:**

* **201**: Created Integration (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X POST \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/discovery-integrations"
```

### PUT /api/v1/discovery-integrations

**Summary:** Update an Integration

**Description:** Update an Integration.

**Operation ID:** put-discovery-integration

**Request Body:** `DiscoveryIntegrationDTO` (`application/json`)

**Responses:**

* **200**: Updated Integration (`application/json`)
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X PUT \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/discovery-integrations"
```

### GET /api/v1/discovery-integrations/{id}

**Summary:** Get an Integration

**Description:** Get an Integration identified by its ID.

**Operation ID:** get-discovery-integration

**Parameters:**

* **id** (path) *(required)*: ID of the Integration

**Responses:**

* **200**: Integration (`application/json`)
* **204**: Integration Not Found
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/discovery-integrations/{id}"
```

### DELETE /api/v1/discovery-integrations/{id}

**Summary:** Delete an Integration

**Description:** Delete an Integration identified by its ID.

**Operation ID:** delete-discovery-integration

**Parameters:**

* **id** (path) *(required)*: ID of the Integration

**Responses:**

* **200**: Successfully deleted Integration
* **400**: Bad Request
* **401**: Not Authenticated
* **500**: Internal Server Error (`application/json`)

**cURL Example:**

```bash
curl -X DELETE \
  "https://your-tenant.aembit.io/api/v1/discovery-integrations/{id}"
```

### PATCH ‘/api/v1/discovery-integrations/{id}’

[Section titled “PATCH ‘/api/v1/discovery-integrations/{id}’”](#patch-apiv1discovery-integrationsid)

**Summary:** Patch an Integration

**Description:** Patch an Integration as identified by its ID.

**Operation ID:** patch-discovery-integration

**Parameters:**

* **id** (undefined) *(optional)*: any

**Request Body:** DiscoveryIntegrationPatchDTO

* Content-Type: application/json

* Schema: any

**Responses:**

* **‘200’**: Patched Integration

* Content-Type: application/json

* Schema: any

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X PATCH \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/discovery-integrations/your-integration-id"
```
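The `{"example": "data"}` body in the cURL examples is a placeholder. When a real value comes from a shell variable, it must be escaped before it is embedded in JSON, or a quote or backslash in the value will produce an invalid request body. The following is a minimal Bash sketch; `json_escape` and `patch_body` are hypothetical helpers, and the field name passed to `patch_body` must come from the `DiscoveryIntegrationPatchDTO` schema, which is not reproduced here. When `jq` is installed, `jq -n --arg` is a more complete alternative.

```bash
#!/usr/bin/env bash
# Hypothetical helper: escape a string for use inside a JSON string literal.
# Handles backslash, double quote, newline, carriage return, and tab only.
json_escape() {
  local s=$1 bs='\' q='"'
  s=${s//"$bs"/"$bs$bs"}   # escape backslashes first so later escapes are not doubled
  s=${s//"$q"/"$bs$q"}     # escape double quotes
  s=${s//$'\n'/"$bs"n}     # newline -> \n
  s=${s//$'\r'/"$bs"r}     # carriage return -> \r
  s=${s//$'\t'/"$bs"t}     # tab -> \t
  printf '%s' "$s"
}

# Hypothetical helper: build a one-field JSON object from a name and a value.
patch_body() {
  printf '{"%s": "%s"}' "$(json_escape "$1")" "$(json_escape "$2")"
}

# Usage sketch ("some-field" is a placeholder, not a documented field name):
#   curl -X PATCH -H "Content-Type: application/json" \
#     -d "$(patch_body "some-field" "$value")" \
#     "https://your-tenant.aembit.io/api/v1/discovery-integrations/your-integration-id"
```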

## Workload Event


### GET /api/v1/workload-events


**Summary:** Get a page of Workload Events

**Description:** Get a page of Workload Events.

**Operation ID:** get-workload-events

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional)

* **page** (query, optional)

* **per-page** (query, optional)

* **order** (query, optional)

* **search** (query, optional)

* **span-last-hours** (query, optional)

* **application-protocol** (query, optional)

* **severity** (query, optional)

* **source-workload** (query, optional)

* **target-workload** (query, optional)

**Responses:**

* **‘200’**: Page of Workload Events

* Content-Type: application/json

* Schema: any

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/workload-events"
```
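Filters such as `search` can contain spaces or `&`, which must be percent-encoded before they are appended to the URL. The following is a minimal Bash sketch, assuming these parameters are sent in the query string; `urlencode` and `workload_events_url` are hypothetical helpers, and the encoder is intended for ASCII input only.

```bash
#!/usr/bin/env bash
# Percent-encode a string for a URL query component. Characters outside the
# RFC 3986 unreserved set become %XX escapes; intended for ASCII input.
urlencode() {
  local s=$1 out='' c i
  for (( i = 0; i < ${#s}; i++ )); do
    c=${s:i:1}
    case $c in
      [[:alnum:].~_-]) out+=$c ;;
      *) printf -v c '%%%02X' "'$c"; out+=$c ;;
    esac
  done
  printf '%s' "$out"
}

# Hypothetical helper: build a Workload Events URL from name=value pairs that
# use the parameter names documented above, such as page, per-page, and
# span-last-hours. Sending them in the query string is an assumption.
workload_events_url() {
  local base=$1 query='' pair
  shift
  for pair in "$@"; do
    query+="${query:+&}$(urlencode "${pair%%=*}")=$(urlencode "${pair#*=}")"
  done
  printf '%s/api/v1/workload-events%s' "$base" "${query:+?$query}"
}
```

For example, `workload_events_url https://your-tenant.aembit.io page=1 per-page=25 span-last-hours=24` yields a URL that can be passed to curl unchanged. curl can also build the query string itself with `-G` plus one `--data-urlencode "name=value"` option per parameter, which avoids a hand-written encoder.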

### GET /api/v1/workload-events/{id}


**Summary:** Get a Workload Event

**Description:** Get a Workload Event.

**Operation ID:** get-workload-event

**Parameters:**

* **id** (path, required): ID of the Workload Event

* **X-Aembit-ResourceSet** (header, optional)

**Responses:**

* **‘200’**: Workload Event

* Content-Type: application/json

* Schema: any

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/workload-events/your-event-id"
```

## Health


### GET /api/v1/health


**Summary:** Aembit Cloud API Health

**Description:** Get the health of the Aembit Cloud API.

**Operation ID:** get-health

**Responses:**

* **‘200’**: API Health

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/health"
```
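A script that must wait until the API is reachable, for example a CI job, can poll this endpoint. The following is a minimal sketch, assuming Bash and curl; `wait_for_health` and its default retry values are hypothetical. Only the documented `200` response is treated as healthy, and the response body is not inspected because its schema is not documented here.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll the health endpoint until it answers HTTP 200
# or the attempts run out. Returns 0 when healthy, 1 otherwise.
wait_for_health() {
  local url=$1 attempts=${2:-5} delay=${3:-2} i status
  for (( i = 1; i <= attempts; i++ )); do
    # -s hides progress, -o /dev/null discards the body, and -w prints only
    # the status code ("000" when no HTTP response was received).
    status=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url") || true
    if [[ $status == 200 ]]; then
      return 0
    fi
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}

# Usage sketch:
#   wait_for_health "https://your-tenant.aembit.io/api/v1/health" 10 3 || exit 1
```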

## Integration


### GET /api/v1/integrations


**Summary:** Get a page of Integrations

**Description:** Get a page of Integrations.

**Operation ID:** get-integrations

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional)

* **page** (query, optional)

* **per-page** (query, optional)

* **filter** (query, optional)

* **order** (query, optional)

**Responses:**

* **‘200’**: Page of Integrations

* Content-Type: application/json

* Schema: any

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/integrations"
```

### POST /api/v1/integrations


**Summary:** Create an Integration

**Description:** Create an Integration.

**Operation ID:** post-integration

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional)

**Request Body:** IntegrationDTO

* Content-Type: application/json

* Schema: any

**Responses:**

* **‘201’**: Created Integration

* Content-Type: application/json

* Schema: any

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X POST \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/integrations"
```
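Most endpoints in this section accept an optional `X-Aembit-ResourceSet` parameter. The following sketch, assuming Bash 4.3 or later (for `local -n` namerefs) and that the parameter is sent as an HTTP header, builds the curl header options and adds `X-Aembit-ResourceSet` only when a Resource Set ID is supplied. `build_headers` and the `RESOURCE_SET_ID` variable are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: fill the caller's array (named by $1) with curl header
# options. X-Aembit-ResourceSet is added only when a Resource Set ID is given,
# because the parameter is optional; sending it as a header is an assumption.
build_headers() {
  local -n _headers=$1          # nameref to the caller's array (Bash 4.3+)
  local resource_set=$2
  _headers=(-H "Content-Type: application/json")
  if [[ -n $resource_set ]]; then
    _headers+=(-H "X-Aembit-ResourceSet: ${resource_set}")
  fi
}

# Usage sketch:
#   build_headers args "$RESOURCE_SET_ID"
#   curl -X POST "${args[@]}" -d '{"example": "data"}' \
#     "https://your-tenant.aembit.io/api/v1/integrations"
```

Expanding the array as `"${args[@]}"` keeps each header value, spaces included, as a single argument to curl.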

### PUT /api/v1/integrations


**Summary:** Update an Integration

**Description:** Update an Integration.

**Operation ID:** put-integration

**Parameters:**

* **X-Aembit-ResourceSet** (header, optional)

**Request Body:** IntegrationDTO

* Content-Type: application/json

* Schema: any

**Responses:**

* **‘200’**: Updated Integration

* Content-Type: application/json

* Schema: any

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X PUT \
  -H "Content-Type: application/json" \
  -d '{"example": "data"}' \
  "https://your-tenant.aembit.io/api/v1/integrations"
```

### GET /api/v1/integrations/{id}


**Summary:** Get an Integration

**Description:** Get an Integration.

**Operation ID:** get-integration

**Parameters:**

* **id** (path, required): ID of the Integration

* **X-Aembit-ResourceSet** (header, optional)

**Responses:**

* **‘200’**: Integration

* Content-Type: application/json

* Schema: any

* **‘204’**: Integration Not Found

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X GET \
  "https://your-tenant.aembit.io/api/v1/integrations/your-integration-id"
```

### DELETE /api/v1/integrations/{id}


**Summary:** Delete an Integration

**Description:** Delete an Integration as identified by its ID.

**Operation ID:** delete-integration

**Parameters:**

* **id** (path, required): ID of the Integration

* **X-Aembit-ResourceSet** (header, optional)

**Responses:**

* **‘200’**: Successfully deleted Integration

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash
curl -X DELETE \
  "https://your-tenant.aembit.io/api/v1/integrations/your-integration-id"
```
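For scripted cleanup, a deletion that does not succeed should stop the script instead of passing silently. The following is a minimal sketch, assuming Bash and curl: `--fail` makes curl exit non-zero for HTTP responses of 400 and above, such as the documented `400`, `401`, and `500`, so the function returns 0 only when the request succeeds. `delete_integration` is hypothetical, and authentication and `X-Aembit-ResourceSet` headers are omitted for brevity.

```bash
#!/usr/bin/env bash
# Hypothetical helper: delete an Integration by ID and fail loudly on errors.
# --fail makes curl exit non-zero on HTTP 400 and above; connection
# failures and timeouts also produce a non-zero exit status.
delete_integration() {
  local base=$1 id=$2
  if ! curl --fail --silent --show-error --max-time 10 -X DELETE \
      "${base}/api/v1/integrations/${id}" > /dev/null; then
    echo "failed to delete integration ${id}" >&2
    return 1
  fi
}

# Usage sketch:
#   delete_integration "https://your-tenant.aembit.io" "your-integration-id" || exit 1
```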

### PATCH /api/v1/integrations/{id}


**Summary:** Patch an Integration

**Description:** Patch an Integration as identified by its ID.

**Operation ID:** patch-integration

**Parameters:**

* **id** (path, required): ID of the Integration

* **X-Aembit-ResourceSet** (header, optional)

**Request Body:** IntegrationPatchDTO

* Content-Type: application/json

* Schema: any

**Responses:**

* **‘200’**: Patched Integration

* Content-Type: application/json

* Schema: any

* **‘400’**: Bad Request

* **‘401’**: Not Authenticated

* **‘500’**: Internal Server Error

* Content-Type: application/json

* Schema: any

**cURL Example:**

```bash